The results you display might indicate no significant result at all. With interactions up to fourth order, your "4 predictors" end up being more than that. Your model shows 8 regression coefficients beyond the intercept (from the perspective of overfitting, that's already 8 effective predictors). Although one coefficient beyond the intercept is nominally "significant," the overall distribution of p-values among coefficients doesn't seem that far from the uniform distribution you would get if there were no real non-zero coefficients.

Furthermore, your coding has suppressed individual coefficients for several predictors, which is typically not a good thing to do. A model with all individual coefficients and interactions would have many more. There's a big risk in just plugging in all high level interactions with a small data set: it might make it hard to find important true relationships as the "significance" reports necessarily take the number of estimated coefficients into account. Plus, it's really hard to wrap your mind around a 3-way interaction, let alone a 4-way interaction.

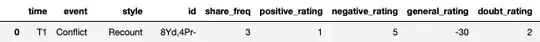

You have to decide what's most important to glean from your data. For example, it seems that your main interest is in the emotion-rating difference between the 2 time points, given the other predictors. In that case, you could simplify your model by just using the emotion-rating difference for each participant as the outcome, similar to paired t-tests. Then, with only 1 effective outcome per participant, you wouldn't need a mixed model.

You also have to decide how many interactions it makes sense to evaluate. If you use paired differences to remove time as a predictor, you still have a binary event, a binary style, and a continuous doubt_rating predictor. If you treat the continuous predictor linearly, that gives you 3 individual coefficients, 3 two-way interactions, and a 3-way interaction, for a total of 7 predictors. A rule of thumb for studies like this is that you can handle about 1 coefficient estimate per 10-20 observations without overfitting. Although you would have cut your number of effective observations from 320 to 160 by using paired differences, you would still be in a useful case/coefficient range.

To answer the specific question about how to test for overfitting: a good way is to use bootstrap validation of your model building process. The "optimism bootstrap" repeats the modeling on multiple bootstrapped samples of your data, evaluates each model on the full data set, and calculates how much better each model fits its corresponding bootstrap sample than it does the full data set (the "optimism" of each model). The averaged optimism among those models estimates the optimism in applying the full model to the underlying population from which you took the original data sample. An overfit model will be highly "optimistic" in that sense. See Chapter 7 of Elements of Statistical Learning, especially Section 7.4.

A final warning: do not rely solely on model summaries like the one you show when there are interactions, particularly interactions with continuous predictors whose values are far from 0. With standard effect/dummy coding, the coefficient estimate (and the "significance" of its difference from 0) for a predictor involved in an interaction (or for an interaction term that has higher-level interactions) is for the situation when all the interacting predictors are at reference levels (categorical interactors) or 0 (continuous interactors). That might not represent any real scenario consistent with your data. Instead, examine and compare predictions based on realistic combinations of predictor-variable values.