Why are the loss functions optimized in most statistical/machine learning problems usually "quadratic"?

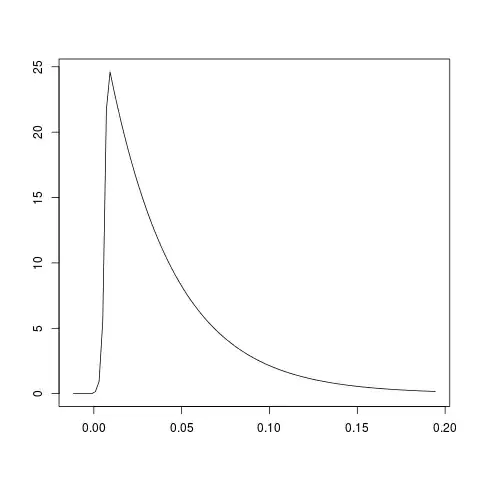

In statistics and machine learning, we try to minimize the "error" between a model and some hypothetical ideal function that perfectly describes the data. This error is typically measured as the mean squared error (MSE), and the function characterizing this error is what we minimize. Squaring is the defining feature of a quadratic function (i.e. its highest-power term has power 2). I have heard that loss functions are typically quadratic because quadratic functions have certain attractive theoretical properties that facilitate convergence in tasks such as root finding and minimization, but I am not sure whether this is true or what these properties are.
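To make the terminology concrete, here is a minimal sketch of the MSE and of one way to check whether it is quadratic in a model parameter. The toy data, the two one-parameter models, and the helper names (`mse`, `loss_linear`, `loss_nonlinear`, `second_differences`) are all assumptions made up for illustration. The check relies on the fact that a quadratic polynomial sampled on an evenly spaced grid has constant second differences.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

# Hypothetical toy data, invented for this illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([0.1, 0.9, 2.2, 2.8])

def loss_linear(w):
    # Model y_hat = w * x: the MSE is a quadratic polynomial in w.
    return mse(y, w * x)

def loss_nonlinear(w):
    # Model y_hat = tanh(w * x): the error is still squared,
    # but the loss is no longer a quadratic function of w.
    return mse(y, np.tanh(w * x))

def second_differences(f, ws):
    # Second finite differences of f on an evenly spaced grid ws.
    vals = np.array([f(w) for w in ws])
    return np.diff(vals, n=2)

ws = np.linspace(-2.0, 2.0, 9)  # evenly spaced values of the parameter w
d2_linear = second_differences(loss_linear, ws)
d2_nonlinear = second_differences(loss_nonlinear, ws)

# Constant second differences indicate a quadratic in w.
print(np.allclose(d2_linear, d2_linear[0]))
print(np.allclose(d2_nonlinear, d2_nonlinear[0]))
```

In this sketch the error is squared in both cases, yet only the loss of the linear model passes the constant-second-difference check, so "squared error" and "quadratic in the parameters" may not be the same thing.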

So, no matter how many parameters the loss function has (e.g. a neural network with many weights and layers), and no matter how complex its behavior: is it safe to assume that the loss functions optimized in most statistical/machine learning problems are usually "quadratic"? If so, does anyone know why?

Can someone please comment on this?

Thanks!

Note: I have heard similar arguments about the "non-convexity" of the loss functions optimized in most statistical/machine learning problems. Although mathematics has standard definitions for deciding whether a function is convex (e.g. https://en.wikipedia.org/wiki/Convex_function, "Definitions"), high-dimensional loss functions that involve random variables (i.e. "noisy" functions, as opposed to the deterministic functions of classical analysis) are almost always said to be non-convex. This has always made me wonder: even though optimization algorithms like gradient descent were designed for convex, noise-free functions, they are somehow still remarkably successful at optimizing non-convex, noisy functions.
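As a small illustration of that last point, here is a sketch of gradient descent with a noisy gradient on a non-convex function of one variable. The function `f`, the step size, the noise level, and the starting point are all hypothetical choices made for this example. It only shows the iterate settling near a local minimum of a toy function, and says nothing about why the method works on large models.

```python
import numpy as np

def f(x):
    # Non-convex toy function with two local minima (an assumed example).
    return x**4 - 3.0 * x**2 + x

def grad_f(x):
    # Exact derivative of f.
    return 4.0 * x**3 - 6.0 * x + 1.0

def noisy_gradient_descent(x0, lr=0.01, noise_std=0.5, steps=2000, seed=0):
    """Gradient descent where each gradient is corrupted by Gaussian noise."""
    rng = np.random.default_rng(seed)
    x = float(x0)
    for _ in range(steps):
        g = grad_f(x) + noise_std * rng.normal()  # noisy gradient estimate
        x -= lr * g                               # plain gradient step
    return x

x_start = 2.0
x_final = noisy_gradient_descent(x_start)

# Starting from x = 2.0, the nearest local minimum of f is around x = 1.13.
# It is not the global minimum, which lies near x = -1.30.
print(x_final, f(x_start), f(x_final))
```

With these settings the iterate hovers around the local minimum closest to the starting point rather than the global one, so this toy run is consistent with the usual caveat that gradient descent on a non-convex function finds a stationary point, not necessarily the best one.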