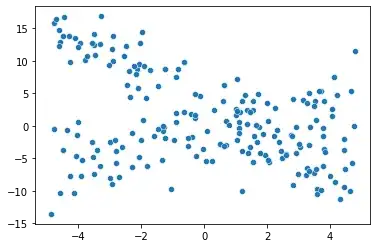

Assume you are given data generated from two linear equations plus noise, such as the data plotted above.
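For concreteness, here is a minimal sketch of how data of this kind could be generated. The slopes, biases, noise level, and the `make_two_line_data` helper are hypothetical placeholders, not the values or code from the Colab.

```python
import numpy as np

def make_two_line_data(n_per_line=100, noise_std=0.3, seed=0):
    """Sample noisy points from two lines and shuffle them together.

    The (slope, bias) pairs and noise level are assumed for illustration.
    """
    rng = np.random.default_rng(seed)
    params = [(2.0, 1.0), (-1.0, 4.0)]  # hypothetical (slope, bias) per line
    xs, ys = [], []
    for slope, bias in params:
        x = rng.uniform(0.0, 10.0, size=n_per_line)
        y = slope * x + bias + rng.normal(0.0, noise_std, size=n_per_line)
        xs.append(x)
        ys.append(y)
    x_all = np.concatenate(xs)
    y_all = np.concatenate(ys)
    # Shuffle so the ordering does not reveal which line a point came from.
    order = rng.permutation(x_all.size)
    return x_all[order], y_all[order]

x, y = make_two_line_data()
```

The only thing available afterwards is the pooled `(x, y)` cloud; the per-point line labels are deliberately discarded.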

How would you go about deconvoluting (separating) the two lines and recovering their slopes and biases?

Colab link for the problem setup. As a reminder, I'm trying to go in the reverse direction, from the convoluted data back to the equations. Thanks in advance!
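One possible direction, sketched under stated assumptions rather than offered as the definitive answer: treat the data as a mixture of two linear regressions and alternate between assigning each point to the closer line and refitting each line by least squares (a hard-assignment variant of EM). The synthetic data, the `fit_two_lines` helper, and all parameter values below are hypothetical and not taken from the Colab.

```python
import numpy as np

def fit_two_lines(x, y, n_restarts=30, n_iters=50, seed=0):
    """Alternate point-to-line assignment and least-squares refits.

    Returns a (2, 2) array of [slope, bias] rows, sorted by slope.
    """
    rng = np.random.default_rng(seed)
    best_sse, best_params = np.inf, None
    for _ in range(n_restarts):
        # Initialise each line as the exact fit through two random points.
        params = np.empty((2, 2))
        for k in range(2):
            idx = rng.choice(x.size, size=2, replace=False)
            params[k] = np.polyfit(x[idx], y[idx], deg=1)
        labels = None
        for _ in range(n_iters):
            # Vertical distance from every point to each candidate line.
            pred = params[:, :1] * x[None, :] + params[:, 1:]
            new_labels = np.abs(y[None, :] - pred).argmin(axis=0)
            if labels is not None and np.array_equal(new_labels, labels):
                break  # assignments stopped changing
            labels = new_labels
            if min(np.sum(labels == 0), np.sum(labels == 1)) < 2:
                break  # degenerate split; give up on this restart
            for k in range(2):
                mask = labels == k
                params[k] = np.polyfit(x[mask], y[mask], deg=1)
        # Score this restart by the squared residual to the closer line.
        pred = params[:, :1] * x[None, :] + params[:, 1:]
        sse = np.sum(np.min((y[None, :] - pred) ** 2, axis=0))
        if sse < best_sse:
            best_sse, best_params = sse, params.copy()
    return best_params[np.argsort(best_params[:, 0])]

# Hypothetical test data: y = 2x + 1 and y = -x + 4, Gaussian noise.
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 10.0, size=200)
from_first = rng.random(200) < 0.5
y = np.where(from_first, 2.0 * x + 1.0, -1.0 * x + 4.0)
y = y + rng.normal(0.0, 0.3, size=200)

est = fit_two_lines(x, y)  # rows are [slope, bias]
```

Multiple random restarts are used because the alternation can settle in a poor local optimum; the restart with the lowest total squared residual is kept. This sketch assumes exactly two lines and roughly equal noise on both. Points near where the lines cross are inherently ambiguous and may be assigned to either line.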