I already referred the posts here, here, here, here, here etc. Don't mark as duplicate please.

I have a dataset with 1008 rows with 16 input variables and 1 target variable.

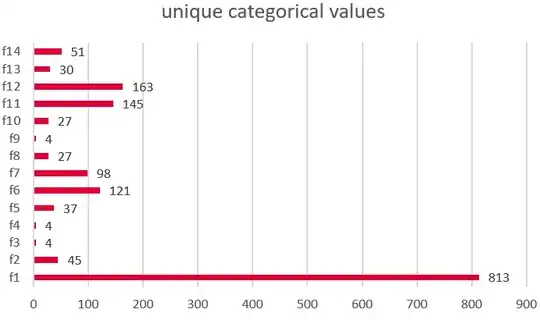

However, 14 of my input variables are categorical in nature and the number of unique categorical value that each feature contains is shown below

My questions are as follows

a) My problem can be solved by using traditional classification technique like logistic regression, random forests, decision trees etc. can these algos work if I am gonna one-hot encode my 14 input features? I suggest one-hot encode because my categorical input variables and their values doesn't have any inherent order/rank to them. So, basically they are like product_ids, part_ids, market_segment etc. So, there is no order but the cardinality is high

can traditional algos work with such large feature space (if I one-hot encode). Our objective is to predict which product (id) with which part id from which region sold by which seller failed? here, product is f1, part is f2, region is f3, seller is f4 etc.

b) Is there any other better way/alternative suggestion to do this? I ask because I understand my dataset size is only 1008 rows. So,we have to make sure that it doesn't overfit.

c) I will drop correlated input features to reduce no of input variables. Is there any other suggestions/help please?

d) Though dataset is small, we are working on extracting more data. So, we may have more data point in future as well. But for now, we have only 1006 rows and how best we can get our methodology right now. So, I can use it for future data points