If you have $n=25$ and observed number of successes

$x = 15,$ then you can test $H_0: p=0.5$ against $H_a: p\ne 0.5$ at the 5% level and you can

find a 95% confidence interval for $p.$

In R, the procedure binom.test provides both the

P-value of the test and a 95% confidence interval.

Specifically, the P-value $0.4244 > 0.05 = 5\%$

indicates that the observed $\hat p = x/n = 0.6$

is not significantly different from $p_0 = 0.5$ at the 5% level. Accordingly, the 95% CI $(0.387, 0.789)$ contains $p_0 = 0.5.$

binom.test(15, 25, p=.5)

Exact binomial test

data: 15 and 25

number of successes = 15, number of trials = 25,

p-value = 0.4244

alternative hypothesis:

true probability of success is not equal to 0.5

95 percent confidence interval:

0.3866535 0.7887452

sample estimates:

probability of success

0.6

By contrast, if you had more trials, say $n = 100$ with $x = 62$ successes, then you

could use an approximate normal test and confidence interval. [The chi-squared statistic shown in the printout below is the square of a normal test statistic.]

In this case, the null hypothesis $H_0: p = 0.05$ is rejected at the 2% level (thus also at the 5% level) in favor of the

alternative $H_a: p\ne 0.5$ and a 95% confidence

interval for $p$ is $(0.522, 0.709).$

prop.test(62, 100, p=.5, cor=F)

1-sample proportions test

without continuity correction

data: 62 out of 100, null probability 0.5

X-squared = 5.76, df = 1,

p-value = 0.0164 # aprx P-val

alternative hypothesis:

true p is not equal to 0.5

95 percent confidence interval:

0.5220976 0.7090240 # aprx 95% CI

sample estimates:

p

0.62

Notes: (1) In the second example, binom.test is still valid (as shown below). However, in elementary statistics books, you may find more

refences to approximate normal tests and CIs.

binom.test(62, 100, p=.5)$p.val

[1] 0.02097874 # exact P-value

binom.test(62, 100, p=.5)$conf.int

[1] 0.5174607 0.7152325 # exact 95% CI

attr(,"conf.level")

[1] 0.95

In R, notation with $ permits showing just

part of the output.

(2) @Sycorax (+1) has shown the $\hat p = x/n$ is the method-of-moments estimator (MME) of $p.$

In addition. $\hat p = x/n$ is the maximum likelihood estimator (MLE) of $p.$

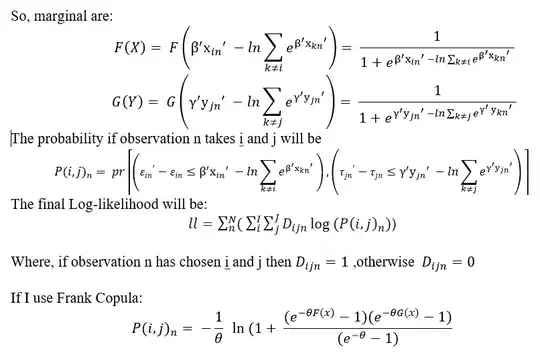

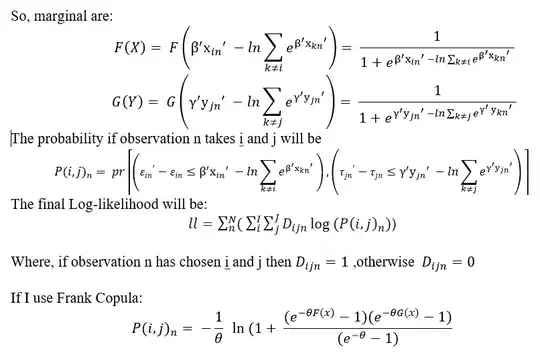

You can find the MLE by finding the value $p$ that maximizes the binomial likelihood

function, which is the binomial PDF viewed

as a function of $p$ for known $x.$

The

maximum can be found by the usual methods

of calculus. Below is a graph of a numerical solution,

in which a 'grid search' is used to find

p for which like is maximized. For $n=100, x = 62,$ the MLE is $\hat p = x/n = 0.62.$

p= seq(.1, .99, by = .001)

like = dbinom(62, 100, p)

mle = p[like==max(like)]; mle

[1] 0.62

The following plot illustrates the likelihood function and its maximum.

hdr = "Likelihood Function Showing LME"

plot(p, like, type="l", lwd=2,

ylab="Likelihood", xlab="x", main=hdr)

abline(v = mle, col="red")

abline(h=0, col="green2")

(3) Estimation of $n$ is not discussed in

this answer. Of course. if you have the data, then you can count the observations to find $n$ exactly for that sample. The difficult problem is to estimate $n$ more generally.

Perhaps see this Q&A and Google estimate binomial sample size for other references. including this preprint

and its References.