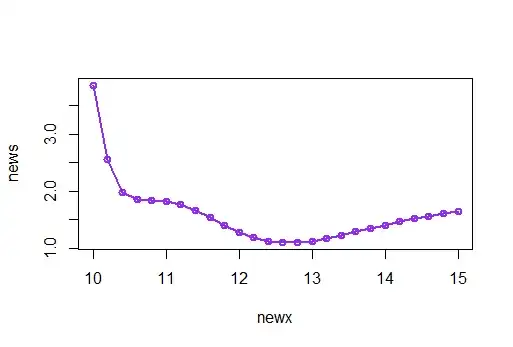

I am working on a neural network model that predicts natural gas future prices after 2020 using pre-pandemic data. The results seem impressive, but the learning process looks questionable: the error usually stops improving after about 20 epochs, even though I set `epochs = 2000`. Switching the optimizer to Adam does not help either.

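```python
import os

from tensorflow.keras.callbacks import ModelCheckpoint
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# Fully connected regressor: four ELU hidden layers, linear output for the price
NN_model = Sequential()
NN_model.add(Dense(32, kernel_initializer='normal', input_dim=train_data.shape[1], activation='elu'))
NN_model.add(Dense(128, kernel_initializer='normal', activation='elu'))
NN_model.add(Dense(64, kernel_initializer='normal', activation='elu'))
NN_model.add(Dense(16, kernel_initializer='normal', activation='elu'))
NN_model.add(Dense(1, kernel_initializer='normal', activation='linear'))

# Minimize mean absolute error with RMSprop ('adam' gave the same plateau)
NN_model.compile(loss='mean_absolute_error', optimizer='rmsprop', metrics=['mean_absolute_error'])
NN_model.summary()

# Write a checkpoint only when val_loss improves
# (tf.keras 2.x accepts .hdf5 here; Keras 3 requires a .keras suffix)
checkpoint_name = 'Weights-{epoch:03d}--{val_loss:.5f}.hdf5'
checkpoint_path = os.path.join('models/', checkpoint_name)
checkpoint = ModelCheckpoint(checkpoint_path, monitor='val_loss', verbose=1, save_best_only=True, mode='auto')
callbacks_list = [checkpoint]

# validation_split holds out the last 20% of the rows (taken before shuffling)
history = NN_model.fit(train_data, target, epochs=2000, batch_size=64,
                       validation_split=0.2, callbacks=callbacks_list).history

plot_loss(history)  # my plotting helper, defined elsewhere
```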
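To put a number on "stops improving after about 20 epochs", the `history` dict returned by `fit` can be scanned directly. The function below is a hypothetical helper (`plateau_summary` is not part of Keras), sketched under the assumption that `history['val_loss']` holds one float per epoch. It reports the epoch of the last improvement and the epoch at which a patience rule would have stopped, which is roughly the rule Keras' `EarlyStopping(monitor='val_loss', patience=..., min_delta=...)` applies.

```python
def plateau_summary(val_loss, min_delta=0.0, patience=20):
    """Return (best_epoch, stop_epoch) for a list of per-epoch validation losses.

    best_epoch: 1-based epoch of the last improvement, i.e. the last epoch whose
        loss beat the running best by more than min_delta (None if the list is empty).
    stop_epoch: 1-based epoch at which `patience` consecutive epochs without such an
        improvement have accumulated (None if that never happens).
    """
    best = float("inf")
    best_epoch = None
    wait = 0          # epochs since the last improvement
    stop_epoch = None
    for epoch, loss in enumerate(val_loss, start=1):
        if loss < best - min_delta:
            # Counts as an improvement: reset the patience counter
            best = loss
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            # Record only the first time the patience budget runs out
            if wait >= patience and stop_epoch is None:
                stop_epoch = epoch
    return best_epoch, stop_epoch
```

Usage with the training run above: `best_epoch, stop_epoch = plateau_summary(history['val_loss'], patience=20)`. If `best_epoch` is consistently near 20, the remaining ~1980 epochs add nothing beyond what `save_best_only=True` already keeps.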