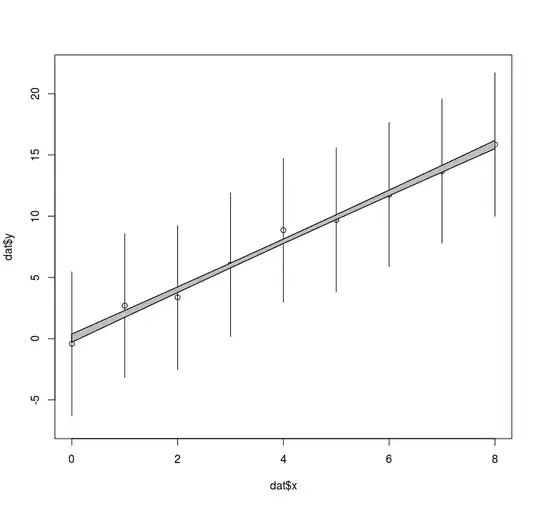

I have a dataset in which the dependent variable (y) has known and substantial error, and yet the observations happen to line up quite well along a line when plotted against the independent variable (x). Fitting a linear regression seems to substantially overestimate the precision of the slope estimate for y vs. x.

How can one appropriately propagate the known error in y through to the estimate of the slope?

I think there is part of an answer here, but it assumes the point fit a linear regression exactly: Calculate uncertainty of linear regression slope based on data uncertainty

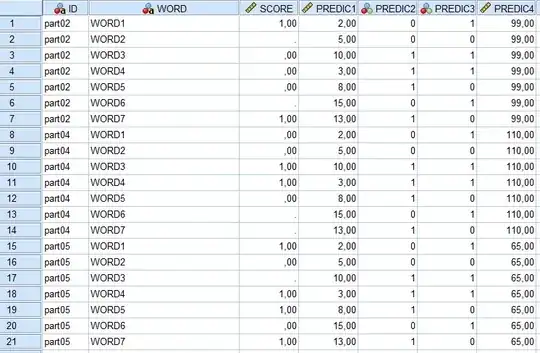

As a reproducible example in R:

# the data

set.seed(5)

dat <- data.frame(x = 0:8, y = seq(0,16, length.out=9)+rnorm(9, 0, 0.5), y.se = 3)

# fit a naive model, not considering error in y

mod <- lm(y ~ x, dat)

summary(mod)

preds <- predict(mod, se.fit = TRUE)

plot(dat$x, dat$y, ylim=c(-7,22))

arrows(dat$x, dat$y-1.96*dat$y.se, dat$x, dat$y+1.96*dat$y.se, length=0)

# plot the confidence interval on the linear regression

polygon(c(dat$x, rev(dat$x)), c(preds$fit+preds$se.fit, rev(preds$fit-preds$se.fit)), col = 'grey')

The slope is estimated very precisely near 2.0:

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.04670 0.32783 0.142 0.891

x 1.97546 0.06886 28.689 1.61e-08 ***

Visually, however, a slope as low as 0.7 or as high as 3.3 would still fit through the error bounds of y quite well.