Notice what they say

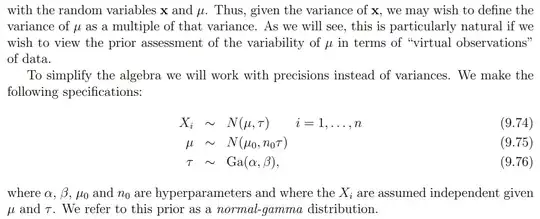

> To simplify the algebra we will work with precisions instead of variances. ... in summary, by starting with a normal-gamma prior, we obtain a normal-gamma posterior; i.e., we have found a conjugate prior for the mean and precision of the Gaussian.
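For reference, this is the standard normal-gamma update for $n$ observations $x_1, \dots, x_n$ with sample mean $\bar{x}$. It assumes the Gamma factor is parametrized by shape $\alpha$ and rate $\beta$. The symbols $\mu_0, \kappa_0, \alpha_0, \beta_0$ are the usual hyperparameter names and are not taken from the quoted text. Read this way, $\kappa_0$ acts like a prior sample size for the mean and $2\alpha_0$ like a prior sample size for the precision:

$$
\begin{aligned}
p(\mu, \tau) &= \mathcal{N}\!\left(\mu \mid \mu_0, (\kappa_0 \tau)^{-1}\right)\,\mathrm{Ga}(\tau \mid \alpha_0, \beta_0),\\
\mu_n &= \frac{\kappa_0 \mu_0 + n \bar{x}}{\kappa_0 + n}, \qquad \kappa_n = \kappa_0 + n,\\
\alpha_n &= \alpha_0 + \frac{n}{2}, \qquad
\beta_n = \beta_0 + \frac{1}{2} \sum_{i=1}^{n} (x_i - \bar{x})^2 + \frac{\kappa_0 n (\bar{x} - \mu_0)^2}{2(\kappa_0 + n)}.
\end{aligned}
$$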

They parametrize the Gaussian by its mean and precision $\tau$, where the precision is the inverse of the variance, $\tau = 1/\sigma^2$. Gathering more data increases the posterior precision, and higher precision means lower variance, so everything behaves as expected.
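Here is a minimal sketch of the normal-gamma update, using only the standard library. It shows the posterior concentrating as $n$ grows: the posterior variance of the mean shrinks, and the expected precision approaches the true value. The prior values, the simulated data, and the helper name `normal_gamma_update` are hypothetical choices for illustration. The Gamma is assumed to use the shape/rate parametrization.

```python
import random

def normal_gamma_update(x, mu0, kappa0, alpha0, beta0):
    """Hypothetical helper: posterior normal-gamma hyperparameters.

    Assumes Ga(tau | alpha, beta) with shape alpha and rate beta.
    """
    n = len(x)
    xbar = sum(x) / n
    ss = sum((xi - xbar) ** 2 for xi in x)  # sum of squared deviations
    kappa_n = kappa0 + n                    # prior "sample size" for mu plus n
    mu_n = (kappa0 * mu0 + n * xbar) / kappa_n
    alpha_n = alpha0 + n / 2
    beta_n = beta0 + 0.5 * ss + kappa0 * n * (xbar - mu0) ** 2 / (2 * kappa_n)
    return mu_n, kappa_n, alpha_n, beta_n

random.seed(0)
# Simulated data: true mean 2.0, true sd 0.5, so true precision tau = 4.
data = [random.gauss(2.0, 0.5) for _ in range(1000)]
prior = (0.0, 1.0, 1.0, 1.0)  # hypothetical (mu0, kappa0, alpha0, beta0)

results = {}
for n in (10, 100, 1000):
    mu_n, kappa_n, alpha_n, beta_n = normal_gamma_update(data[:n], *prior)
    expected_tau = alpha_n / beta_n                 # E[tau | data]
    var_mu = beta_n / (kappa_n * (alpha_n - 1))     # Var[mu | data] (Student-t marginal)
    results[n] = (mu_n, expected_tau, var_mu)
    print(f"n={n:4d}  mu_n={mu_n:.3f}  E[tau]={expected_tau:.2f}  Var[mu]={var_mu:.6f}")
```

Var[mu] is the variance of the Student-t marginal of $\mu$, $\beta_n / (\kappa_n(\alpha_n - 1))$, which is finite only when $\alpha_n > 1$. That condition holds here because $\alpha_0 = 1$ and $n \ge 10$.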

The "virtual observations" interpretation is easiest to explain with the beta-binomial or Dirichlet-categorical models, where the prior parameters can be thought of as counts of "successes" (or of each category) observed a priori. With other models, this intuition may be harder to grasp.
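A small sketch of the pseudo-count reading for the Dirichlet-categorical model (beta-binomial is the two-category case). The prior $\mathrm{Dir}(2, 2, 2)$, the observations, and the helper names are hypothetical. The posterior is the prior parameters plus the observed counts. The posterior predictive probabilities match the relative frequencies you would get by adding two pseudo-observations of each category to the real data:

```python
from collections import Counter

def dirichlet_posterior(alpha, observations):
    """Hypothetical helper: conjugate update, alpha_k + count_k for each category k."""
    counts = Counter(observations)
    return [a + counts.get(k, 0) for k, a in enumerate(alpha)]

def posterior_predictive(alpha_post):
    """Probability that the next observation falls in each category."""
    total = sum(alpha_post)
    return [a / total for a in alpha_post]

prior = [2, 2, 2]               # hypothetical prior: "2 virtual observations" per category
obs = [0, 0, 1, 0, 2, 0]        # real data: category 0 four times, 1 and 2 once each
post = dirichlet_posterior(prior, obs)
probs = posterior_predictive(post)

# The same numbers from plain frequencies after prepending the virtual observations.
virtual = [0, 0, 1, 1, 2, 2]
combined = Counter(virtual + obs)
empirical = [combined[k] / len(virtual + obs) for k in range(3)]

print(post)       # [6, 3, 3]
print(probs)      # [0.5, 0.25, 0.25]
print(empirical)  # [0.5, 0.25, 0.25]
```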