I want to train a classifier that helps me sort a large directory of fonts. I know that I could analyze the font names and the contents of the TTF and OTF files, but for educational reasons I want to do it with machine learning.

For every font I rendered a sample image that helps me decide whether I want to use the file in other projects. That means I have two classes, 'yes' and 'no'. I also manually labeled 4389 images (384x384). The images were chosen at random.

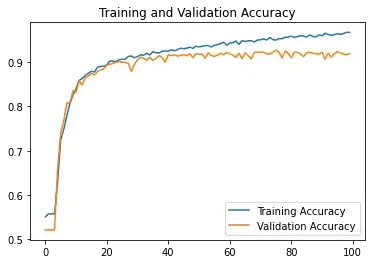

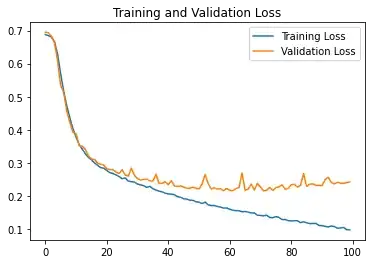

Currently I am testing a network similar to VGG16, and I get an accuracy of ~90%:

Model: "sequential_2"

_________________________________________________________________

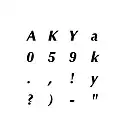

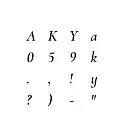

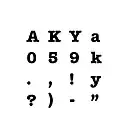

Layer (type) Output Shape Param #

=================================================================

rescaling_2 (Rescaling) (None, 384, 384, 1) 0

conv2d_2 (Conv2D) (None, 384, 384, 64) 640

conv2d_3 (Conv2D) (None, 384, 384, 64) 102464

max_pooling2d_2 (MaxPooling (None, 192, 192, 64) 0

2D)

conv2d_4 (Conv2D) (None, 192, 192, 128) 73856

conv2d_5 (Conv2D) (None, 192, 192, 128) 147584

max_pooling2d_3 (MaxPooling (None, 96, 96, 128) 0

2D)

conv2d_6 (Conv2D) (None, 96, 96, 256) 295168

conv2d_7 (Conv2D) (None, 96, 96, 256) 590080

max_pooling2d_4 (MaxPooling (None, 48, 48, 256) 0

2D)

conv2d_8 (Conv2D) (None, 48, 48, 512) 1180160

max_pooling2d_5 (MaxPooling (None, 24, 24, 512) 0

2D)

conv2d_9 (Conv2D) (None, 24, 24, 512) 2359808

max_pooling2d_6 (MaxPooling (None, 12, 12, 512) 0

2D)

flatten_2 (Flatten) (None, 73728) 0

dense_12 (Dense) (None, 1024) 75498496

dense_13 (Dense) (None, 1024) 1049600

dense_14 (Dense) (None, 2) 2050

=================================================================

Total params: 81,299,906

Trainable params: 81,299,906

Non-trainable params: 0

_________________________________________________________________
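As a sanity check on the summary above, the parameter counts can be recomputed by hand. This is only a sketch: the summary does not state the kernel sizes, so the values below (5x5 for `conv2d_3`, 3x3 for every other convolution) are inferred from the counts and are an assumption, and the helper names are made up for illustration.

```python
# Recompute the parameter counts listed in the model summary.
# ASSUMPTION: kernel sizes are not shown in the summary; they are
# inferred from the counts (5x5 for conv2d_3, 3x3 elsewhere).

def conv2d_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """Weights plus one bias per unit for a Dense layer."""
    return inputs * units + units

layers = {
    "conv2d_2": conv2d_params(3, 1, 64),
    "conv2d_3": conv2d_params(5, 64, 64),
    "conv2d_4": conv2d_params(3, 64, 128),
    "conv2d_5": conv2d_params(3, 128, 128),
    "conv2d_6": conv2d_params(3, 128, 256),
    "conv2d_7": conv2d_params(3, 256, 256),
    "conv2d_8": conv2d_params(3, 256, 512),
    "conv2d_9": conv2d_params(3, 512, 512),
    "dense_12": dense_params(12 * 12 * 512, 1024),  # flattened 12x12x512 input
    "dense_13": dense_params(1024, 1024),
    "dense_14": dense_params(1024, 2),
}

total = sum(layers.values())
dense_12_share = layers["dense_12"] / total

for name, count in layers.items():
    print(f"{name:10s} {count:>12,d}")
print(f"total      {total:>12,d}")
print(f"dense_12 share of all parameters: {dense_12_share:.1%}")
```

If the inferred kernel sizes are right, the total matches the summary, and almost all of the parameters sit in the first dense layer after the flatten.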

And the statement to compile the network is:

    model.compile(
        optimizer=tf.keras.optimizers.Adam(
            learning_rate=0.000001, beta_1=0.9, beta_2=0.999, epsilon=1e-07
        ),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(),
        metrics=['accuracy']
    )

The labeled images are split 80/20 into training and test sets.
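To make the split sizes concrete, here is a minimal sketch of a seeded random 80/20 split using only the standard library. The file names, the seed, and the function `split_80_20` are hypothetical; the post does not say how the split was actually produced.

```python
import random

def split_80_20(items, train_fraction=0.8, seed=42):
    """Shuffle a copy of the items with a fixed seed and cut it in two."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for the 4389 labeled images.
labeled = [f"font_{i:04d}.png" for i in range(4389)]

train_files, test_files = split_80_20(labeled)
print(len(train_files), len(test_files))
```

With 4389 labeled images this leaves fewer than 900 images for testing, so a single accuracy figure measured on that set carries a few percentage points of uncertainty.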

I think training is done at step 20. When I use the network to label 1000 random new samples, I get 38 wrong predictions. That is an accuracy of 96%. I do not understand why the difference from the reported metric (90%) is so large.
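One way to judge whether the two figures really disagree is to put a confidence interval around each. The sketch below uses the Wilson score interval with only the standard library. It assumes the 90% figure was measured on the 20% test split (about 878 images, so roughly 790 correct); those two numbers are my assumption, not something stated above.

```python
import math

def wilson_interval(correct, total, z=1.96):
    """Approximate 95% Wilson score interval for an accuracy estimate."""
    p = correct / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return center - half, center + half

# 1000 new samples with 38 wrong predictions (from the post).
new_accuracy = (1000 - 38) / 1000
new_low, new_high = wilson_interval(1000 - 38, 1000)

# ASSUMPTION: ~90% accuracy on ~878 test images, i.e. about 790 correct.
test_low, test_high = wilson_interval(790, 878)

print(f"new samples: {new_accuracy:.1%} ({new_low:.1%} to {new_high:.1%})")
print(f"test split:  {790 / 878:.1%} ({test_low:.1%} to {test_high:.1%})")
print("intervals overlap:", test_high >= new_low)
```

Under these assumptions the interval for the new samples is roughly 95% to 97% and the one for the test split roughly 88% to 92%, so the gap is probably larger than sampling noise alone would explain.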

Question:

What can I change to reach a higher accuracy? 90% already helps a lot with labeling new samples, but it is still time-consuming.