I tested the response of a weed's biomass (dry weight- DW) to a herbicide sprayed at three different phenological stages (4-6, 6-8 and 8-10 true leaves). The experimental design also included four different populations of this weed (biotypes collected from different geographical regions).

Here is the structure of my data, It’s a relatively small data set with 5 replications for each combination:

str(data)

tibble [120 x 4] (S3: tbl_df/tbl/data.frame)

$ population: Factor w/ 4 levels "A","B","C","D": 1 1 1 1 1 2 2 2 2 2 ...

$ pheno : Factor w/ 3 levels "4_6","6_8","8_10": 1 1 1 1 1 1 1 1 1 1 ...

$ treatment : Factor w/ 2 levels "Control","Herbicide": 2 2 2 2 2 2 2 2 2 2 ...

$ DW : num [1:120] 0.69 0.09 0.89 0.84 0.92 0.29 0.6 0.85 0.38 0.15 ...

The response variable DW is considered normally distributed usually, and initially I thought about running a simple LM. However, for the youngest phenological stage, 4-6 TL, the herbicide injured the plants, especially for one of the populations (D) which is more sensitive. When I run shapiro.test() for each phenological stage across population for the no-spray control, the DW is normally distributed and the same for the less sensitive pheno stages 6-8 and 8-10 that were sprayed with the herbicide. But for the herbicide-treated 4-6 stage the p.value is 0.0018.

I'm not a statistician, and trying to grasp the idea of conditional distribution and GLM for a while now. Somebody suggested I run a GAM, but I read this post saying that a categorical-variables-only gam is essentially a GLM. Is it ok to run LM even though not all of the conditional distributions are normally distributed? Or should I run a GAM? or GLM with family=gaussian? There is a strong possibility I misunderstood the hole conditional distribution matter altogether.. And if this is the case I would appreciate some direction to relevant sources of information!

--Edit--

Given the much appreciated comments, I would like to add a follow-up question. Fitting a GLM with family=Gamma(link="log") does seem more reasonable, and that is what I did:

Call:

glm(formula = DW ~ pheno * treatment + population, family = Gamma(link = "log"),

data = data)

Deviance Residuals:

Min 1Q Median 3Q Max

-2.53916 -0.21793 -0.02238 0.18246 0.93584

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.347274 0.122428 -2.837 0.005422 **

pheno6_8 0.373920 0.141367 2.645 0.009353 **

pheno8_10 0.494900 0.141367 3.501 0.000669 ***

treatmentHerbicide -0.538135 0.141367 -3.807 0.000231 ***

populationB -0.087105 0.115426 -0.755 0.452061

populationC 0.006129 0.115426 0.053 0.957750

populationD -0.427240 0.115426 -3.701 0.000335 ***

pheno6_8:treatmentHerbicide 0.702339 0.199923 3.513 0.000642 ***

pheno8_10:treatmentHerbicide 0.736103 0.199923 3.682 0.000359 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for Gamma family taken to be 0.1998466)

Null deviance: 64.538 on 119 degrees of freedom

Residual deviance: 45.280 on 111 degrees of freedom

AIC: 154.45

Number of Fisher Scoring iterations: 9

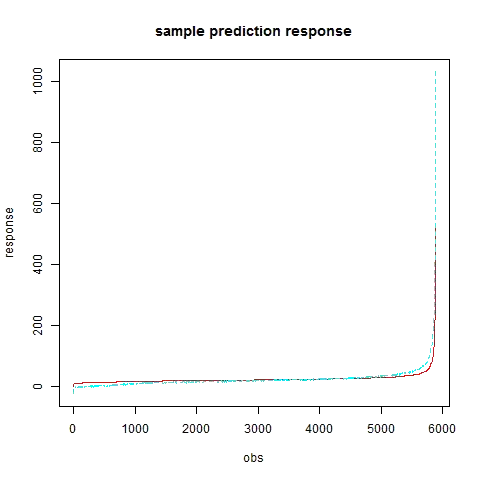

Here are some diagnostic plots:

However, when I fit a GLM with family=gaussian(link="identity") (which is essentially a LM?) the fit appears to be much better:

Call:

glm(formula = DW ~ pheno * treatment + population, family = gaussian(),

data = data)

Deviance Residuals:

Min 1Q Median 3Q Max

-0.50146 -0.14168 -0.02424 0.11672 0.54109

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.65541 0.06433 10.187 < 2e-16 ***

pheno6_8 0.30100 0.07429 4.052 9.45e-05 ***

pheno8_10 0.40600 0.07429 5.465 2.87e-07 ***

treatmentHerbicide -0.20995 0.07429 -2.826 0.005588 **

populationB -0.03333 0.06066 -0.550 0.583729

populationC 0.06600 0.06066 1.088 0.278901

populationD -0.20830 0.06066 -3.434 0.000837 ***

pheno6_8:treatmentHerbicide 0.35795 0.10506 3.407 0.000915 ***

pheno8_10:treatmentHerbicide 0.43745 0.10506 4.164 6.21e-05 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.05518593)

Null deviance: 17.0922 on 119 degrees of freedom

Residual deviance: 6.1256 on 111 degrees of freedom

AIC: 3.5442

Number of Fisher Scoring iterations: 2

And the same plots for the second model:

Both models are not without problems, especially for those plants of the sensitive population which all died (and resulted in a near-zero DW and small variance), but judging by what I know: lower AIC, the deviance of the residuals, the diagnostic plots.. normal approximation works better. Why is this happening? And which is the correct choice (if any)?