Assume that we have two classes, $X$ and $Y$, and we compute their means $X_\mu$ and $Y_\mu$ and variances $X_\sigma$ and $Y_\sigma$.

With that, we could use linear discriminant analysis to expand the distance between $X$ and $Y$.
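For reference, the two-class Fisher criterion that LDA optimizes can be sketched in NumPy. This is a minimal sketch, not a library implementation. It assumes both classes share one covariance matrix (the usual LDA assumption) and uses made-up data. `fisher_direction` and `fisher_ratio` are hypothetical helper names. The sketch computes the direction $w \propto S_W^{-1}(X_\mu - Y_\mu)$, where $S_W$ is the within-class scatter matrix. It then measures the squared gap between the projected class means relative to the projected within-class scatter.

```python
import numpy as np

def fisher_direction(X, Y):
    """Unit vector w proportional to S_W^{-1} (mu_X - mu_Y), the two-class Fisher LDA direction."""
    mu_x, mu_y = X.mean(axis=0), Y.mean(axis=0)
    # Within-class scatter: summed scatter matrices of the two classes
    S_w = (X - mu_x).T @ (X - mu_x) + (Y - mu_y).T @ (Y - mu_y)
    w = np.linalg.solve(S_w, mu_x - mu_y)
    return w / np.linalg.norm(w)

def fisher_ratio(w, X, Y):
    """Fisher criterion J(w): squared gap between projected means over projected within-class scatter."""
    px, py = X @ w, Y @ w
    between = (px.mean() - py.mean()) ** 2
    within = ((px - px.mean()) ** 2).sum() + ((py - py.mean()) ** 2).sum()
    return between / within

# Hypothetical data: two classes sharing a strongly correlated covariance
rng = np.random.default_rng(0)
cov = np.array([[1.0, 0.9], [0.9, 1.0]])
X = rng.multivariate_normal([0.0, 0.0], cov, size=200)
Y = rng.multivariate_normal([1.0, 0.0], cov, size=200)

w = fisher_direction(X, Y)
print("LDA direction:", w)
print("J along x-axis (true mean-difference axis):", fisher_ratio(np.array([1.0, 0.0]), X, Y))
print("J along LDA direction:", fisher_ratio(w, X, Y))
```

On correlated data like this, the LDA direction is generally not parallel to the line joining the two means. Because $w$ maximizes $J$ for the sample scatter, the ratio along $w$ is at least as large as along any other direction, including the x-axis.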

But when I look at images of linear discriminant analysis, it seems that the data has only been "rotated".

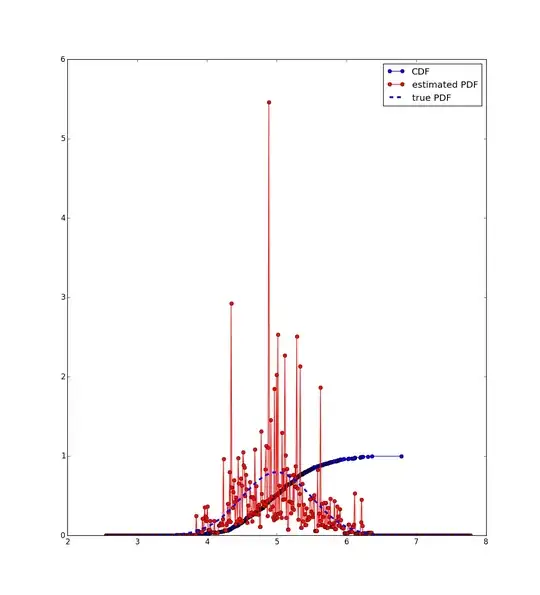

Here is a good example of how to interpret linear discriminant analysis, where one axis is the mean and the other is the variance.

Question:

What's the point of using linear discriminant analysis if I already know the mean and variance of each class?

I mean, suppose I have three classes, $X$, $Y$ and $Z$, and I know the mean and variance of each. Then I get an unknown sample $U$. If I want to find out which class $U$ belongs to, I could just use the Pythagorean theorem (the Euclidean distance from $U$ to each class mean). I don't need to rotate the data.
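The nearest-mean rule described above can be sketched in a few lines of NumPy. This is a minimal sketch. The helper name `nearest_mean` and the class means and sample $U$ are hypothetical values chosen only for illustration.

```python
import numpy as np

def nearest_mean(u, means):
    """Return the label whose class mean is closest to u (Euclidean distance)."""
    labels = list(means)
    # Pythagorean theorem in n dimensions: sqrt of the sum of squared coordinate differences
    dists = [np.linalg.norm(u - means[k]) for k in labels]
    return labels[int(np.argmin(dists))]

# Hypothetical class means for X, Y and Z
means = {
    "X": np.array([0.0, 0.0]),
    "Y": np.array([4.0, 0.0]),
    "Z": np.array([0.0, 4.0]),
}
U = np.array([3.0, 1.0])
print(nearest_mean(U, means))  # Y
```

Note that this rule uses only the class means. The variances play no part in the decision.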

It seems that linear discriminant analysis only rotates the data, so I don't see the use case for it.

Or could it be that linear discriminant analysis also increases the distance between the classes?