Suppose $X,Y,Z\sim N(0,\sigma^2)$. If $X$ is independent of $Y$ and $X$ is independent of $Z$ (but $Y$ and $Z$ are not independent), is $X$ independent of $\max(Y,Z)$?


If the normality is of the marginal distributions rather than the joint, $X$ can be independent of $Y$ and independent of $Z$ but might not be independent of $(Y,Z)$. – Glen_b Dec 02 '21 at 09:21

1 Answer

If $X$ were not independent of $\max(Y,Z),$ that would seem to contradict a basic theorem that when two variables $U$ and $V$ are independent, then measurable functions of them $f(U),$ $g(V)$ are necessarily independent. That applies here to $U=X$ and $V=(Y,Z)$ with $f$ the identity and $g$ the maximum function. I suppose such a counterexample, if it exists, must involve a case where $X$ is not independent of $(Y,Z)$ even though $(X,Y)$ and $(X,Z)$ are independent. There is a standard example of that, which I will briefly describe.

Let $(U,V,W)$ be independent standard Normal variables. Define $S$ to be the sign of $UVW:$ that is, $S=1$ when $UVW \ge 0$ and otherwise $S=-1.$ Set $(X,Y,Z)=(SU,SV,SW).$ This guarantees $XYZ\ge 0,$ as you can readily check.

It is straightforward to check that all three pairs $(X,Y),$ $(X,Z),$ and $(Y,Z)$ have standard binormal distributions: in particular, all three pairs are independent. However, consider the case $\max(Y,Z)\lt 0.$ A negative maximum requires both $Y$ and $Z$ to be negative. Yet, because $XYZ\ge 0,$ $X$ must be positive.

Since the unconditional distribution of $X$ is Normal, it has positive chances of being negative. Consequently, conditioning $X$ on the event $Y\lt 0, Z \lt 0$ has changed the probability distribution of $X.$ Since the conditioning event does not have zero probability $$\Pr(\max(Y,Z)\lt 0)=\Pr(Y\lt 0,Z\lt 0)=\Pr(Y\lt 0)\Pr(Z\lt 0) = \left(\frac{1}{2}\right) \left(\frac{1}{2}\right) = \frac{1}{4},$$ it demonstrates a lack of independence between $X$ and $\max(Y,Z),$ QED.
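The pairwise claim used above ("straightforward to check") can be verified along the following lines. This is only a sketch, and the set $A$ is notation introduced here for illustration. Because $\operatorname{sign}(W)$ is $\pm 1$ with probability $1/2$ each and is independent of $(U,V),$ and because $S=\operatorname{sign}(UV)\operatorname{sign}(W)$ almost surely,

$$\Pr(S=1\mid U,V)=\Pr\big(\operatorname{sign}(W)=\operatorname{sign}(UV)\mid U,V\big)=\frac{1}{2}.$$

Thus $S$ is a fair random sign that is independent of the pair $(U,V).$ Since $(X,Y)=S\cdot(U,V)$ and the standard binormal distribution is unchanged by negation, for any Borel set $A\subset\mathbb{R}^2$

$$\Pr\big((X,Y)\in A\big)=\frac{1}{2}\Pr\big((U,V)\in A\big)+\frac{1}{2}\Pr\big(-(U,V)\in A\big)=\Pr\big((U,V)\in A\big).$$

The same argument applies to $(X,Z)$ and $(Y,Z).$ It does not extend to the triple, because $S$ is a function of $(U,V,W)$ and so is not independent of it.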

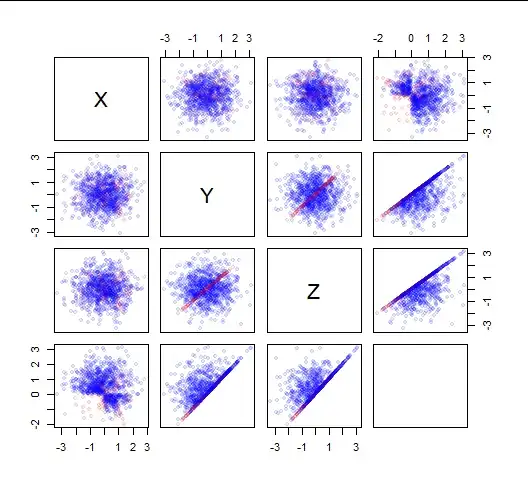

Here is a scatterplot matrix of one thousand draws from $(X,Y,Z,\max(Y,Z)).$ The circular clouds indicate independent pairs, while the distorted clouds demonstrate lack of independence.
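A simulation along these lines can be sketched in Python with only the standard library. This is not the code that produced the figure; the helper names `corr` and `draw_xyz`, the seed, and the sample size are all assumptions made for illustration. Near-zero sample correlations are only a sanity check on the pairwise claim (they do not prove independence), whereas the conditional frequency of $X\lt 0$ given $\max(Y,Z)\lt 0$ shows the dependence directly.

```python
import random
from statistics import fmean


def corr(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    ma, mb = fmean(a), fmean(b)
    cov = fmean((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = fmean((p - ma) ** 2 for p in a)
    var_b = fmean((q - mb) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


def draw_xyz(rng):
    """One draw of (X, Y, Z) = (S*U, S*V, S*W), where S is the sign of U*V*W."""
    u, v, w = (rng.gauss(0.0, 1.0) for _ in range(3))
    s = 1.0 if u * v * w >= 0 else -1.0  # S = 1 when UVW >= 0, otherwise -1
    return s * u, s * v, s * w


rng = random.Random(0)  # arbitrary seed, for reproducibility only
draws = [draw_xyz(rng) for _ in range(100_000)]
xs, ys, zs = zip(*draws)
maxes = [max(y, z) for y, z in zip(ys, zs)]

# Pairwise sample correlations: each should be close to zero.
pair_corrs = {"XY": corr(xs, ys), "XZ": corr(xs, zs), "YZ": corr(ys, zs)}

# Condition on the event max(Y, Z) < 0, whose probability is 1/4.
x_given_neg_max = [x for x, m in zip(xs, maxes) if m < 0]
frac_neg_max = len(x_given_neg_max) / len(xs)
frac_x_neg = sum(x < 0 for x in xs) / len(xs)
frac_x_neg_given_neg_max = sum(x < 0 for x in x_given_neg_max) / len(x_given_neg_max)

print("pairwise correlations:", pair_corrs)
print("Pr(max(Y,Z) < 0) estimate:", frac_neg_max)
print("Pr(X < 0) estimate:", frac_x_neg)
print("Pr(X < 0 | max(Y,Z) < 0) estimate:", frac_x_neg_given_neg_max)
```

Because $XYZ\ge 0$ holds for every draw, the last frequency is zero by construction, while the unconditional frequency of $X\lt 0$ is close to one half.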

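We are not quite done. The question asks about the case where $(Y,Z)$ is not independent, whereas in this example $(Y,Z)$ is independent. We can fix that by using a mixture of the previous example and another one. Consider, for instance, the distribution of $(X_1, Y_1, Y_1)$ where $(X_1,Y_1)$ is standard binormal, so that the second and third components coincide. A mixture of this "contaminating distribution" with the previous one does the trick: in both components the first variable is independent of the second and of the third, with standard binormal pairs, so the same holds in the mixture, while the coinciding components make $Y$ and $Z$ dependent.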

Rather than dwelling on the mathematical details, let me just show you a couple thousand observations from this mixed distribution which is 90% the original example (blue dots) and 10% of the contaminating distribution (red dots).

The top row has the key characteristics of the previous example--especially an obvious lack of independence between $X$ and $\max(Y,Z)$ (the correlation coefficient is around $-0.19,$ far from zero), but now the $Y,Z$ scatterplots show lines of red dots, demonstrating the lack of independence of $(Y,Z).$
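The mixture can be simulated in the same way. The sketch below is again standard-library Python and not the original plotting code; `draw_mixture`, the parameter name `p_contam`, the seed, and the sample size are hypothetical choices. It draws from the contaminating distribution $(X_1,Y_1,Y_1)$ with probability $0.10$ and from the earlier example otherwise, then reports a few sample correlations.

```python
import random
from statistics import fmean


def corr(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    ma, mb = fmean(a), fmean(b)
    cov = fmean((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = fmean((p - ma) ** 2 for p in a)
    var_b = fmean((q - mb) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


def draw_mixture(rng, p_contam=0.10):
    """One draw (x, y, z, contaminated) from the 90%/10% mixture.

    With probability p_contam, return (X1, Y1, Y1) with X1, Y1 independent
    standard Normal; otherwise return (S*U, S*V, S*W) with S = sign(U*V*W).
    """
    if rng.random() < p_contam:
        x1, y1 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        return x1, y1, y1, True
    u, v, w = (rng.gauss(0.0, 1.0) for _ in range(3))
    s = 1.0 if u * v * w >= 0 else -1.0
    return s * u, s * v, s * w, False


rng = random.Random(1)  # arbitrary seed, for reproducibility only
draws = [draw_mixture(rng) for _ in range(100_000)]
xs, ys, zs, flags = zip(*draws)
maxes = [max(y, z) for y, z in zip(ys, zs)]

frac_contam = sum(flags) / len(flags)   # share of "red dot" draws
corr_xy = corr(xs, ys)                  # should be close to zero
corr_xz = corr(xs, zs)                  # should be close to zero
corr_yz = corr(ys, zs)                  # nonzero: Y and Z coincide in the contaminated draws
corr_x_max = corr(xs, maxes)            # clearly negative

print("contaminated share:", frac_contam)
print("corr(X, Y):", corr_xy, " corr(X, Z):", corr_xz)
print("corr(Y, Z):", corr_yz)
print("corr(X, max(Y, Z)):", corr_x_max)
```

In this mixture the population correlation of $Y$ and $Z$ equals the contamination weight, $0.10,$ because the two coincide in the contaminated draws and are uncorrelated otherwise. The sample correlation between $X$ and $\max(Y,Z)$ should land near the value of about $-0.19$ quoted above.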


+1 The joint density of pairwise independent but not mutually independent standard normal random variables is stated explicitly in the latter half of [this answer](https://stats.stackexchange.com/a/180727/6633) from 6 years ago. The answer also includes a sketch of the proof of pairwise independence. Also, a minor typo: in your third paragraph, $(X,Y), (X,Z), (Y,Z)$ all have standard _normal_ distributions, not standard _binomial_ distributions. – Dilip Sarwate Dec 02 '21 at 22:26


Thanks, @Dilip: I knew that answer was around here somewhere! I have corrected "binomial" to the intended "binormal" and linked to your answer. – whuber Dec 02 '21 at 23:02