I'm a photographer working on a personal project where I experimentally test dating photo advice. I take photos of people under different conditions, changing one variable while keeping the others constant. For example, I might take a control photo at 85mm focal length, f/1.4 aperture and no smile. Then I take photos for the different experimental conditions: I change the camera's aperture or focal length, or ask the person to smile. I then upload the photos to Photofeeler, a voting site where users of the opposite gender give attractiveness ratings to photos.

These ratings get weighted to account for each voter's voting style and transformed into an attractiveness score from 0 to 10, which, as I understand it, corresponds to the photo's attractiveness percentile.

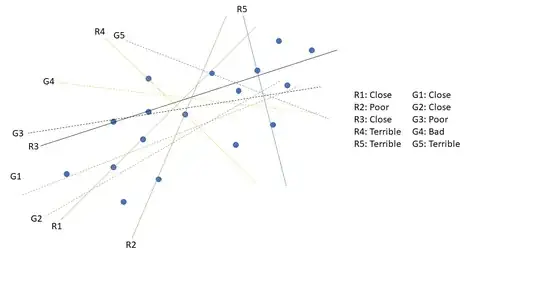

So in the end my data looks like this (made-up data):

How should I analyze:

- Whether the experimental condition has an effect or not?

- How strong are the effects compared to each other?

Some concerns I have:

- The same subjects are tested repeatedly. Would it be a good idea to use a paired t-test here, comparing the control score column with one of the experimental columns?

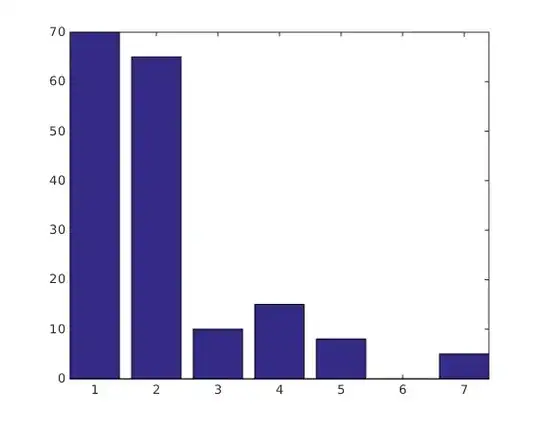

- The t-test assumes normally distributed data. However, this doesn't seem to apply to Photofeeler ratings: the percentiles are uniformly distributed, so there are as many photos between 5.0 and 6.0 as between 8.5 and 9.5. How should I correct for that?

- The attractiveness score is bounded between 0 and 10. How does this affect the analysis? Intuitively it seems like a smile could raise a 5.0 photo to a 6.0, but a 9.5 photo could only go to 9.6. How do I account for this nonlinearity? Would transforming the scores to logit scores, ln(score/(10-score)), help?

- Which measure can I use when reporting the effect size of the various conditions? The mean score difference is deceptive because of the above nonlinearity. The mean difference in logit values is unintuitive and hard to understand.
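To make the logit idea concrete, here is a minimal Python sketch of what I have in mind. It is not a claim that this is the right analysis. It transforms each score with ln(score/(10-score)), computes a paired t statistic on the transformed differences, and then translates the mean logit shift back into "what a 5.0 photo would become" as one possible way to report the effect. The function names (`logit`, `inv_logit`, `paired_logit_summary`) and the scores are made up for illustration, and the sketch assumes no score is exactly 0 or 10, since the logit is undefined there.

```python
import math
import statistics


def logit(score, top=10.0):
    """Map a score in (0, top) to the log-odds scale: ln(score / (top - score))."""
    return math.log(score / (top - score))


def inv_logit(x, top=10.0):
    """Map a log-odds value back to the 0..top score scale."""
    return top / (1.0 + math.exp(-x))


def paired_logit_summary(control, treatment):
    """Paired t statistic on logit-transformed scores, plus the mean logit shift."""
    # One difference per subject: treatment logit minus control logit.
    diffs = [logit(t) - logit(c) for c, t in zip(control, treatment)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return {"n": n, "mean_logit_diff": mean_diff, "t": t_stat, "df": n - 1}


# Made-up scores, one pair per subject (hypothetical data, not real ratings).
control = [5.0, 6.2, 7.8, 4.1, 8.9, 3.5]
smile = [5.9, 6.8, 8.1, 5.0, 9.1, 4.6]

summary = paired_logit_summary(control, smile)
# Report the effect as the score a 5.0 control photo would move to.
summary["score_at_5"] = inv_logit(logit(5.0) + summary["mean_logit_diff"])
print(summary)
```

The two-sided p-value would then come from the t distribution with `df` degrees of freedom; in Excel I believe that is `T.DIST.2T(ABS(t), df)`, or `T.TEST` with type 1 (paired) applied directly to the two transformed columns.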

My goal here is to make an Excel sheet into which I can paste the scores and have it spit out a p-value and some effect size measure. I then plan to post the results on my blog and maybe make a YouTube video. Any help would be appreciated and properly attributed.