The short version: I would like to know what the confusion matrices (numbers of true positives, false positives, true negatives, and false negatives) should be to achieve conditional use accuracy equality between two communities, one with 40% real positives and one with 60% real positives.

Here is the long version...

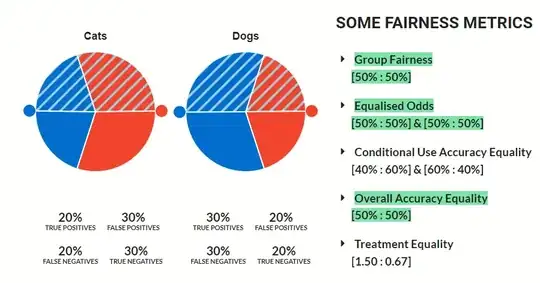

I am trying to understand the different fairness metrics described in Understanding Fairness. It contains an interactive pair of pie charts representing two communities' confusion matrices. Blue portions represent real positives (RP); striped portions represent predicted positives (PP). To the right of the charts is a list of fairness metrics, showing how well the proportions in the pie charts satisfy each one.

Here is the original configuration:

The small red and blue circles are handles for adjusting the sizes of regions.

As shown in green, these fairness criteria are achieved:

- Group fairness

- Equalized Odds

- Overall Accuracy Equality

I have been unable to come up with adjustments that satisfy Conditional Use Accuracy Equality. As described in the document, the two communities should have the same:

- positive predictive value (PPV) or precision, i.e. TP / Predicted Positives = TP / (TP + FP), and

- negative predictive value (NPV), i.e. TN / Predicted Negatives = TN / (TN + FN)
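
To make the two definitions concrete, here is a minimal Python sketch of the check. The class name, the function name, and the counts are all hypothetical: the counts are made up per 100 people to match the 40% and 60% base rates, and they are not the values from the interactive charts.

```python
import math
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts (or proportions) for one community. Hypothetical helper."""
    tp: float  # true positives
    fp: float  # false positives
    tn: float  # true negatives
    fn: float  # false negatives

    def base_rate(self) -> float:
        # Share of real positives: (TP + FN) / total
        return (self.tp + self.fn) / (self.tp + self.fp + self.tn + self.fn)

    def ppv(self) -> float:
        # Positive predictive value: TP / predicted positives
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # Negative predictive value: TN / predicted negatives
        return self.tn / (self.tn + self.fn)


def conditional_use_accuracy_equal(a: ConfusionMatrix,
                                   b: ConfusionMatrix,
                                   tol: float = 1e-9) -> bool:
    """True if both PPV and NPV match between the two communities."""
    return (math.isclose(a.ppv(), b.ppv(), abs_tol=tol)
            and math.isclose(a.npv(), b.npv(), abs_tol=tol))


# Made-up counts per 100 people, chosen only to match the base rates.
community_a = ConfusionMatrix(tp=30, fp=20, tn=40, fn=10)  # 40% real positives
community_b = ConfusionMatrix(tp=45, fp=5, tn=35, fn=15)   # 60% real positives

for name, cm in (("A", community_a), ("B", community_b)):
    print(name, cm.base_rate(), cm.ppv(), cm.npv())
print(conditional_use_accuracy_equal(community_a, community_b))
```

With these particular counts the PPVs and NPVs differ between the communities, so the check fails, which is the situation I keep ending up in.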

Can Conditional Use Accuracy Equality be obtained in this scenario?