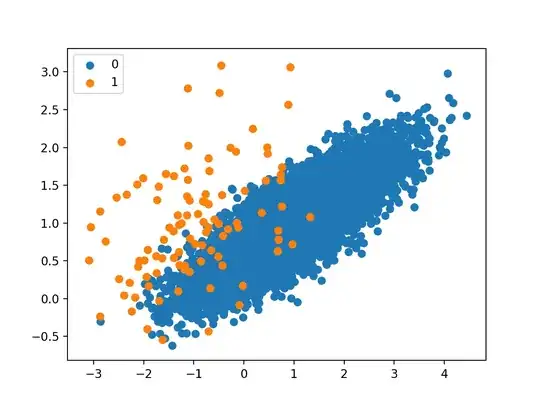

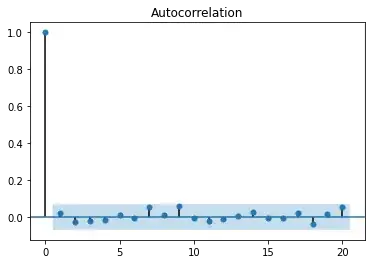

More of a curiosity, but I'm currently learning how to deal with imbalanced datasets and came across the SMOTE method to bias the minority class. The images below show before and after SMOTE was applied to the minority class (images taken from here: https://machinelearningmastery.com/smote-oversampling-for-imbalanced-classification/).

The idea seems to be that the algorithm tries to fill in the area/volume that the data occupies, but the way the algorithm works, it can only fill between the existing points.

My question is, would it be at all beneficial to oversample in an iterative manner using SMOTE? Where the subsequent iterations are applied to the original data and generated data, which would allow the algorithm to fill between purely generated data. Would this lead to strange unwanted biases? Or would it simply have no effect over the usual way to apply SMOTE?