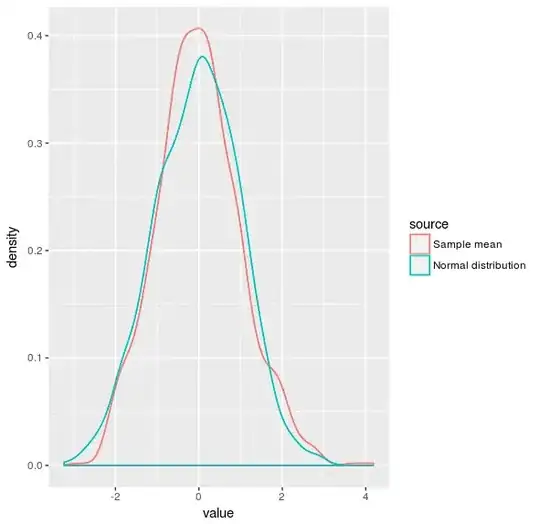

I've been experimenting with the effect that different values of k have on the generalisation error of kNN classifier, and I've gotten some unexpected results towards the end when k approaches the size of the dataset.

The result that I get is the following:

How experiment was run:

The dataset is based on sklearn's make_circles method, set up with the following parameters n_samples=500, factor=0.5 and noise=0.3.

The generalisation error was calculated as follows:

For each k in k = np.linspace(1, train_size - 1, 100)

{

generate data

`train_test_split` with `test_size=0.2`

fit model

predict model

calculate error

} repeat 100 times and get average error

My interpretation:

For k up 150 I'm happy with the results. For low values of k, the total error is dominated by variance, for higher values of k, the total error is dominated by bias. So we get the classic u-shaped plot.

As k gets larger, the error rate converges to 50%. This also makes sense, since as you increase the value of k, you start considering a large enough portion of your dataset such that your predictor will just give you the class that appears more frequently in the training set. Since the classes are split equally, and it's a binary classification problem, we expect our model to get the right answer 50% of the time.

What I don't understand is why there seems to be an increase in the error rate for values of k> 370. I would have expected it to stay as is, getting right 50% of the time? Does anyone know why this would be the case?

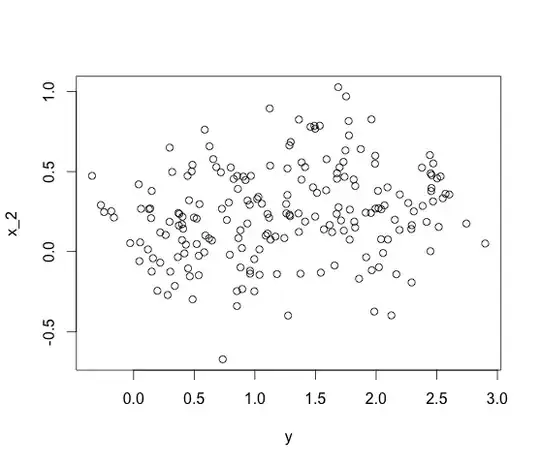

I've tried running the experiment multiple times, and have also looked at the range form 370 to 399 more closely. Here is a plot: