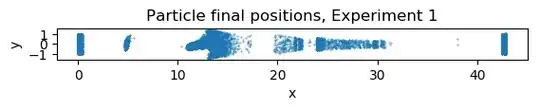

I have an experiment that shoots particles at a wall. Some parts of the wall are hit with a higher probability than others. I can record where the particles land. I need to know the underlying probability density function (PDF), or more precisely, its peaks. The typical sort of data I am dealing with is given at the bottom of this post.

I have read that kernel density estimation (KDE) is the best way to do this. However, I am struggling to find a Python package that meets all my requirements. As the attached graphs show, the data can be highly skewed, i.e. the x-scale can be very different from the y-scale. I therefore thought it was important to use a D-class kernel (a diagonal bandwidth matrix). The `KDEMultivariate` class in statsmodels is the only public Python routine I am aware of that uses a D-class kernel. However, it does not provide an option to weight the data, and I would like to weight each data point by the energy of its particle.
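For reference, a weighted KDE with a diagonal (D-class) bandwidth is short enough to write directly in NumPy. The sketch below is my own illustration, not part of any package: `weighted_diag_kde` and `grid_peak` are hypothetical helper names, the per-axis bandwidths default to a weighted Scott's rule (an assumption; a cross-validated bandwidth may do better near the peak), and the demo data are synthetic, not the data from this post.

```python
import numpy as np


def weighted_diag_kde(data, weights, bandwidth=None):
    """Weighted Gaussian KDE with a diagonal (D-class) bandwidth matrix.

    data: (n, d) array of landing positions; weights: (n,) non-negative
    weights (e.g. particle energies). If bandwidth is None, each axis gets
    its own weighted Scott's-rule bandwidth h_j = sigma_j * n_eff**(-1/(d+4)).
    Returns a function that evaluates the normalised density at (m, d) points.
    """
    data = np.asarray(data, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                              # normalise weights to sum to 1
    n, d = data.shape
    if bandwidth is None:
        n_eff = 1.0 / np.sum(w ** 2)             # Kish effective sample size
        mean = w @ data                          # weighted mean per axis
        sigma = np.sqrt(w @ (data - mean) ** 2)  # weighted std per axis
        bandwidth = sigma * n_eff ** (-1.0 / (d + 4))
    h = np.asarray(bandwidth, dtype=float)
    norm = 1.0 / (np.prod(h) * (2.0 * np.pi) ** (d / 2.0))

    def pdf(points, chunk=64):
        points = np.atleast_2d(np.asarray(points, dtype=float))
        out = np.empty(len(points))
        # Evaluate in chunks so memory stays at about chunk * n * d floats
        for start in range(0, len(points), chunk):
            p = points[start:start + chunk]
            z = (p[:, None, :] - data[None, :, :]) / h  # separate scaling per axis
            k = np.exp(-0.5 * np.sum(z ** 2, axis=-1))  # product of 1-D Gaussians
            out[start:start + chunk] = norm * (k @ w)
        return out

    return pdf


def grid_peak(pdf, xlim, ylim, num=100):
    """Evaluate a 2-D pdf on a regular grid and return (x, y, value) at its maximum."""
    xs = np.linspace(*xlim, num)
    ys = np.linspace(*ylim, num)
    X, Y = np.meshgrid(xs, ys)
    Z = pdf(np.column_stack([X.ravel(), Y.ravel()])).reshape(X.shape)
    i = np.unravel_index(np.argmax(Z), Z.shape)
    return X[i], Y[i], Z[i]


if __name__ == "__main__":
    # Illustrative synthetic data only: x and y on very different scales
    rng = np.random.default_rng(0)
    pos = rng.normal([1.0, 100.0], [0.1, 10.0], size=(2000, 2))
    energy = rng.exponential(1.0, size=2000)
    pdf = weighted_diag_kde(pos, energy)
    print(grid_peak(pdf, (0.5, 1.5), (50.0, 150.0)))
```

Evaluation costs one kernel per (grid point, particle) pair, so with about $10^5$ particles a coarse grid followed by a finer grid around the maximum keeps the run time manageable.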

Do you know of any good routines for my problem? Do you have any advice on estimating a probability density function for the data below as accurately as possible? I am most familiar with Python.

Edit: here is my response to some of the comments:

I need to weight by energy to calculate the energy flux density. Here we are looking at approximately $10^5$ particles. I will then use this to infer the flux density when $10^{20}$ particles are fired, which will tell me whether the walls can handle the load. Hence, I only need to know the peak of the probability density function.
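A minimal sketch of the scaling step, assuming every fired particle lands on the wall and the $10^5$ recorded particles are representative of the full shot. If $\hat f_E(\mathbf{x}) = \sum_i E_i K_H(\mathbf{x}-\mathbf{x}_i) / \sum_i E_i$ is the energy-weighted, normalised density (units of 1/area), then the $n$ recorded particles deposit $\sum_i E_i K_H(\mathbf{x}-\mathbf{x}_i) = n\bar{E}\,\hat f_E(\mathbf{x})$ per unit area, where $\bar{E}$ is their mean energy, so for $N$ fired particles the estimate becomes $N\bar{E}\,\hat f_E(\mathbf{x})$. The function name `scale_peak_energy_density` is hypothetical.

```python
import numpy as np


def scale_peak_energy_density(f_e_peak, energies, n_fired):
    """Scale the peak of an energy-weighted, normalised KDE to a full shot.

    f_e_peak : peak value of sum_i E_i K_H(x - x_i) / sum_i E_i (units: 1/area)
    energies : energies E_i of the recorded sample of particles
    n_fired  : number of particles in the full shot (e.g. 1e20)
    Returns energy per unit area; assumes every fired particle hits the wall
    and the recorded sample is representative of the full shot.
    """
    mean_energy = np.mean(energies)
    return n_fired * mean_energy * f_e_peak


# Example with made-up numbers: peak density 0.5 per unit area, mean energy 2
print(scale_peak_energy_density(0.5, np.array([1.0, 2.0, 3.0]), 1e20))
```

The result is energy per unit area for the whole shot; dividing by the firing duration would give energy per unit area per unit time.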

The D-class kernel is explained in this Wikipedia article. It basically means that the bandwidth in the x-direction can differ from that in the y-direction (the bandwidth matrix is diagonal).
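In formula form, a weighted Gaussian KDE with a D-class bandwidth matrix in two dimensions can be written as follows (a standard form, shown here with weights $w_i$, which would be the particle energies $E_i$ in my case):

$$
\hat f(\mathbf{x}) = \frac{\sum_{i=1}^{n} w_i\, K_H(\mathbf{x}-\mathbf{x}_i)}{\sum_{i=1}^{n} w_i},
\qquad
H = \begin{pmatrix} h_x^2 & 0 \\ 0 & h_y^2 \end{pmatrix},
$$

$$
K_H(\mathbf{u}) = \frac{1}{2\pi\, h_x h_y}\exp\!\left(-\frac{u_x^2}{2h_x^2}-\frac{u_y^2}{2h_y^2}\right).
$$

A full bandwidth matrix would also allow off-diagonal terms (kernels tilted relative to the axes), while the scalar class $H = h^2 I$ forces $h_x = h_y$.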

I need the PDF to be as accurate as possible, especially around the peak. If you think a simple Gaussian kernel will be fine, then I guess I will use that.
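If a plain Gaussian kernel turns out to be acceptable, `scipy.stats.gaussian_kde` accepts a `weights` argument (SciPy 1.2 and later). Its kernel covariance is the weighted data covariance multiplied by the squared bandwidth factor, so it already stretches the kernel differently along x and y, although the bandwidth matrix is then tied to the data covariance (and may have off-diagonal terms) rather than chosen independently per axis. The sketch below, using a hypothetical helper `kde_peak`, evaluates the energy-weighted KDE on a grid and refines the grid maximum with `scipy.optimize.minimize`; the grid size and the Nelder-Mead refinement are my own choices, not requirements.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import gaussian_kde


def kde_peak(positions, energies, num=100):
    """Energy-weighted Gaussian KDE and the location/value of its highest peak.

    positions: (n, 2) landing positions; energies: (n,) weights.
    The grid maximum is used as a starting point and refined with Nelder-Mead.
    """
    kde = gaussian_kde(positions.T, weights=energies)  # scipy expects shape (d, n)
    lo, hi = positions.min(axis=0), positions.max(axis=0)
    xs = np.linspace(lo[0], hi[0], num)
    ys = np.linspace(lo[1], hi[1], num)
    X, Y = np.meshgrid(xs, ys)
    Z = kde(np.vstack([X.ravel(), Y.ravel()])).reshape(X.shape)
    i = np.unravel_index(np.argmax(Z), Z.shape)
    start = np.array([X[i], Y[i]])
    # Refine the grid maximum by minimising the negative density
    res = minimize(lambda p: -kde(p.reshape(2, 1))[0], start, method="Nelder-Mead")
    return res.x, -res.fun


if __name__ == "__main__":
    # Illustrative synthetic data only, not the data from this post
    rng = np.random.default_rng(0)
    pos = rng.normal([1.0, 100.0], [0.1, 10.0], size=(2000, 2))
    energy = rng.exponential(1.0, size=2000)
    print(kde_peak(pos, energy, num=60))
```

Because the grid maximum is the starting point, the refined peak value is never lower than the best grid value.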