Context: I have a dataset containing instances labeled into different classes, and for all the classes, I have the same set of features. My research question is to identify classes that are more similar to each other.

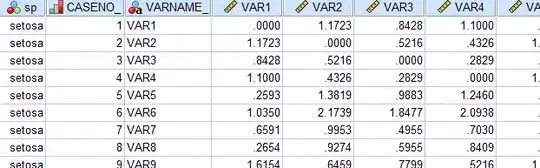

My initial thought was to compare these classes by estimating their pairwise similarity, that is, the similarity matrix between all the classes considered, as below:

Similarity matrix for classes A, B, C, D:

|   | A   | B   | C   | D   |
|---|-----|-----|-----|-----|
| A | 1.0 | 0.3 | 0.7 | 0.8 |
| B | 0.3 | 1.0 | 0.2 | 0.4 |
| C | 0.7 | 0.2 | 1.0 | 0.9 |
| D | 0.8 | 0.4 | 0.9 | 1.0 |

Example:

For simplicity, consider the iris dataset. My goal is to find out whether iris setosa is more similar to iris virginica or to iris versicolor.

I want to compute the similarity for each possible pair (a,b) for a,b in (setosa, virginica, and versicolor).

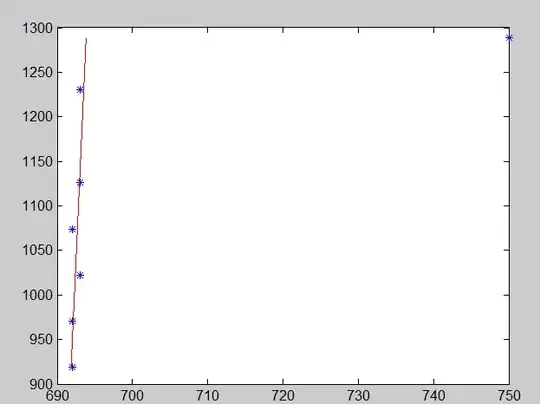

Assume that I have scaled all the features to the range 0 to 1 using the whole dataset. Only after scaling did I separate the labeled iris instances into 3 subsets (X_setosa, X_virginica, X_versicolor) according to their classes. Then I computed 3 sets of principal components (PC_setosa, PC_virginica, and PC_versicolor), one for each subset s, as below:

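    from sklearn.decomposition import PCA

    pca_s = PCA(n_components=2)
    pca_s.fit(X_s)            # X_s holds only the rows of class s
    PC_s = pca_s.components_  # eigenvectors, shape (2, n_features)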
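For concreteness, here is a minimal sketch of the whole setup on iris. It assumes scikit-learn's bundled copy of the dataset and `MinMaxScaler` for the 0-to-1 scaling; the dictionary name `components` is only illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import MinMaxScaler

iris = load_iris()

# Scale every feature to [0, 1] on the full dataset, before splitting by class.
X = MinMaxScaler().fit_transform(iris.data)

# Fit one 2-component PCA per class and keep its eigenvectors.
components = {}
for label, name in enumerate(iris.target_names):
    X_s = X[iris.target == label]          # rows belonging to this class only
    pca_s = PCA(n_components=2).fit(X_s)
    components[str(name)] = pca_s.components_  # shape (2, 4)

for name, pcs in components.items():
    print(name, pcs.shape)
```

Each entry of `components` holds two unit-length eigenvectors (one per row) expressed in the original four-feature space.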

My questions are:

- Does the idea of comparing the PCs (eigenvectors) as a proxy for class similarity make sense?

- How could I compare the PC structures using cosine similarity? After some googling, I still don't know whether it's better to compare the loadings or the eigenvectors (PCs).
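To make the second question concrete, this is roughly the kind of comparison I have in mind, as a sketch only. It assumes that comparing one component at a time (here the first PC of each class) is meaningful, and it takes the absolute value of the cosine because the sign of an eigenvector is arbitrary. The helper name `class_pc_similarity` is hypothetical, not a library function.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import MinMaxScaler


def class_pc_similarity(X, y, component=0):
    """Absolute cosine similarity between one PC of each class (illustrative helper)."""
    labels = np.unique(y)
    # One eigenvector per class: row `component` of that class's components_.
    vecs = np.array([
        PCA(n_components=component + 1).fit(X[y == k]).components_[component]
        for k in labels
    ])
    # Rows of components_ have unit length, so the dot product is the cosine.
    # abs() removes the arbitrary sign of each eigenvector.
    return labels, np.abs(vecs @ vecs.T)


iris = load_iris()
X = MinMaxScaler().fit_transform(iris.data)  # scale on the full dataset first
labels, S = class_pc_similarity(X, iris.target)

print(iris.target_names[labels])
print(np.round(S, 2))
```

The result `S` is a symmetric 3x3 matrix with ones on the diagonal, in the same layout as the A, B, C, D example above. It uses only a single component per class, which is a simplification I am not sure is justified.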