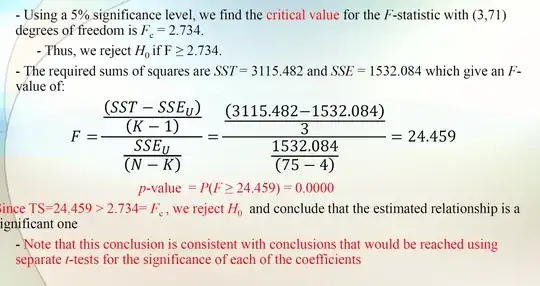

The gist is that the $F$-test asks whether one model is better than another. If the larger model is better, then you deem “significant” the parameters that are in the larger model but not in the smaller model. A two-sided test would also count evidence that the larger model is worse than the smaller model, which makes no sense from the standpoint of trying to show that a predictor impacts the response variable.

In a bit more detail, the $F$-test compares two models on the squared differences between predicted values and observed values (square loss), mathematically expressed as $(y_i - \hat y_i)^2$ and summed over the observations. The models are nested, meaning that the larger one has all of the parameters that the smaller one has, plus some extra ones (maybe just one, maybe a bunch). The test then asks if the reduction in square loss is enough to convince us that the additional parameters are not all zero (reject the null hypothesis). If those parameters are not zero, then the variables to which they correspond can be considered contributors to the values of $y$.
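To make the comparison concrete, here is a minimal sketch of the nested-model test, assuming ordinary least squares and simulated data. Writing $RSS_s$ and $RSS_l$ for the summed square loss of the smaller and larger models, with $p_s$ and $p_l$ parameters and $n$ observations, the statistic is $F = \dfrac{(RSS_s - RSS_l)/(p_l - p_s)}{RSS_l/(n - p_l)}$. The function name `nested_f_test`, the variable names, and the simulated coefficients are all made up for illustration.

```python
import numpy as np
from scipy import stats


def nested_f_test(y, X_small, X_large):
    """Compare two nested OLS fits; return (F statistic, one-sided p-value)."""

    def rss(X):
        # Least-squares fit, then the summed square loss of that fit.
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)
        resid = y - X @ beta
        return float(resid @ resid)

    n = len(y)
    p_small, p_large = X_small.shape[1], X_large.shape[1]
    rss_small, rss_large = rss(X_small), rss(X_large)

    df_num = p_large - p_small   # number of extra parameters
    df_den = n - p_large         # residual degrees of freedom, larger model
    f_stat = ((rss_small - rss_large) / df_num) / (rss_large / df_den)

    # Only the upper tail counts: a large F means a large reduction in loss.
    p_value = stats.f.sf(f_stat, df_num, df_den)
    return f_stat, p_value


# Simulated data (an assumption for illustration): x2 truly affects y.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 + 0.5 * x2 + rng.normal(size=n)

X_small = np.column_stack([np.ones(n), x1])        # intercept + x1
X_large = np.column_stack([np.ones(n), x1, x2])    # adds x2

f_stat, p_value = nested_f_test(y, X_small, X_large)
print(f"F = {f_stat:.2f}, one-sided p = {p_value:.4g}")
```

The p-value comes from `stats.f.sf`, the upper-tail probability, because only a large reduction in square loss counts as evidence that the extra parameters are non-zero.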

This is why we only care about the one-sided test. If the evidence is that the larger model provides a worse fit than the smaller model, then that does not help us show that the additional parameters are non-zero.

EDIT

A low $F$-stat does suggest a difference in variance, just not in a way that is useful for testing in regression. It suggests that the variance of the error term in the larger model is greater than the variance of the error term in the smaller model;$^{\dagger}$ this is not compelling evidence that a feature influences $y$ and is therefore "significant". If you instead use an $F$-test to compare the variances of two distributions (rather than their means, as a $t$-test does), then you might care if cats have a lower variance than dogs just as much as if dogs have a lower variance than cats.
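For contrast, here is a rough sketch of the variance-comparison setting, where both tails matter. It assumes two independent, normally distributed samples. The "cats" and "dogs" numbers are simulated, and `variance_ratio_f_test` is a hypothetical helper name, not a library function.

```python
import numpy as np
from scipy import stats


def variance_ratio_f_test(a, b):
    """Two-sided F-test for equal variances; return (F statistic, p-value)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)

    # Ratio of the sample variances (ddof=1 gives the unbiased estimates).
    f_stat = np.var(a, ddof=1) / np.var(b, ddof=1)
    df_a, df_b = len(a) - 1, len(b) - 1

    # Either tail is interesting here, so double the smaller tail area.
    lower = stats.f.cdf(f_stat, df_a, df_b)
    upper = stats.f.sf(f_stat, df_a, df_b)
    p_two_sided = min(1.0, 2.0 * min(lower, upper))
    return f_stat, p_two_sided


# Simulated weights (an assumption for illustration): cats vary less than dogs.
rng = np.random.default_rng(1)
cats = rng.normal(loc=4.0, scale=0.5, size=40)
dogs = rng.normal(loc=20.0, scale=1.5, size=40)

f_cd, p_cd = variance_ratio_f_test(cats, dogs)   # ratio below 1
f_dc, p_dc = variance_ratio_f_test(dogs, cats)   # ratio above 1
print(f"cats/dogs: F = {f_cd:.3f}, p = {p_cd:.3g}")
print(f"dogs/cats: F = {f_dc:.3f}, p = {p_dc:.3g}")
```

Swapping the two samples inverts the statistic but leaves the two-sided p-value unchanged, which is the symmetry that the regression use of the $F$-test lacks.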

$^{\dagger}$This is a bizarre occurrence. Except under specific circumstances, a larger model has an error term variance that is smaller than, or at least equal to, that of the smaller model nested within it. The only time I have heard of this happening is when there is extreme multicollinearity, such as when polynomial terms are included and wind up being highly collinear.