Let $X$ be a binomial RV with parameters $(n,p)$. I am interested in the ratio

$$\boxed{R=\frac{\mathrm{var}[f(X)]}{\mu[f(X)]\,(1-\mu[f(X)])}}$$

where $\mu[f(X)]$ denotes the mean of $f(X)$.

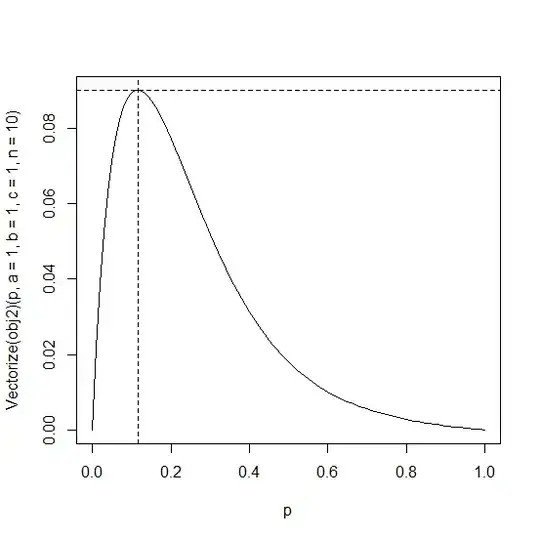

In my case, $f(X)$ is $e^{-\left(\frac{a}{bX+c}\right)}$. The constants $a,b,c$ can take values such that $0 \lt a,b,c \leq 10$.

My aim is to find the value of $p$ that maximizes $R$, expressed in terms of $a,b,c$ and $n$. Can someone suggest a simplification of $R$ so that the optimal value of $p$ can be computed analytically?
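To have a numerical baseline for any analytic answer, $R$ can be evaluated exactly by summing over the binomial pmf and then maximized over a grid of $p$ values. The following is only a minimal sketch: the function names (`ratio_R`, `argmax_p`) and the grid size are illustrative assumptions, not part of the problem statement.

```python
import math

def f(x, a, b, c):
    # f(x) = exp(-a / (b*x + c)); takes values in (0, 1) for a, b, c > 0 and x >= 0
    return math.exp(-a / (b * x + c))

def binom_pmf(k, n, p):
    # P(X = k) for X ~ Binomial(n, p)
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def exact_moments(n, p, a, b, c):
    # Exact mean and variance of f(X), by direct summation over k = 0..n
    mean = sum(binom_pmf(k, n, p) * f(k, a, b, c) for k in range(n + 1))
    second = sum(binom_pmf(k, n, p) * f(k, a, b, c) ** 2 for k in range(n + 1))
    return mean, second - mean**2

def ratio_R(n, p, a, b, c):
    # R = var[f(X)] / (mu[f(X)] * (1 - mu[f(X)]))
    mean, var = exact_moments(n, p, a, b, c)
    return var / (mean * (1 - mean))

def argmax_p(n, a, b, c, grid=999):
    # Brute-force search over an evenly spaced grid of p in (0, 1).
    # The grid resolution is an arbitrary choice for illustration.
    ps = [(i + 1) / (grid + 1) for i in range(grid)]
    return max(ps, key=lambda p: ratio_R(n, p, a, b, c))

if __name__ == "__main__":
    n, a, b, c = 10, 2.0, 1.0, 1.0
    p_star = argmax_p(n, a, b, c)
    print(p_star, ratio_R(n, p_star, a, b, c))
```

Since $0 < f(X) < 1$, $R$ always lies in $[0,1)$ and vanishes at $p=0$ and $p=1$, so the maximizer is an interior point.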

My attempt: I was unable to compute the mean and variance of $f(X)$ exactly because of the non-linear form of $f(X)=e^{-\left(\frac{a}{bX+c}\right)}$. It is not obvious how to differentiate $\mu[f(X)]$ and $\mathrm{var}[f(X)]$ with respect to $p$ in order to optimize $R$ for this non-linear $f(X)$. Hence, I thought of using an approximation based on the Taylor series, known as the delta method (see this and this for reference).

The approximation to the mean, $\mathrm{E}[f(X)] \approx f\left(\mu_{X}\right)+\frac{f^{\prime \prime}\left(\mu_{X}\right)}{2} \sigma_{X}^{2}$, worked well when I checked it numerically.
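For reference, the derivatives of $f$ that enter these Taylor-based approximations can be written out explicitly. This is a sketch of the calculation with the shorthand $u = bx + c$ (the symbol $u$ is introduced here only for convenience), assuming $u > 0$:

$$f(x) = e^{-a/u}, \qquad f'(x) = \frac{ab}{u^{2}}\, e^{-a/u}, \qquad f''(x) = \left(\frac{a^{2}b^{2}}{u^{4}} - \frac{2ab^{2}}{u^{3}}\right) e^{-a/u}.$$

For the binomial, these are evaluated at $\mu_X = np$ with $\sigma_X^2 = np(1-p)$.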

However, the approximation for the variance given by $\mathrm{Var}[f(X)] \approx f^{\prime}(\mu)^{2} \cdot \sigma^{2}+\frac{f^{\prime \prime}(\mu)^{2}}{2} \cdot \sigma^{4}$ is far from the true values in my numerical simulations. As suggested by jbowman, the variance approximation I am using seems to be the one that holds for a Gaussian $X$ (zero third central moment and fourth central moment $3\sigma^4$), which the binomial is not in general. A better approximation looks very complicated to me, given that I ultimately want to optimize $p$ to maximize $R$.
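The comparison between the approximations and the exact values can be reproduced with a short script. The sketch below is illustrative only: the function name `compare` and the dictionary keys are assumptions. Alongside the Gaussian-form variance, it also evaluates the variance of the second-order Taylor polynomial of $f$ using the binomial's own central moments $\mu_3$ and $\mu_4$, namely $f'^2\sigma^2 + f'f''\mu_3 + \tfrac{f''^2}{4}(\mu_4-\sigma^4)$, which reduces to the Gaussian form when $\mu_3=0$ and $\mu_4=3\sigma^4$.

```python
import math

def f(x, a, b, c):
    return math.exp(-a / (b * x + c))

def f1(x, a, b, c):
    # First derivative: f'(x) = a*b/u^2 * f(x), with u = b*x + c
    u = b * x + c
    return a * b / u**2 * f(x, a, b, c)

def f2(x, a, b, c):
    # Second derivative: f''(x) = (a^2 b^2 / u^4 - 2 a b^2 / u^3) * f(x)
    u = b * x + c
    return (a**2 * b**2 / u**4 - 2 * a * b**2 / u**3) * f(x, a, b, c)

def compare(n, p, a, b, c):
    ks = range(n + 1)
    w = [math.comb(n, k) * p**k * (1 - p)**(n - k) for k in ks]
    mu = n * p
    # Central moments of X, computed numerically from the pmf
    m2 = sum(wk * (k - mu) ** 2 for wk, k in zip(w, ks))
    m3 = sum(wk * (k - mu) ** 3 for wk, k in zip(w, ks))
    m4 = sum(wk * (k - mu) ** 4 for wk, k in zip(w, ks))
    # Exact mean and variance of f(X)
    exact_mean = sum(wk * f(k, a, b, c) for wk, k in zip(w, ks))
    exact_var = sum(wk * f(k, a, b, c) ** 2 for wk, k in zip(w, ks)) - exact_mean**2
    d1, d2 = f1(mu, a, b, c), f2(mu, a, b, c)
    return {
        "exact_mean": exact_mean,
        "mean_delta": f(mu, a, b, c) + 0.5 * d2 * m2,
        "exact_var": exact_var,
        "var_first_order": d1**2 * m2,
        "var_gaussian_form": d1**2 * m2 + 0.5 * d2**2 * m2**2,
        "var_quadratic": d1**2 * m2 + d1 * d2 * m3 + 0.25 * d2**2 * (m4 - m2**2),
    }

if __name__ == "__main__":
    for name, value in compare(n=10, p=0.2, a=5.0, b=1.0, c=1.0).items():
        print(f"{name:>18s}: {value:.6g}")
```

All of these expansions are local around $\mu_X = np$, so they can be expected to degrade when $f$ changes sharply over the range where $X$ has appreciable mass, for example for small $n$ or when $c$ is small relative to $a$.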

It would be great if someone could come up with an approximate expression for the optimal $p$ in terms of $a,b,c,n$.

Other than the general case, I am also interested in the following scenarios:

- small values of $n$, large values of $n$

- $\frac{b}{c} \gg 1$

- $\frac{b}{c} \ll 1$

Please feel free to write an answer even if you can only solve these special cases.