Yes, polynomials are also problematic in interpolation, because of overfitting and high variability.

Here is an example. Assume your dependent variable $y$ is uniformly distributed on the interval $[0,1]$. You also have a "predictor" variable $x$, also uniformly distributed on the interval $[0,1]$. However, there is no relationship between the two. Thus, any regression of $y$ on any power of $x$ will be overfitting.

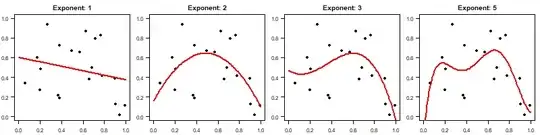

Now, assume we draw 20 data points from this data generating process and fit $y\sim x^n$ for $n=1, 2, 3, 5$. Here are the fits:

As you see, the fit gets "wigglier" for higher $n$.

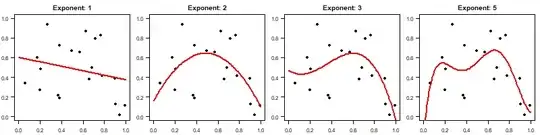

However, one key problem is that (of course) the fit will depend on the data we have randomly sampled from our data generating process. After all, we could have drawn 20 quite different pairs $(x,y)$. Let's repeat the exercise another three times, with new random samples each time. Below, the top row is the same as the previous plot, and the three other rows are just the fits based on new samples:

The main problem is visible when you compare the left column (linear fits) and the right column ($x^5$ fits): the fit for a lower order polynomial is much less variable and dependent on the randomness in our data sampling than the fit for the high order polynomial. If we want to interpolate $y$ for some $x$ even somewhere in the middle of the interval, using a higher order polynomial will yield a fit that is much more wobbly than a lower order polynomial.

R code:

nn <- 20

xx <- seq(0,1,by=.01)

png("sims_1.png",width=800,height=200)

opar <- par(mfrow=c(1,4),mai=c(.3,.3,.3,.1),las=1)

set.seed(1)

obs <- data.frame(x=runif(nn),y=runif(nn))

for ( exponent in c(1,2,3,5) ) {

model <- lm(y~poly(x,exponent),obs)

plot(obs$x,obs$y,pch=19,xlab="",ylab="",main=paste("Exponent:",exponent),xlim=c(0,1),ylim=c(0,1))

lines(xx,predict(model,newdata=data.frame(x=xx)),col="red",lwd=2)

}

dev.off()

png("sims_2.png",width=800,height=800)

opar <- par(mfrow=c(4,4),mai=c(.3,.3,.3,.1),las=1)

for ( jj in 1:4 ) {

set.seed(jj)

obs <- data.frame(x=runif(nn),y=runif(nn))

for ( exponent in c(1,2,3,5) ) {

model <- lm(y~poly(x,exponent),obs)

plot(obs$x,obs$y,pch=19,xlab="",ylab="",main=paste("Exponent:",exponent),xlim=c(0,1),ylim=c(0,1))

lines(xx,predict(model,newdata=data.frame(x=xx)),col="red",lwd=2)

}

}

par(opar)

dev.off()