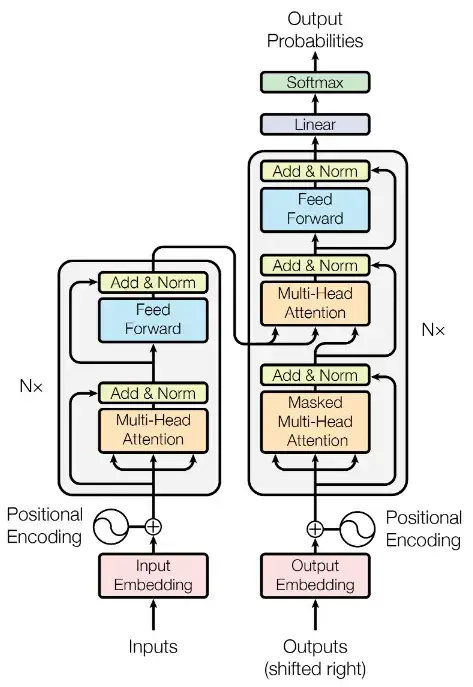

According to the paper *Attention Is All You Need*, each sublayer in every encoder and decoder layer is wrapped in a residual connection followed by layer normalization (labeled "Add & Norm" in the figure above).
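To make sure I have the mechanics right, here is a minimal sketch of how I read one "Add & Norm" step, i.e. `LayerNorm(x + Sublayer(x))` as the paper writes it. The function names, the `eps` value, and the toy sublayer are my own placeholders, and I have left out the learned gain and bias of layer normalization for brevity:

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize one token vector to zero mean and unit variance over its features.

    The learned scale and shift parameters are omitted in this sketch.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def add_and_norm(x, sublayer):
    """Compute LayerNorm(x + Sublayer(x)) for a single token vector x."""
    # "Add": the residual connection sums the sublayer's input and output.
    residual = [a + b for a, b in zip(x, sublayer(x))]
    # "Norm": layer normalization is applied to that sum.
    return layer_norm(residual)

def toy_sublayer(x):
    """Hypothetical stand-in for self-attention or the feed-forward network."""
    return [0.5 * v for v in x]

out = add_and_norm([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print(out)
```

So the output has the same shape as the input, and its features are re-centered and re-scaled after the input has been added back in.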

I more or less understand how the "Add & Norm" layers work, but I don't understand their purpose. Why are residual connections and layer normalization needed? What exactly do they do? Do they improve the model's performance, and if so, how?