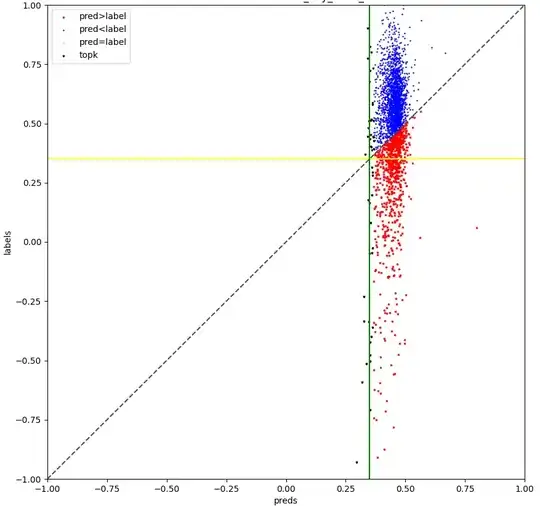

I'm using LightGBM for a regression task. My training data has shape (2000000, 1600), i.e. roughly 2 million training samples with 1600 features each. The figure above is a scatter plot of my trained model's predictions on part of the training data (about 3000 samples). The performance is not satisfying: the ground-truth values range from -1 to 1, but the predicted values cover a much narrower range. Generally speaking, this suggests the model is underfitting. How could I tune the parameters to fix this, or what should I do to figure out what is happening here? Any suggestions would be helpful.
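To put a number on the "very limited range" seen in the scatter plot, a small helper like the one below can compare the spread of the predictions with the spread of the targets on the same subsample. This is only a sketch: `compression_stats` is a hypothetical name, not part of LightGBM, and it is written in plain Python so it does not depend on any LightGBM version.

```python
import statistics


def compression_stats(y_true, y_pred):
    """Quantify how much narrower the predictions are than the targets."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    var_true = statistics.pvariance(y_true)
    if var_true == 0:
        raise ValueError("targets are constant; the slope is undefined")
    mean_true = statistics.fmean(y_true)
    mean_pred = statistics.fmean(y_pred)
    # Population covariance between targets and predictions
    cov = sum((t - mean_true) * (p - mean_pred)
              for t, p in zip(y_true, y_pred)) / len(y_true)
    return {
        "range_true": (min(y_true), max(y_true)),
        "range_pred": (min(y_pred), max(y_pred)),
        # Ratio of standard deviations: 1.0 means equal spread
        "std_ratio": statistics.pstdev(y_pred) / statistics.pstdev(y_true),
        # Least-squares slope of y_pred regressed on y_true
        "slope": cov / var_true,
    }
```

On the ~3000 plotted samples, a `std_ratio` and `slope` well below 1 would confirm the shrinkage toward the mean. One caveat: even a model that predicts the true conditional mean has a slope equal to its R², which is below 1 whenever the targets contain noise the features cannot explain, so comparing these numbers between training and held-out data is more informative than either value alone.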

Here are my parameters:

```python
params = {
    'metric': 'custom',
    'objective': 'regression',
    'boosting_type': 'gbdt',
    'first_metric_only': True,
    'task': 'train',
    'verbose': 1,
    'bagging_fraction': 0.7,
    'bagging_freq': 20,
    'feature_fraction': 0.8,
    'lambda_l1': 0,
    'lambda_l2': 0,
    'learning_rate': 0.01,
    'max_depth': 7,
    'num_leaves': 128,
    # LightGBM spells this 'min_child_samples' (alias of min_data_in_leaf);
    # the singular 'min_child_sample' is not recognized and would be ignored.
    'min_child_samples': 10000,
    'num_boost_round': 1000
}
```
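As a back-of-the-envelope check on how the minimum-samples-per-leaf value of 10000 interacts with bagging on about 2 million rows, the sketch below computes the most leaves a single tree could have. It assumes that each leaf must hold at least that many rows of the bagged subsample used in a given iteration; `leaf_budget` is a hypothetical helper, not a LightGBM function.

```python
def leaf_budget(n_rows, bagging_fraction, min_samples_per_leaf):
    """Return (bagged rows, max leaves per tree, min share of bagged rows per leaf)."""
    # Rows drawn by bagging in one iteration (assumed to be what leaf counts use)
    bagged_rows = round(n_rows * bagging_fraction)
    # Each leaf needs at least min_samples_per_leaf rows, so this caps the leaf count
    max_leaves = bagged_rows // min_samples_per_leaf
    # Smallest fraction of the bagged rows that any single leaf can cover
    min_share = min_samples_per_leaf / bagged_rows
    return bagged_rows, max_leaves, min_share


rows, leaves, share = leaf_budget(2_000_000, 0.7, 10_000)
print(rows, leaves, f"{share:.2%}")  # → 1400000 140 0.71%
```

With these numbers the leaf cap (140) sits just above `num_leaves=128`, so it does not limit tree size on its own, but every leaf's output is an average over at least about 0.7% of the bagged rows. If samples with targets near ±1 are spread thinly across feature space, such large leaves may average them together with many moderate targets; that is one possible contributor to the compressed predictions, a hypothesis to test (for example by lowering the limit) rather than a confirmed cause.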