I have a repeated measures experiment where all participants completed several trials for each condition. My dependent variables are response time and accuracy. I am using the Interquartile Range as my outlier removal criteria. Before I ask my questions, here are a few details on the IQR:

The IQR represents the central 50 percent or the area between the 75th and 25th percentile of a distribution. Any point is an outlier if it is above the 75th percentile or below the 25th percentile by a factor of 1.5 times the IQR.

Here, we can describe our dataset with:

- Minimum (lowest) value of the data

- Quartile 1 = Q1 = first quartile = 25% of the data starting from the minimum value

- Quartile 2 = median = Q2 = midpoint of the dataset

- Quartile 3 = Q3 = 75% of the data starting from the minimum value

- Maximum value of the data set

The Interquartile Range = IQR = Q3 – Q1 = how the data are spread about the median. We then use the IQR to find the lower and upper bounds of our exclusion criteria:

- lower: [Q1- (1.5)*IQR]

- upper: [Q3+(1.5)*IQR]

Note: the 1.5 is a scale value. When you do the math, we can see that anything beyond 2.7 sigmas from the mean would be considered an outlier and this is closest to the Gaussian Distribution where an outlier is is any value beyond 3 sigmas of either side of the mean.

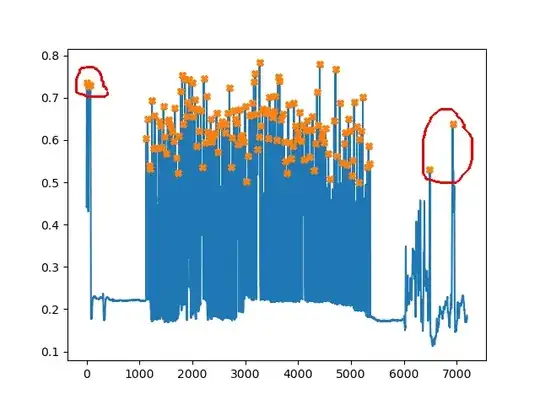

Here is a Wikipedia image (https://en.wikipedia.org/wiki/Interquartile_range):

My Questions:

Is it valid to remove trials as outliers using the IQR method? Is it more valid to (1) find the IQR of all subjects and then remove these trials or (2) to find the IQR of each subject and then remove each subject's respective outlier trials?

It seems more valid to remove trials rather than all of a single participants data because they may only have a few trials (of the many) that represent inattention (e.g., spaced out momentarily). And, these few trials might throw off their overall response time (or accuracy) measure.

Thanks for the input.