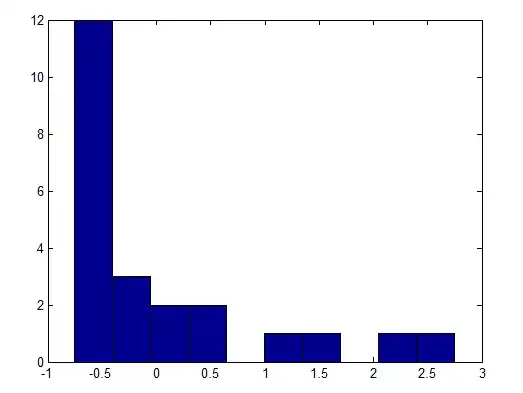

I recorded the length of 197 nursing care episodes. My normalised data $(x-\mu)/\sigma$ looks like this:

Kolmogorov-Smirnov gives me a p-value of 0.14. Can't be right surely?! Am I misinterpreting my results?

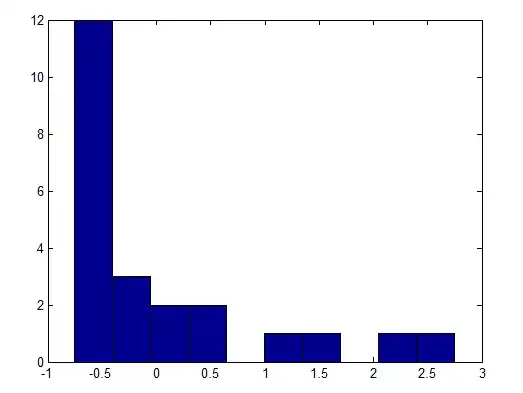

I think your issues are now clarified enough to construct a decent answer (with plenty of links explaining the issues).

There are several issues here:

1. K-S test with estimated parameters

The sample mean and s.d. ($\bar{x}$ and $s$ ) are not population parameters ($\mu$ and $\sigma$).

The calculation of the null-distribution (/critical values) of the Kolmogorov-Smirnov test is based on a fully specified distribution, not an estimated one -- the p-values aren't meaningful if you use parameter estimates. In particular, the p-values will tend to be larger than what you'd get if the conditions under which the test was derived held.
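A small simulation can make this concrete. The sketch below uses only the Python standard library; the function names, the replication count and the seed are illustrative choices rather than anything from the question. It draws genuinely normal samples of size 197, fits the mean and s.d. to each sample, and then applies the asymptotic Kolmogorov p-value as though those parameters had been known in advance. If the naive p-values were valid, roughly 5% of them would fall below 0.05.

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def ks_distance_fitted(sample):
    """K-S distance between a sample and the normal fitted to that same sample."""
    n = len(sample)
    fitted = NormalDist(fmean(sample), stdev(sample))
    d = 0.0
    for i, x in enumerate(sorted(sample), start=1):
        f = fitted.cdf(x)
        # Compare the fitted CDF with the empirical CDF just after and just before x.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def kolmogorov_pvalue(d, n, terms=100):
    """Asymptotic K-S p-value; it is derived for a fully specified null only."""
    t = math.sqrt(n) * d
    if t < 0.2:
        return 1.0  # the p-value is indistinguishable from 1 this close to zero
    series = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * t * t)
                 for k in range(1, terms + 1))
    return min(1.0, max(0.0, 2.0 * series))


def naive_rejection_rate(n=197, n_sim=1000, alpha=0.05, seed=1):
    """Share of truly normal samples rejected when estimated parameters are ignored."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sim):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if kolmogorov_pvalue(ks_distance_fitted(sample), n) < alpha:
            rejections += 1
    return rejections / n_sim


# Expected to come out well below the nominal 0.05, i.e. the p-values are too large.
print(naive_rejection_rate())
```

A rejection rate far below the nominal level under the null is the same thing as p-values that are systematically too large, which is why a value like 0.14 can appear for data that are visibly non-normal.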

When the parameters are estimated from the data, the Kolmogorov-Smirnov-type test for normality is called the Lilliefors test, which has its own tables of critical values.

So the Lilliefors test is the correct one to use if you want a K-S-style test for normality with estimated mean and standard deviation. You don't need the original paper to use it: you can simulate the null distribution (and to substantially better accuracy than Lilliefors was able to achieve in the 1960s).
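Simulating that null distribution takes only a few lines. The following is a minimal sketch using the Python standard library, not a reference implementation: the function names, the number of simulations, the seed and the made-up skewed sample are all assumptions for illustration. It relies on the fact that the statistic is unchanged by shifting or rescaling the data, so standard normal draws are enough to represent the null.

```python
import random
from statistics import NormalDist, fmean, stdev


def ks_distance_fitted(sample):
    """K-S distance between a sample and the normal fitted to that same sample."""
    n = len(sample)
    fitted = NormalDist(fmean(sample), stdev(sample))
    d = 0.0
    for i, x in enumerate(sorted(sample), start=1):
        f = fitted.cdf(x)
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def lilliefors_pvalue(sample, n_sim=1000, seed=0):
    """Monte Carlo p-value for normality with estimated mean and s.d."""
    n = len(sample)
    observed = ks_distance_fitted(sample)
    rng = random.Random(seed)
    at_least_as_large = 0
    for _ in range(n_sim):
        # Parameters are re-estimated on every simulated sample, exactly as on the data.
        simulated = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if ks_distance_fitted(simulated) >= observed:
            at_least_as_large += 1
    # The +1 terms keep the estimate away from an impossible p-value of exactly zero.
    return (at_least_as_large + 1) / (n_sim + 1)


# Made-up right-skewed data standing in for 197 episode lengths (not the asker's data).
data_rng = random.Random(42)
skewed = [data_rng.expovariate(1.0) for _ in range(197)]
print(lilliefors_pvalue(skewed))  # a small p-value is expected for data this skewed
```

More simulations give a finer p-value; with `n_sim` replications the smallest value this can report is `1 / (n_sim + 1)`.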

Though if testing normality is the aim, the Shapiro-Wilk or Shapiro-Francia tests are more typical and have better power; Anderson-Darling tests are also common (parameter estimation is an issue for the A-D test as well, but check the discussion of the issue in D'Agostino & Stephens' Goodness of Fit Techniques).

Also see How to test whether a sample of data fits the family of Gamma distribution?

However, having identified the issue with your p-value doesn't mean that a goodness-of-fit test addresses your original issue.

2. Using hypothesis testing of normality for procedures that assume it

You carry the idea that the appropriate action when dealing with an ANOVA-like situation is to formally test normality via some goodness of fit test and only on rejection consider a nonparametric test. I would say this is not generally an appropriate understanding.

First, the hypothesis test answers the wrong question; indeed a rejection gives an answer to a question you already know the answer to.

Is normality testing 'essentially useless'?

What tests do I use to confirm that residuals are normally distributed?

Is it reasonable to make some assessment of normality if one is considering using a procedure that relies on it? Certainly; a visual assessment - a diagnostic such as a Q-Q plot - shows you how non-normal your data appear and will let you see whether the extent and type of non-normality you have would be enough to make you concerned about the particular procedure you would be applying.

In this case your histogram would be enough to say 'don't assume that's normal', though ordinarily I wouldn't base such a decision only on a histogram.
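For the Q-Q plot mentioned above, the coordinates are easy to compute by hand. This is a rough sketch with the Python standard library; the function name, the plotting positions `(i - 0.5) / n` (one common convention among several) and the example numbers are assumptions, not anything taken from the question. Points that bend away from a straight line indicate the type of departure: for instance, a right-skewed sample curves upward at the high end.

```python
from statistics import NormalDist, fmean, stdev


def normal_qq_points(sample):
    """Return (theoretical quantile, standardised sample value) pairs for a normal Q-Q plot."""
    n = len(sample)
    mean, sd = fmean(sample), stdev(sample)
    standard_normal = NormalDist()
    points = []
    for i, x in enumerate(sorted(sample), start=1):
        # (i - 0.5) / n is one common choice of plotting position.
        theoretical = standard_normal.inv_cdf((i - 0.5) / n)
        points.append((theoretical, (x - mean) / sd))
    return points


# Made-up, right-skewed durations purely for illustration.
durations = [2, 3, 3, 4, 5, 5, 6, 8, 12, 25, 40]
for theoretical, observed in normal_qq_points(durations):
    print(f"{theoretical:6.2f}  {observed:6.2f}")
```

Feeding the pairs to any plotting tool gives the usual picture; the judgement about whether the curvature matters for the procedure at hand still has to be made by eye.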

Secondly, you can just do a Kruskal-Wallis without testing normality. It's valid when the data are normal; it's just somewhat less powerful than the usual ANOVA.

Only the fact that ANOVA is reasonably robust to mild non-normality makes it a reasonable choice in many circumstances. If I anticipated more than moderate skewness or kurtosis I'd avoid assuming normality (though Kruskal-Wallis is not the only option there).

Khan and Rayner (2003), "Robustness to Non-Normality of Common Tests for the Many-Sample Location Problem", Journal of Applied Mathematics and Decision Sciences, 7(4), 187-206, suggest that in situations of high kurtosis, when sample sizes are not very small, the Kruskal-Wallis is definitely preferred to the F-test* (when sample sizes are small they suggest avoiding the Kruskal-Wallis).

*(the comments apply to the Mann-Whitney vs t test when there are two samples)

You certainly don't need to show something isn't normal to apply Kruskal-Wallis.

There are other alternatives to the Kruskal Wallis that don't assume normality, such as resampling-based tests (randomization tests, bootstrap tests) and robustified versions of ANOVA-type tests.
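As an illustration of the resampling idea, here is a minimal randomization (permutation) test built on the ordinary one-way F statistic. It is a sketch under stated assumptions: the function names, the number of permutations, the seed and the example data are all made up, and it assumes the within-group sum of squares is never zero. Group labels are shuffled repeatedly, and the p-value is the share of shuffles whose F statistic is at least as large as the observed one, so no normal-theory reference distribution is needed.

```python
import random
from statistics import fmean


def f_statistic(groups):
    """One-way ANOVA F statistic for a list of groups (each a list of numbers)."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    means = [fmean(g) for g in groups]
    between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    # Assumes within > 0, i.e. the observations are not all identical inside every group.
    return (between / (k - 1)) / (within / (n - k))


def randomization_pvalue(groups, n_perm=999, seed=0):
    """Permutation p-value: how often does shuffling labels give an F this large?"""
    rng = random.Random(seed)
    sizes = [len(g) for g in groups]
    pooled = [x for g in groups for x in g]
    observed = f_statistic(groups)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        shuffled, start = [], 0
        for size in sizes:
            shuffled.append(pooled[start:start + size])
            start += size
        if f_statistic(shuffled) >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)


# Made-up skewed episode lengths for three hypothetical groups.
example = [[3, 4, 4, 5, 30], [6, 7, 9, 11, 55], [5, 8, 12, 14, 70]]
print(randomization_pvalue(example))
```

The same skeleton works with a different statistic (for example one computed on ranks, or a trimmed-mean version) by swapping out `f_statistic`.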

Also see:

How robust is ANOVA when group sizes are unequal and residuals are not normally distributed?

3. Assumptions of ANOVA

ANOVA doesn't assume the entire set of numbers is normal. That is, unconditional normality is not an assumption of ANOVA - only conditional normality.

Which is to say, you can't really assess the ANOVA assumption on the original data; you assess it on the residuals.
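In a one-way layout the residuals are just the observations minus their own group mean. The sketch below shows that step with the Python standard library; the function name, group labels and numbers are made up for illustration. Pooled together, the two groups in the example look strongly bimodal, yet the residuals are centred on zero within each group, and it is those residuals that any normality diagnostic should be applied to.

```python
from statistics import fmean


def anova_residuals(groups):
    """Subtract each group's own mean from its observations (one-way layout)."""
    residuals = []
    for values in groups.values():
        centre = fmean(values)
        residuals.extend(x - centre for x in values)
    return residuals


# Made-up episode lengths (minutes) for two hypothetical groups with different means.
episodes = {
    "ward_a": [9.0, 10.0, 11.0, 10.5, 9.5],
    "ward_b": [29.0, 30.0, 31.0, 30.5, 29.5],
}
print(anova_residuals(episodes))
```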

https://stats.stackexchange.com/a/6351/805

https://stats.stackexchange.com/a/27611/805

Also:

https://stats.stackexchange.com/a/9575/805 (t-tests, a special case of ANOVA)

https://stats.stackexchange.com/a/12266/805 (regression, a generalization of ANOVA)