Yes

Provided that the bias decreases the variance of the error (here meaning the expected squared error of the estimator, i.e. its mean squared error).

In that case, the variance of the error decomposes into a contribution from the bias and a contribution from the variance of the estimator. If adding bias reduces the total, the variance of the estimator must be decreasing.

$$\text{var}(\text{error}) = \text{bias}(\text{estimator})^2 + \text{var}(\text{estimator})$$

So if you reduce $\text{var}(error)$ while increasing $bias(estimator)^2$, then $\text{var}(estimator)$ must necessarily decrease.
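For reference, here is a sketch of where the decomposition comes from. It reads $\text{var}(error)$ as the expected squared error $E[(\hat{\theta} - \theta)^2]$, and borrows the notation of the related question quoted further down: $\theta$ is the true value and $\hat{\theta}$ the estimator. Adding and subtracting $E[\hat{\theta}]$ inside the square gives

$$\begin{aligned}
E[(\hat{\theta} - \theta)^2] &= E[(\hat{\theta} - E[\hat{\theta}] + E[\hat{\theta}] - \theta)^2] \\
&= E[(\hat{\theta} - E[\hat{\theta}])^2] + 2\,(E[\hat{\theta}] - \theta)\,E[\hat{\theta} - E[\hat{\theta}]] + (E[\hat{\theta}] - \theta)^2 \\
&= \text{var}(\hat{\theta}) + \text{bias}(\hat{\theta})^2
\end{aligned}$$

The cross term vanishes because $E[\hat{\theta} - E[\hat{\theta}]] = 0$.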

This means that the only sensible types of bias (sensible meaning that they reduce the error) are the ones that reduce the variance of the estimator. As explained below, not every type of biased estimator does this.

No

However, if one uses some silly estimator that has both enormous variance and bias, then sure, a biased estimator can have more variance.

Example, related question

This is related to the question

Why exactly $E[(\hat{\theta}_n - E[\hat{\theta}_n])^2]$ and $E[\hat{\theta}_n - \theta]$ cannot be decreased simultaneously?

Seen here: Bias / variance tradeoff math

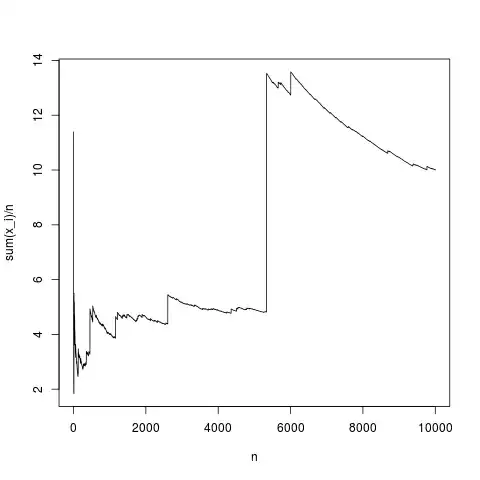

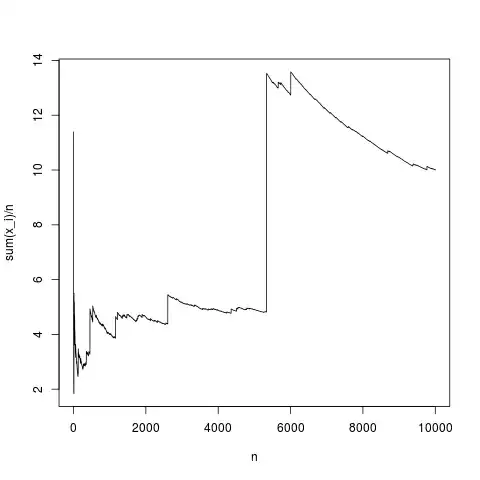

In an answer to that question we see the following graph for a shrinking estimator.

We can estimate the mean $\mu$ of some population by the sample mean $\bar{x}$ and then multiply it by some scaling factor $c$, which gives the estimator $c\bar{x}$.

In the graph on the right you see what happens to the variance and the bias when the shrinking (or inflating) parameter is changed. The unbiased estimator is in the middle ($c=1$). You can add bias by multiplying by a factor below one ($c<1$, to the left of the graph) or by a factor above one ($c>1$, to the right of the graph).

You can see that multiplying by a factor above one does not decrease the variance of the estimator (unsurprisingly, since the variance of the estimator scales with $c^2$).

But this is also not a type of bias that decreases the variance of the error, and it is not a type of bias that is typically considered in a bias-variance trade-off.
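The behaviour of the scaled estimator $c\bar{x}$ can be checked numerically. The sketch below is only an illustration: the function names and the values of `mu`, `sigma` and `n` are hypothetical, and it assumes independent, normally distributed samples, so that the bias is $(c-1)\mu$ and the variance is $c^2\sigma^2/n$. It compares these closed-form terms with a Monte Carlo estimate for one shrinking factor, the unbiased case, and one inflating factor.

```python
import numpy as np


def shrinkage_terms(c, mu, sigma, n):
    """Closed-form squared bias, variance and MSE of the estimator c * x_bar."""
    bias_sq = ((c - 1.0) * mu) ** 2      # bias of c * x_bar is (c - 1) * mu
    variance = c ** 2 * sigma ** 2 / n   # var(c * x_bar) = c^2 * sigma^2 / n
    return bias_sq, variance, bias_sq + variance


def simulate_terms(c, mu, sigma, n, reps=100_000, seed=0):
    """Monte Carlo estimate of the same three quantities (assumes normal samples)."""
    rng = np.random.default_rng(seed)
    x_bar = rng.normal(mu, sigma, size=(reps, n)).mean(axis=1)
    estimates = c * x_bar
    bias_sq = (estimates.mean() - mu) ** 2
    variance = estimates.var()
    mse = np.mean((estimates - mu) ** 2)
    return bias_sq, variance, mse


# Hypothetical population and sample size, chosen only for illustration.
mu, sigma, n = 1.0, 2.0, 4

for c in (0.5, 1.0, 1.5):
    exact = shrinkage_terms(c, mu, sigma, n)
    approx = simulate_terms(c, mu, sigma, n)
    print(f"c={c}: exact (bias^2, var, mse)={exact}, simulated={approx}")
```

With these assumed values the closed-form error is 0.5 for $c=0.5$, 1 for $c=1$ and 2.5 for $c=1.5$. Shrinking and inflating add the same squared bias (0.25), but only shrinking lowers the variance of the estimator and hence the total error.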