This is my first question here. I am reading the textbook "Mathematical Statistics and Data Analysis" (3rd ed.) by Rice. On page 495 there is a Theorem B for the two-way layout ANOVA.

Let me first give some background. One factor has $I$ levels and the other has $J$ levels, with $K$ independent observations in each of the $I \times J$ cells. The statistical model for an observation in a cell is $$ Y_{ijk} = \mu + \alpha_i + \beta_j + \delta_{ij} + \epsilon_{ijk}, $$ with the constraints $$ \sum_{i} \alpha_i = \sum_{j} \beta_j = \sum_{i} \delta_{ij} = \sum_{j} \delta_{ij} = 0. $$ Here $\mu$ is the grand average, $\alpha_i$ is the differential effect of the $i^{th}$ level of the first factor, $\beta_j$ is the differential effect of the $j^{th}$ level of the second factor, and $\delta_{ij}$ is the interaction between the $i^{th}$ level of the first factor and the $j^{th}$ level of the second. The errors $\epsilon_{ijk}$ are independent and normally distributed with mean zero and common variance $\sigma^2$.

We know that

$$
SS_{TOT} = SS_A + SS_B + SS_{AB} + SS_E,
$$

where

\begin{align*}
&SS_A = JK \sum_{i=1}^{I} (\bar{Y}_{i..} - \bar{Y}_{...})^2 \\
&SS_B = IK \sum_{j=1}^{J} (\bar{Y}_{.j.} - \bar{Y}_{...})^2 \\
&SS_{AB} = K \sum_{i=1}^{I} \sum_{j=1}^{J} (\bar{Y}_{ij.} - \bar{Y}_{i..} - \bar{Y}_{.j.} + \bar{Y}_{...})^2 \\
&SS_E = \sum_{i=1}^{I} \sum_{j=1}^{J} \sum_{k=1}^{K} (Y_{ijk} - \bar{Y}_{ij.})^2 \\
&SS_{TOT} = \sum_{i=1}^{I} \sum_{j=1}^{J} \sum_{k=1}^{K} (Y_{ijk} - \bar{Y}_{...})^2
\end{align*}
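As a sanity check on the definitions above, here is a minimal sketch that computes the five sums of squares for a simulated balanced layout and confirms the decomposition numerically. The function name `two_way_sums_of_squares`, the nested-list layout `y[i][j][k]`, and the choice $I=3$, $J=4$, $K=5$ are my own assumptions for illustration; nothing here comes from Rice.

```python
import random

def two_way_sums_of_squares(y):
    """Sums of squares for a balanced two-way layout; y[i][j][k] is Y_ijk."""
    I, J, K = len(y), len(y[0]), len(y[0][0])
    # Cell, row, column and grand means (equal K makes averaging cell means valid).
    cell = [[sum(y[i][j]) / K for j in range(J)] for i in range(I)]   # Ybar_{ij.}
    row = [sum(cell[i]) / J for i in range(I)]                        # Ybar_{i..}
    col = [sum(cell[i][j] for i in range(I)) / I for j in range(J)]   # Ybar_{.j.}
    grand = sum(row) / I                                              # Ybar_{...}

    ss_a = J * K * sum((row[i] - grand) ** 2 for i in range(I))
    ss_b = I * K * sum((col[j] - grand) ** 2 for j in range(J))
    ss_ab = K * sum((cell[i][j] - row[i] - col[j] + grand) ** 2
                    for i in range(I) for j in range(J))
    ss_e = sum((y[i][j][k] - cell[i][j]) ** 2
               for i in range(I) for j in range(J) for k in range(K))
    ss_tot = sum((y[i][j][k] - grand) ** 2
                 for i in range(I) for j in range(J) for k in range(K))
    return {"A": ss_a, "B": ss_b, "AB": ss_ab, "E": ss_e, "TOT": ss_tot}

# Hypothetical data: pure noise, fixed seed so the run is reproducible.
rng = random.Random(0)
I, J, K = 3, 4, 5
y = [[[rng.gauss(0.0, 1.0) for _ in range(K)] for _ in range(J)] for _ in range(I)]

ss = two_way_sums_of_squares(y)
gap = ss["TOT"] - (ss["A"] + ss["B"] + ss["AB"] + ss["E"])
print("decomposition holds up to rounding:", abs(gap) < 1e-9)
```

The identity is purely algebraic, so it should hold for any balanced data set, not only for data generated from the model.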

Also, we know that

\begin{align*}
&E[SS_A] = (I-1)\sigma^2 + JK \sum_{i=1}^{I} \alpha_i^2 \\
&E[SS_B] = (J-1)\sigma^2 + IK \sum_{j=1}^{J} \beta_j^2 \\
&E[SS_{AB}] = (I-1)(J-1) \sigma^2 + K \sum_{i=1}^{I} \sum_{j=1}^{J} \delta_{ij}^2 \\
&E[SS_E] = IJ(K-1) \sigma^2
\end{align*}
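These expectations can be checked roughly by simulation. The sketch below is only an illustration under assumptions I chose myself ($I=2$, $J=3$, $K=2$, $\sigma=1$, $\mu=0$, no interaction, and made-up effects `alpha` and `beta` that sum to zero). It compares Monte Carlo averages of $SS_A$ and $SS_E$ with the formulas above.

```python
import random

def ss_a_and_ss_e(y):
    """Return (SS_A, SS_E) for a balanced layout; y[i][j][k] is Y_ijk."""
    I, J, K = len(y), len(y[0]), len(y[0][0])
    cell = [[sum(y[i][j]) / K for j in range(J)] for i in range(I)]  # Ybar_{ij.}
    row = [sum(cell[i]) / J for i in range(I)]                       # Ybar_{i..}
    grand = sum(row) / I                                             # Ybar_{...}
    ss_a = J * K * sum((r - grand) ** 2 for r in row)
    ss_e = sum((y[i][j][k] - cell[i][j]) ** 2
               for i in range(I) for j in range(J) for k in range(K))
    return ss_a, ss_e

# Assumed (hypothetical) design and parameters; mu = 0 and delta_ij = 0.
rng = random.Random(1)
I, J, K, sigma = 2, 3, 2, 1.0
alpha = [1.0, -1.0]        # sums to zero
beta = [0.5, 0.0, -0.5]    # sums to zero
reps = 4000

total_a = total_e = 0.0
for _ in range(reps):
    y = [[[alpha[i] + beta[j] + rng.gauss(0.0, sigma) for _ in range(K)]
          for j in range(J)] for i in range(I)]
    s_a, s_e = ss_a_and_ss_e(y)
    total_a += s_a
    total_e += s_e

mean_a, mean_e = total_a / reps, total_e / reps
expected_a = (I - 1) * sigma ** 2 + J * K * sum(a_i ** 2 for a_i in alpha)
expected_e = I * J * (K - 1) * sigma ** 2
print("SS_A: simulated", round(mean_a, 2), "vs formula", expected_a)
print("SS_E: simulated", round(mean_e, 2), "vs formula", expected_e)
```

With these values the formulas give $E[SS_A] = 13$ and $E[SS_E] = 6$; the simulated averages should land close to these but will not match exactly.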

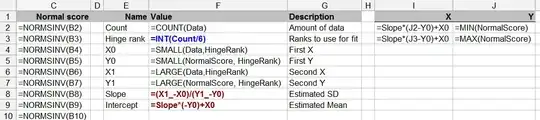

The following image is the aforesaid Theorem B on which I have questions,

The proofs of a, b and c are straightforward; my questions are about the proofs of d and e:

- How does one prove d? (I tried some algebra to relate $\bar{Y}_{ij.}$ to $\bar{Y}_{i..}$ and $\bar{Y}_{.j.}$, but got stuck.)

- For e: since $SS_{A}$ and $SS_{B}$ can both be regarded as functions of the $\bar{Y}_{ij.}$, it is easy to prove that each of them is independent of $SS_{E}$, which is what allows us to carry out the F test. But $SS_{A}$ and $SS_{B}$ themselves seem to be dependent, which contradicts e. (Or does the author only mean independence between $SS_{E}$ and each of the others, not independence among the others?)