TL;DR: My 1D CNN does a very poor job of classifying graphs. Here's more context:

Note: I've tried adopting the advice listed here and here, but my CNN still overfits. I've already tried much of the advice listed there, including unit testing and changing the architecture. In fact, the CNN works just fine with two classes, but for some reason it fails with three.

What am I trying to do?

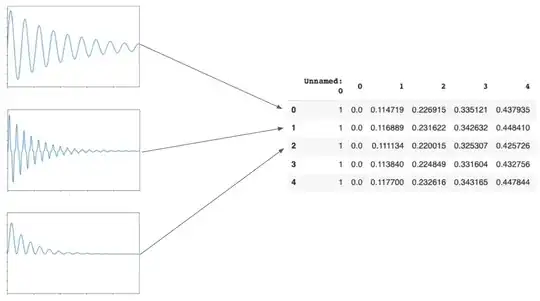

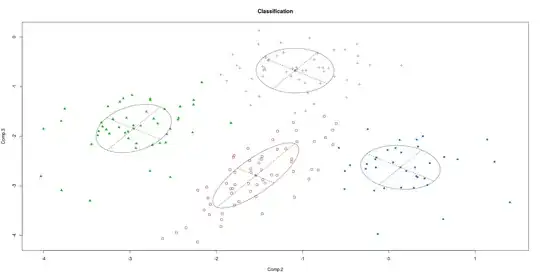

My time series is a 90 x 100 table of points from three different types of graphs: Linear, Quadratic, and Cubic Sinusoidal. There are 30 rows for linear graphs, 30 for quadratics, and 30 for cubics, and I sampled 100 points from every graph. The images above illustrate this.
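To make the data layout concrete, here is a minimal sketch that builds a table of the same shape from synthetic curves. The generating functions are an assumption: the post does not say how the graphs were produced (or what the sinusoidal part of the cubic class is), so this uses plain random-coefficient polynomials, and `make_graph_dataset` is a hypothetical helper. The last step adds a variable axis, because tsai expects inputs shaped (samples, variables, sequence length), which is what the `test_eq(X.shape, (3000, 1, 100))` check in the MWE verifies.

```python
import numpy as np

def make_graph_dataset(n_per_class=30, n_points=100, seed=0):
    """Return a (3 * n_per_class, n_points) table of sampled curves and labels 1/2/3.

    Hypothetical stand-in for the real data: random-coefficient linear (1),
    quadratic (2) and cubic (3) polynomials evaluated on a fixed grid.
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(-1.0, 1.0, n_points)
    rows, labels = [], []
    for label in (1, 2, 3):                       # label doubles as polynomial degree
        for _ in range(n_per_class):
            coeffs = rng.normal(size=label + 1)   # highest-degree coefficient first
            # keep the leading coefficient away from zero so the degree is visible
            coeffs[0] = rng.choice([-1.0, 1.0]) * rng.uniform(0.5, 2.0)
            rows.append(np.polyval(coeffs, x))
            labels.append(label)
    return np.stack(rows), np.array(labels)

table, y = make_graph_dataset()
print(table.shape, y.shape)   # (90, 100) (90,)

# tsai-style 3D input: (samples, variables, sequence length)
X = table[:, None, :]
print(X.shape)                # (90, 1, 100)
```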

Here's the problem

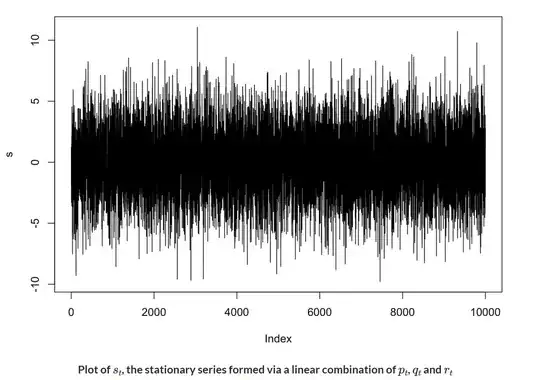

I'm very confused about why my 1D FCN is performing so badly. I've been debugging it for the last two weeks (changing the training set, the number of classes, the architecture...) and nothing works. Here's a quick overview:

- The confusion matrix shows only 42% validation accuracy.

- The loss curves also look disappointing: the model seems to start overfitting after only 10 epochs (I also tried 100 and 1000 epochs, with the same results).

- The probability graph shows that the network is extremely confident about the Quadratic class, but very confused about the Linear and Cubic graphs.
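With three balanced classes, chance level is about 33%, so 42% is only modestly better than guessing. A per-class breakdown makes the pattern in the confusion matrix and probability graph explicit. This is a minimal NumPy sketch with a hypothetical helper, assuming labels are encoded as integers 0..K-1 and `probs` holds one row of class probabilities per sample; it computes the confusion matrix, per-class recall, and the mean probability the model assigns to the correct class.

```python
import numpy as np

def per_class_report(y_true, probs, class_names):
    """Confusion matrix, per-class recall and mean confidence in the true class.

    y_true: integer labels 0..K-1, shape (N,)
    probs:  predicted class probabilities, shape (N, K)
    """
    k = len(class_names)
    y_pred = probs.argmax(axis=1)
    cm = np.zeros((k, k), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)        # rows = true class, columns = predicted class
    recall = cm.diagonal() / cm.sum(axis=1)   # fraction of each true class recovered
    accuracy = cm.diagonal().sum() / cm.sum()
    for i, name in enumerate(class_names):
        conf = probs[y_true == i, i].mean()   # mean probability given to the correct class
        print(f"{name:>9}: recall={recall[i]:.2f}, mean p(correct)={conf:.2f}")
    return cm, recall, accuracy

# Toy example: the model favours class 1 ("Quadratic") for ambiguous samples
y_true = np.array([0, 0, 1, 1, 2, 2])
probs = np.array([
    [0.2, 0.7, 0.1],
    [0.6, 0.3, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.9, 0.0],
    [0.3, 0.4, 0.3],
    [0.2, 0.2, 0.6],
])
cm, recall, acc = per_class_report(y_true, probs, ["Linear", "Quadratic", "Cubic"])
```

In fastai-based libraries such as tsai, validation probabilities and targets can usually be obtained with `learn.get_preds()` and converted with `.numpy()` before being passed to a helper like this.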

Here's how I tried to fix it

Here are some of the strategies I used for debugging (none worked):

- Made the problem simpler by giving the FCN only two classes to classify. At one point, the network actually reached 100% validation accuracy after 5 epochs; I tried the exact same network on the three classes and it failed catastrophically.

- Changed the number of epochs (both increased and decreased it, trying everything from 10 to 1000 epochs).

- Decreased my training set from 30k to 3k to 300, all the way down to 60. However, this did not change the problem.
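The two simplifications above, dropping to two classes and shrinking the training set, can be reproduced with plain NumPy indexing. This is a minimal sketch assuming `X` has shape (samples, 1, sequence length) and `y` holds the class labels; both helper names are hypothetical. Subsampling per class keeps the classes balanced as the set shrinks (for example, 20 samples per class for a 60-sample set).

```python
import numpy as np

def subset_classes(X, y, keep):
    """Keep only samples whose label is in `keep` and relabel them 0..len(keep)-1."""
    keep = list(keep)
    mask = np.isin(y, keep)
    y_kept = y[mask]
    y_sub = np.zeros(len(y_kept), dtype=int)
    for new, old in enumerate(keep):
        y_sub[y_kept == old] = new
    return X[mask], y_sub

def stratified_subsample(y, n_per_class, seed=0):
    """Return sorted indices with at most n_per_class randomly chosen samples per label."""
    rng = np.random.default_rng(seed)
    idx = [rng.permutation(np.flatnonzero(y == c))[:n_per_class] for c in np.unique(y)]
    return np.sort(np.concatenate(idx))

# Toy data: 12 series of length 5, four per class (labels 1, 2, 3)
X = np.arange(60, dtype=float).reshape(12, 1, 5)
y = np.repeat([1, 2, 3], 4)

X2, y2 = subset_classes(X, y, keep=[1, 3])   # two-class problem: Linear vs Cubic
idx = stratified_subsample(y, n_per_class=2) # balanced 6-sample subset
```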

Code

Here is the code: 1D CNN Time Series Classifier. Below is a minimal working example (MWE) if you need it. I'm using tsai (time-series AI), a library built on fastai, which is itself built on PyTorch.

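```python
# tsai's tutorials start with this import; it also exposes the fastai/fastcore
# names used below (test_eq, accuracy, ShowGraph, ...)
from tsai.all import *
import pandas as pd

url = "https://barisciencelab.tech/Training3.csv"
c = pd.read_csv(url)
c.head()  # notebook display

# Every column except the first and the last is a time step; 'Class' is the target
X, y = df2xy(c, data_cols=c.columns[1:-1], target_col='Class')
test_eq(X.shape, (3000, 1, 100))  # (samples, variables, sequence length)
test_eq(y.shape, (3000, ))

# Stratified 80/20 train/validation split
splits = get_splits(y, valid_size=.2, stratify=True, random_state=23, shuffle=True)

class_map = {
    1: 'Linear',
    2: 'Quadratic',
    3: 'Cubic',
}
class_map  # notebook display

labeler = ReLabeler(class_map)
new_y = labeler(y)  # map to more descriptive labels
X.shape, new_y.shape, splits, new_y  # notebook display

tfms = [None, TSClassification()]  # TSClassification == Categorize
batch_tfms = TSStandardize()
dls = get_ts_dls(X, new_y, splits=splits, tfms=tfms, batch_tfms=batch_tfms, bs=[64, 128])
dls.dataset  # notebook display

learn = ts_learner(dls, FCN, metrics=accuracy, cbs=ShowGraph())
learn.fit_one_cycle(30, lr_max=1e-4)
```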
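One cheap sanity check on this pipeline is to confirm that the stratified split really gives each class its fair share of the training and validation sets. A minimal sketch with a hypothetical helper, assuming `splits` is a (train indices, validation indices) pair such as `get_splits` produces for a single split; with the MWE's data it would be called as `split_class_counts(y, splits)`.

```python
import numpy as np

def split_class_counts(y, splits, names=("train", "valid")):
    """Count how many samples of each label fall into each split."""
    y = np.asarray(y)
    labels = np.unique(y)
    counts = {}
    for name, idx in zip(names, splits):
        idx = np.asarray(list(idx), dtype=int)  # accepts lists, arrays or other iterables of indices
        counts[name] = {lab.item(): int((y[idx] == lab).sum()) for lab in labels}
    return counts

# Toy example: 30 samples, 10 per class, alternating train/valid indices
y_toy = np.repeat([1, 2, 3], 10)
toy_splits = (list(range(0, 30, 2)), list(range(1, 30, 2)))
counts = split_class_counts(y_toy, toy_splits)
print(counts)
```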

I would be very grateful if anyone could help me resolve this issue.