I understand the use of an evaluation set for parameter tuning and for detecting over-fitting in general. The examples in the evaluation set should be unseen and different from the training set.

However, in the following toy CatBoost regression problem, where I deliberately set the evaluation set to be identical to the training set, I do not understand why the calculated evaluation error deviates from the training error over the iterations.

    from catboost import CatBoostRegressor, Pool

    # Column 0 is treated as a categorical feature
    cat_features = [0]

    train_data = [["b", 1],
                  ["b", 5],
                  ["e", 3],
                  ["c", 3],
                  ["d", 4]]
    train_labels = [1., 3.51, 0.43, 0.45, 0.55]

    train_data = Pool(train_data, train_labels, cat_features=cat_features)

    model = CatBoostRegressor(iterations=100, use_best_model=True, random_seed=1234)

    # The evaluation set is intentionally the same Pool as the training set
    model.fit(train_data, eval_set=train_data, verbose=True, plot=True)

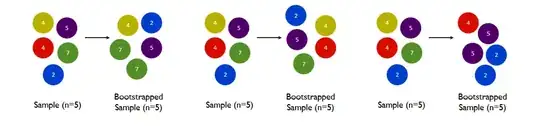

The error rate for evaluation error (solid line) and training error (dotted line) over iterations are shown as follows:

On the other hand, if I change the only categorical feature into a numerical feature (replacing a=1, b=2, c=3 and so on) as follows, the issue disappears, i.e., the evaluation error matches the training error over all iterations.

    from catboost import CatBoostRegressor, Pool

    # No categorical features: column 0 is now an ordinary number
    cat_features = []

    train_data = [[2, 1],
                  [2, 5],
                  [5, 3],
                  [3, 3],
                  [4, 4]]
    train_labels = [1., 3.51, 0.43, 0.45, 0.55]

    train_data = Pool(train_data, train_labels, cat_features=cat_features)

    model = CatBoostRegressor(iterations=100, use_best_model=True, random_seed=1234)

    # Same setup as before: evaluate on the training Pool itself
    model.fit(train_data, eval_set=train_data, verbose=True, plot=True)
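For reference, the a=1, b=2, c=3 replacement can be reproduced programmatically instead of by hand. This is only a minimal sketch: `letter_to_number` is a hypothetical helper name of mine, not part of CatBoost, and it assumes every category is a single lowercase letter.

```python
def letter_to_number(letter):
    """Map 'a' -> 1, 'b' -> 2, 'c' -> 3, ... (assumes one lowercase ASCII letter)."""
    return ord(letter) - ord("a") + 1

# The rows from the categorical version of the toy problem
categorical_rows = [["b", 1], ["b", 5], ["e", 3], ["c", 3], ["d", 4]]

# Replace the letter in column 0 by its position in the alphabet
numeric_rows = [[letter_to_number(cat), value] for cat, value in categorical_rows]

print(numeric_rows)  # [[2, 1], [2, 5], [5, 3], [3, 3], [4, 4]]
```

The result is exactly the numeric `train_data` used in the second experiment, so the two runs differ only in whether column 0 is declared categorical.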

I do not understand this behavior. I suspect it may be due to some randomness in the categorical encoding. Could someone please help?
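To make my suspicion concrete, here is a minimal pure-Python sketch of how such a gap could arise. It is an assumption on my part, not CatBoost's actual implementation. It uses a simplified mean-target encoding in which each training row is encoded only from the rows that precede it in a random permutation, while an evaluation row is encoded from all training rows. The function names, the `prior` value and the smoothing formula are all hypothetical.

```python
import random

def ordered_target_stats(categories, targets, prior, seed):
    """Encode each row using only rows that precede it in a random permutation."""
    order = list(range(len(categories)))
    random.Random(seed).shuffle(order)
    sums, counts = {}, {}
    encoded = [0.0] * len(categories)
    for i in order:
        cat = categories[i]
        s, n = sums.get(cat, 0.0), counts.get(cat, 0)
        # Smoothed mean of the targets seen so far for this category
        encoded[i] = (s + prior) / (n + 1)
        # Only now does this row's own target become visible to later rows
        sums[cat] = s + targets[i]
        counts[cat] = n + 1
    return encoded

def full_target_stats(categories, targets, prior):
    """Encode each row using the targets of all rows with the same category."""
    sums, counts = {}, {}
    for cat, y in zip(categories, targets):
        sums[cat] = sums.get(cat, 0.0) + y
        counts[cat] = counts.get(cat, 0) + 1
    return [(sums[c] + prior) / (counts[c] + 1) for c in categories]

categories = ["b", "b", "e", "c", "d"]
targets = [1.0, 3.51, 0.43, 0.45, 0.55]
prior = sum(targets) / len(targets)  # assumed prior: the global target mean

train_view = ordered_target_stats(categories, targets, prior, seed=1234)
eval_view = full_target_stats(categories, targets, prior)

for cat, t, e in zip(categories, train_view, eval_view):
    print(cat, round(t, 4), round(e, 4))
```

Under these assumptions the same rows get different encoded feature values depending on whether they are treated as training or evaluation data, which would make the two error curves differ even though the data are identical. With a purely numerical feature no such encoding step exists, which would be consistent with the curves matching in the second experiment.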