I am utterly confused. I have been reading about the optimality of Bayes classifier and Bayesian model averaging all the time, but when I try to dig deeper, I just get more confused.

On the one hand,

I have been hearing about the Bayes Optimal Classifier:

$$ y = \arg \max_{c_j \in C} \sum_{h_i \in H} P(c_j|h_i)P(T|h_i)P(h_i)=\arg \max_{c_j \in C} \sum_{h_i \in H} P(c_j|h_i) P(h_i|T) $$

Mitchell (1997) said that "no other classification method using the same hypothesis space and same prior knowledge can outperform this method on average." It also noted that the resulting hypothesis does not have to be in H. The above-cited Wikipedia article further said, "the hypothesis represented by the Bayes optimal classifier, however, is the optimal hypothesis in ensemble space (the space of all possible ensembles consisting only of hypotheses in H)."

Clarification

Through discussions with @tim, it became clear that there appear two different definitions of Bayesian Optimal Classifier. My use of the term above is a synonym of Bayesian Model Averaging, as noted by this paper. This seems how the term Bayesian Optimal Classifier is used in the above-cited sources, too. For example, Mitchell (1997) said of Bayesian Optimal Classifier that "no other classification method using the same hypothesis space and same prior knowledge can outperform this method on average." This implies that for his definition of BOC, the hypothesis space contains only some pre-determined hypotheses and may not contain the true hypothesis.

On the other hand,

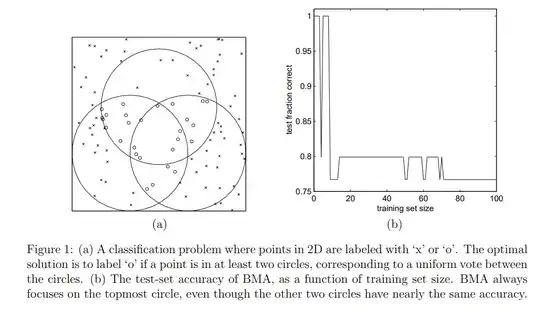

Minka (2002) gives an example where the simple average of three hypotheses outperforms Bayesian model averaging. In the below figure from the paper, the three circles represent the three hypotheses and the true class assignment is that an example is an "o" iff it is inside at least two circles.

Figure 1 (b) noted that the bigger the training set, the higher posterior probability it assigns to one of the circles (the top one), and the lower the accuracy.

His main point is that Bayesian model averaging assumes that exactly one hypothesis is responsible for all of the data and the posterior probability $P(h_i|T)$ only represents the uncertainty of which hypothesis generates the data. Even if the true hypothesis is not in $H$, as more data arrives, more and more weight will be given to the most probable hypothesis that is in H. By contrast, model combination such as bagging enriches the hypothesis space.

The view that Bayesian model averaging assumes data being generated by a single model seems to echo Bishop's Pattern recognition and machine learning.

But these points seem the opposite of what Wikipedia and Mitchell (1997) said about Bayes classifier.

Here is my question:

How do we understand the optimality of BMA/MOC? Specifically, statements like: - Mitchell (1997) page 175, "No other classification method using the same hypothesis space and same prior knowledge can outperform this method on average." - Wikipedia: "the hypothesis represented by the Bayes optimal classifier, however, is the optimal hypothesis in ensemble space (the space of all possible ensembles consisting only of hypotheses in H)?"

I have never seen proof of them. Also, the example in Minka (2002) cited above seems to have given a counterexample to both of these statements?