According to the documentation for glmer, nAGQ refers to "the number of points per axis for evaluating the adaptive Gauss-Hermite approximation to the log-likelihood". I understand the n in the acronym stands for number of points, while the AGQ stands for Adaptive Gauss-Hermite Quadrature.

Douglas Bates further explains that the difference between nAGQ=0 and nAGQ=1 "is whether the fixed-effects parameters, $\beta$, are optimized in PIRLS or in the nonlinear optimizer."

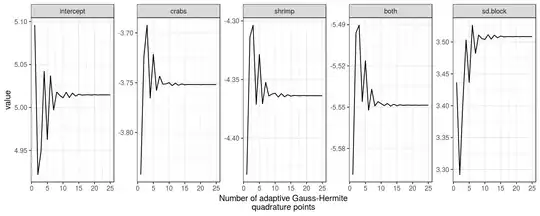

The general advice seems to be that setting nAGQ = 0 is less accurate than setting nAGQ = 1 (the default), and that setting it higher is better still where possible. There is a tradeoff, though: higher values of nAGQ have longer runtimes and a higher chance of convergence failures.
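To make the comparison concrete, here is a minimal sketch of fitting the same model under three settings and putting the estimates side by side. It assumes the lme4 package is installed and uses its bundled cbpp data purely for illustration (it is not the data behind my question); the helper name fit_with is made up. Note that glmer only accepts nAGQ > 1 for models with a single scalar random-effects term, which is why the example has just one random intercept.

```r
# Sketch only: lme4's bundled cbpp data stands in for a real dataset.
library(lme4)

# Hypothetical helper: fit the same random-intercept model with a given nAGQ.
fit_with <- function(n_points) {
  glmer(cbind(incidence, size - incidence) ~ period + (1 | herd),
        data = cbpp, family = binomial, nAGQ = n_points)
}

fits <- lapply(c(0, 1, 10), fit_with)
names(fits) <- c("nAGQ0", "nAGQ1", "nAGQ10")

# Fixed-effect estimates, one column per nAGQ setting.
estimates <- sapply(fits, fixef)
print(round(estimates, 4))

# Estimated standard deviation of the herd random intercept per setting.
herd_sd <- sapply(fits, function(f) attr(VarCorr(f)$herd, "stddev"))
print(round(herd_sd, 4))
```

How large a discrepancy between the columns counts as "too large" is exactly the judgment call I am asking about; the code only makes the discrepancy visible.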

I have in mind scenarios in which the person running the model intends for the results to be published in a scientific paper, and not scenarios in which the person doesn’t care much about accuracy as opposed to runtime. Is setting nAGQ = 0 only appropriate when the model will otherwise not converge (or won't converge in sufficient time), and all other means of resolving the convergence issue have failed? What other factors make it more or less acceptable?

Robert Long has suggested elsewhere on this site that

> You might get more accurate results with nAGQ>0. The higher the better. A good way to assess whether you need to is to take some samples from your dataset, run the models with nAGQ=0 and nAGQ>0 and compare the results on the smaller datasets. If you find little difference, then you have a good reason to stick with nAGQ=0 in the full dataset.

This seems a good rule of thumb, although there remains the question of what to do if the subsampled data has convergence failures when nAGQ is greater than 0.
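For reference, this is roughly how I understand the subsampling check, written as a sketch rather than a recommended procedure. It assumes lme4 is installed and again uses the bundled cbpp data as a stand-in. The helper fit_and_record, the choice of 10 herds, and the idea of scaling the differences by the nAGQ = 1 standard errors are my own illustrative choices, not part of the quoted suggestion. Warnings are collected instead of printed, so a convergence warning on the subsample is at least recorded next to the estimates.

```r
# Sketch only: cbpp stands in for the real data; names below are hypothetical.
library(lme4)

set.seed(1)  # arbitrary seed so the subsample is reproducible

# Subsample whole herds rather than rows, so each retained cluster
# keeps all of its observations.
keep <- sample(levels(cbpp$herd), 10)
sub  <- droplevels(subset(cbpp, herd %in% keep))

# Fit once and collect any warnings (convergence warnings included).
fit_and_record <- function(n_points, data) {
  warns <- character(0)
  fit <- withCallingHandlers(
    glmer(cbind(incidence, size - incidence) ~ period + (1 | herd),
          data = data, family = binomial, nAGQ = n_points),
    warning = function(w) {
      warns <<- c(warns, conditionMessage(w))
      invokeRestart("muffleWarning")
    }
  )
  list(fit = fit, warnings = warns)
}

res0 <- fit_and_record(0, sub)
res1 <- fit_and_record(1, sub)

# Difference in fixed effects, in units of the nAGQ = 1 standard errors.
se1 <- sqrt(diag(as.matrix(vcov(res1$fit))))
scaled_diff <- (fixef(res0$fit) - fixef(res1$fit)) / se1
print(round(scaled_diff, 3))

# Any warnings raised while fitting with nAGQ = 1 on the subsample.
print(res1$warnings)
```

If res1$warnings is non-empty on most subsamples, the comparison itself becomes doubtful, which is the situation I describe above.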

My question is related to another question, in which I could not find any other way to make a model converge aside from setting nAGQ = 0, and usually had convergence failures on subsampled data.