I originally had a binary CNN which was reaching 98% accuracy after I fixed what I think was overfitting. I then changed it to a classification CNN with 4 classes, and I have had trouble ever since. I split waiting into waiting and empty, and running into running and partial. These classes are described below.

I am using Colab and have made the data available through a shared link, so anyone should be able to run it: https://colab.research.google.com/drive/1fhzgXEsqhVR0CQeMA3PCoPIAHBQiIVxb?usp=sharing

Issue: Below is the output of the fit command. As you can see, the stats stay the same from epoch 1 onward. I have changed the model by removing or adding Conv2D layers, along with adding or removing dropout and max-pooling. My core pictures are not great because of a light, but with 3 of the 4 classes having very similar photos I believe accuracy should be at least 60%. I know I don't have enough photos at the moment, but with accuracy always going back to right around 33% - 34% within the first or second epoch, I feel like there is a bigger issue going on. I have gone back to reclassify my photos several times, making those 3 of the 4 classes very similar, hoping that might be the issue. The one class called partial does have photos that are more scattered.

    Epoch 1/10
    93/93 - 87s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 2/10
    93/93 - 86s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 3/10
    93/93 - 86s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 4/10
    93/93 - 87s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 5/10
    93/93 - 87s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 6/10
    93/93 - 85s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 7/10
    93/93 - 87s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 8/10
    93/93 - 86s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 9/10
    93/93 - 85s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
    Epoch 10/10
    93/93 - 86s - loss: 6.1132 - accuracy: 0.3440 - val_loss: 2.6013 - val_accuracy: 0.0814
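
A quick sanity check for numbers like these: if training accuracy sits at the share of the largest class, the network is probably predicting one class for every image. The sketch below computes that majority-class baseline. The per-class counts are made up for illustration (they are not the real counts from the notebook), and `majority_baseline` is a hypothetical helper name.

```python
def majority_baseline(class_counts):
    """Return the most common class and the accuracy of always predicting it."""
    total = sum(class_counts.values())
    if total == 0:
        raise ValueError("no images counted")
    label = max(class_counts, key=class_counts.get)
    return label, class_counts[label] / total


# Hypothetical counts, for illustration only; substitute the real folder sizes.
example_counts = {"empty": 500, "partial": 450, "running": 1020, "waiting": 1000}

label, baseline = majority_baseline(example_counts)
print(f"always predicting '{label}' scores {baseline:.4f}")
```

If the real baseline comes out close to 0.3440, that would suggest the model has collapsed to a single class rather than that the photos are too hard to tell apart.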

Model: Below is the latest Keras model I am using, which gave the output above.

    # Our input feature map is 150x150x3: 150x150 for the image pixels, and 3 for
    # the three color channels: R, G, and B
    img_input = layers.Input(shape=(model_image_width, model_image_height, 3))

    # First convolution extracts 16 filters that are 3x3
    # Convolution is followed by max-pooling layer with a 2x2 window
    x = layers.Conv2D(16, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu')(img_input)
    x = layers.MaxPooling2D(pool_size=(2,2))(x)

    # Second convolution extracts 32 filters that are 3x3
    # Convolution is followed by max-pooling layer with a 2x2 window
    x = layers.Conv2D(32, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu')(x)
    x = layers.MaxPooling2D(pool_size=(2,2))(x)
    x = layers.Dropout(0.2)(x)

    # Third convolution extracts 64 filters that are 3x3
    # Convolution is followed by max-pooling layer with a 2x2 window
    x = layers.Conv2D(64, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu')(x)
    x = layers.MaxPooling2D(pool_size=(2,2))(x)
    x = layers.Dropout(0.2)(x)

    # Flatten feature map to a 1-dim tensor so we can add fully connected layers
    x = layers.Flatten()(x)

    # Create a fully connected layer with ReLU activation and 512 hidden units
    x = layers.Dense(512, activation='relu')(x)

    # Create output layer with one node per class (no activation specified)
    output = layers.Dense(model_classes)(x)  # classification, 4 classes
    # output = layers.Dense(1, activation='sigmoid')(x)  # binary 0 or 1

    # Create model:
    model = Model(img_input, output)
    model.compile(loss='categorical_crossentropy', metrics=['accuracy'], optimizer='adam')
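
One thing that may be worth checking in the model above: the final `Dense` layer has no activation, so it emits raw scores, while the string loss `'categorical_crossentropy'` by default treats its input as probabilities. The plain-Python sketch below (no Keras needed) illustrates the difference between raw scores and softmax probabilities. The score values are invented for illustration. In Keras, the usual ways to make the two agree are `activation='softmax'` on the last layer or `tf.keras.losses.CategoricalCrossentropy(from_logits=True)` as the loss. Whether this mismatch is what pins the metrics here is an assumption, not something verified against the notebook.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot, probs, eps=1e-7):
    """Categorical cross-entropy for one sample; expects probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


# Hypothetical raw output of a Dense layer with no activation.
raw_scores = [2.0, -1.0, 0.5, -3.0]
target = [0, 0, 1, 0]  # one-hot label for "running"

probs = softmax(raw_scores)
loss = cross_entropy(target, probs)

print("raw scores sum to", sum(raw_scores), "and include negatives")
print("softmax output sums to", round(sum(probs), 6))
print("cross-entropy on softmax output:", round(loss, 4))
```

Raw scores can be negative and need not sum to 1, so a loss that expects probabilities has to clip or rescale them, which can leave little or no useful gradient.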

    history = model.fit(
        train_generator,
        steps_per_epoch=train_image_count / batch_size,  # image count above / batch_size = steps
        epochs=train_epochs,
        validation_data=validation_generator,
        validation_steps=validation_image_count / batch_size,  # image count above / batch_size = steps
        verbose=2)
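
A side note on the step counts: `train_image_count / batch_size` is true division in Python 3, so it produces a float whenever the image count is not a multiple of the batch size. A common way to make the step count an explicit integer that still covers the last partial batch is to round up. The sketch below uses invented image counts and batch size, and `steps_for` is a hypothetical helper, not part of Keras.

```python
import math


def steps_for(image_count, batch_size):
    """Number of batches needed to see every image once, rounding up."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return math.ceil(image_count / batch_size)


# Hypothetical values, for illustration only.
train_image_count = 2970
validation_image_count = 860
batch_size = 32

train_steps = steps_for(train_image_count, batch_size)
validation_steps = steps_for(validation_image_count, batch_size)
print(train_steps, validation_steps)
```

The resulting integers could then be passed as `steps_per_epoch` and `validation_steps`. This is unlikely to explain the frozen metrics on its own, but it removes one source of ambiguity.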

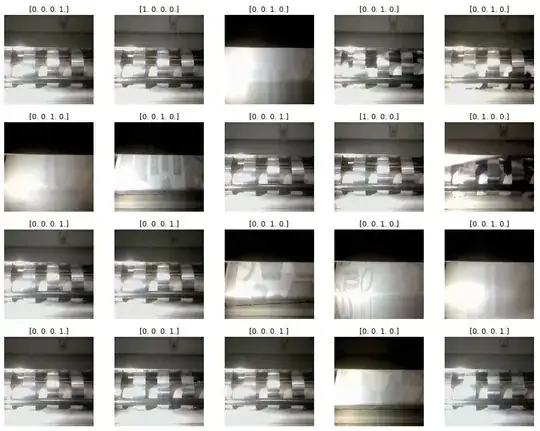

Pictures: These pictures are taken from the underside of a machine. They are not great pictures, especially with that light, but the features that distinguish the classes are visible. Paper, which is white with colors printed in places, is fed in from the top and goes around a belt. The paper can go outside or inside the metal holders. There is also tissue paper that is put in first and lasts for a long time. The tissue paper is brown and has to be running through for the main paper to go through. I have 4 classes, described below.

[1,0,0,0] empty > no tissue paper is running, you can see a metal bar going across

[0,0,0,1] waiting > tissue paper is flowing but no paper is going through yet

[0,1,0,0] partial > paper is seen but not fully set or only cut pieces are going in

[0,0,1,0] running > full wide paper is running through either inside or outside of the metal holders.
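
The one-hot vectors above imply the index order empty, partial, running, waiting, which is alphabetical. That is also the order Keras `flow_from_directory` assigns when it infers classes from subdirectory names, although whether the notebook loads data that way is an assumption here. A small sketch of the mapping in both directions, with hypothetical helper names:

```python
# Index order implied by the one-hot vectors listed above.
CLASS_NAMES = ["empty", "partial", "running", "waiting"]


def one_hot(name):
    """Return the one-hot label vector for a class name."""
    index = CLASS_NAMES.index(name)
    return [1 if i == index else 0 for i in range(len(CLASS_NAMES))]


def decode(vector):
    """Return the class name with the highest score in a label or prediction vector."""
    best = max(range(len(vector)), key=lambda i: vector[i])
    return CLASS_NAMES[best]


for name in CLASS_NAMES:
    print(one_hot(name), name)

# A made-up prediction vector, for illustration only.
print(decode([0.1, 0.2, 0.6, 0.1]))
```

Comparing this list with the generator's `class_indices` attribute is a cheap way to confirm that the plotted labels and the training labels agree.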

Below is an example of the photos plotted out with their label.

Usage: If you are wondering, this is a sublimation machine. It uses heat and compression to move ink from paper, usually 64 inches wide, to fabric. The paper is hand-fed on a table above into a big roller, comes out here, and turns to go to the back of the machine. Sometimes the paper is cut up and fed in as pieces, which to me is partial. My goal is to process a photo every couple of seconds to get the state of the machine throughout the day.

This is my first attempt outside of tutorial datasets, using my own data.