I was redirected from StackOverflow because my question is more about theory.

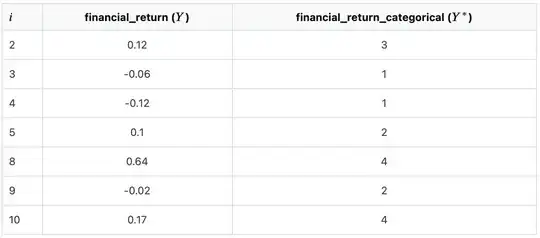

I have a typical setup: a pandas DataFrame with some features and a numeric target variable (financial returns, for example). I now want to turn this into a classification problem: rather than predicting the numerical value of the return, I want to predict classes. My question is how to correctly create the classes of the target variable when the class boundaries depend on the data. For example, I want to create 4 classes (1, ..., 4) based on the quartiles of the target variable. My belief is that, given the full data set, I cannot compute the quartiles on the whole target variable, then make a train/test split and run CV on the train set, because the quartile values used to create the classes would then be based on the test data as well.

So how can I approach such a task within the sklearn framework? I saw that there is a class TransformedTargetRegressor that goes in this direction: one could possibly use it together with KBinsDiscretizer to transform the target variable. The problem I see is that it always back-transforms the classes into numerical values when calling .predict etc., but I want to do classification, not predict numerical values.
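To make the idea concrete, here is a minimal sketch of what I mean by deriving the class boundaries from the training part only. The data is synthetic and the column names (`f1`, `f2`, `f3`, `ret`) are placeholders I made up for illustration; I am not sure this is the intended sklearn way, it is just how I would do it by hand with `np.quantile` and `np.digitize`.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic stand-in for the real data (hypothetical column names).
df = pd.DataFrame(rng.normal(size=(1000, 3)), columns=["f1", "f2", "f3"])
df["ret"] = 0.5 * df["f1"] + rng.normal(size=1000)

X_train, X_test, y_train, y_test = train_test_split(
    df[["f1", "f2", "f3"]], df["ret"], test_size=0.25, random_state=0
)

# Quartile boundaries estimated from the training target only.
edges = np.quantile(y_train.to_numpy(), [0.25, 0.5, 0.75])

# np.digitize yields 0..3 for three edges; adding 1 gives classes 1..4.
# The same training-based edges are reused for the test target.
y_train_cls = np.digitize(y_train.to_numpy(), edges) + 1
y_test_cls = np.digitize(y_test.to_numpy(), edges) + 1
```

With this approach the training classes are balanced by construction, while the test classes need not be, since the test returns are binned with boundaries they did not influence.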

Or: would it be acceptable to estimate the quartiles on the whole dataset and then classify all target observations based on them? But then I would have a data leakage problem, right?
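If leakage is indeed the concern, I assume the same logic would have to apply inside the cross-validation as well, i.e. the quartiles would be re-estimated on the training part of every fold. Below is a rough sketch of that with a manual `KFold` loop; the synthetic data and the choice of `LogisticRegression` are only placeholders, and for time-ordered returns a time-aware splitter such as `TimeSeriesSplit` might be more appropriate than a shuffled `KFold`.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

rng = np.random.default_rng(0)

# Synthetic features and numeric target, standing in for the real data.
X = rng.normal(size=(600, 3))
y = 0.5 * X[:, 0] + rng.normal(size=600)

fold_scores = []
cv = KFold(n_splits=5, shuffle=True, random_state=0)
for train_idx, val_idx in cv.split(X):
    # Quartile edges come from this fold's training part only.
    edges = np.quantile(y[train_idx], [0.25, 0.5, 0.75])

    # Bin both parts with the same edges to get classes 1..4.
    y_tr = np.digitize(y[train_idx], edges) + 1
    y_val = np.digitize(y[val_idx], edges) + 1

    clf = LogisticRegression(max_iter=1000).fit(X[train_idx], y_tr)
    fold_scores.append(clf.score(X[val_idx], y_val))

print(np.mean(fold_scores))
```

This works, but it bypasses `cross_val_score` and `Pipeline`, which is why I am asking whether sklearn offers a cleaner way to do the same thing.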

Happy for any help.