I intuitively understand why the hazard function is interesting to link to patient attributes, as explained in this answer. However, is there a mathematical reason this is done? I'm particularly familiar with generalized linear models, where a link function of the expected value is modeled as linear in the covariates. Is there a connection between the expected value in exponential dispersion families and the hazard function? Perhaps through the log partition function somehow?

2 Answers

There are a few relevant factors historically.

At the population level, the progress from describing disease in counts to proportions to rates was based on non-mathematical grounds, but the relationship to the Poisson process does make inference easier. And for the Poisson process the hazard is the same as the rate, which relates simply to a generalised linear model for the mean.
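One way to see the Poisson connection concretely is the constant-hazard case. The following is a minimal sketch, assuming exponential survival times with hazard $\exp(\beta_0 + \beta_1 x)$ and a fixed censoring time; the function names and the simulated data are illustrative assumptions, not part of the original answer. It checks numerically that the censored-survival log-likelihood and the log-likelihood of a Poisson GLM for the event indicator (log link, offset equal to log follow-up time) differ only by a constant that does not involve the coefficients, so both give the same estimates.

```python
import math
import random


def simulate(n, beta0, beta1, censor_time, seed=0):
    """Simulate right-censored exponential survival data for two groups (x = 0 or 1)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        x = i % 2
        hazard = math.exp(beta0 + beta1 * x)  # constant hazard for this subject
        event_time = rng.expovariate(hazard)
        t = min(event_time, censor_time)  # observed follow-up time
        d = 1 if event_time <= censor_time else 0  # 1 = event, 0 = censored
        data.append((t, d, x))
    return data


def survival_loglik(data, beta0, beta1):
    """Censored exponential log-likelihood: sum of d*log(hazard) - hazard*t."""
    total = 0.0
    for t, d, x in data:
        log_hazard = beta0 + beta1 * x
        total += d * log_hazard - math.exp(log_hazard) * t
    return total


def poisson_loglik(data, beta0, beta1):
    """Poisson log-likelihood for d with log(mean) = log(t) + beta0 + beta1*x."""
    total = 0.0
    for t, d, x in data:
        log_mu = math.log(t) + beta0 + beta1 * x  # log(t) acts as the offset
        total += d * log_mu - math.exp(log_mu) - math.lgamma(d + 1)
    return total


data = simulate(n=200, beta0=-1.0, beta1=0.5, censor_time=3.0)

# Evaluate both log-likelihoods at several arbitrary coefficient values.
grid = [(-2.0, 0.0), (-1.0, 0.5), (0.0, 1.0), (0.5, -1.0)]
gaps = [poisson_loglik(data, b0, b1) - survival_loglik(data, b0, b1) for b0, b1 in grid]

# The gap should always equal sum(d * log(t)), which is free of the coefficients.
constant = sum(d * math.log(t) for t, d, _ in data)
print(gaps)
print(constant)
```

The same argument extends to a piecewise-constant hazard by splitting each subject's follow-up into intervals and treating each interval as a Poisson observation with its own log-exposure offset.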

The other key motivation is the Cox model, and it's mathematical but in a kind of sociological way. The Cox partial likelihood estimator lets you have an infinite-dimensional parameter in the model but estimate the hazard ratios without estimating the infinite-dimensional parameter. What's more, there is almost no loss of efficiency relative to using a parametric baseline hazard. Besides being popular because it looked (a bit misleadingly) like a way of making weaker assumptions in data analysis, it was popular because it was mathematically interesting. People wanted to understand it and to do the same thing for other semiparametric models. We now do understand it, but there are still few or no other examples of efficient estimators in semiparametric models that don't require estimating the infinite-dimensional parameter.

So, mathematically it's not so much the hazard that's interesting as the partial likelihood estimator.
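As a sketch of why the infinite-dimensional parameter drops out (the notation is assumed here, not taken from the discussion above: $h_0$ is the baseline hazard, $x_i$ the covariates of subject $i$, $t_i$ an observed event time, $\delta_i$ the event indicator, $R(t_i)$ the set of subjects still at risk at $t_i$, and event times are untied): under $h(t \mid x) = h_0(t)\exp(x^T\beta)$, the probability that subject $i$ is the one who fails at $t_i$, given that exactly one subject in the risk set fails then, is

$$\frac{h_0(t_i)\exp(x_i^T\beta)}{\sum_{j \in R(t_i)} h_0(t_i)\exp(x_j^T\beta)} = \frac{\exp(x_i^T\beta)}{\sum_{j \in R(t_i)} \exp(x_j^T\beta)}.$$

The baseline hazard cancels, and the partial likelihood is the product of these terms over the observed events:

$$L(\beta) = \prod_{i:\,\delta_i = 1} \frac{\exp(x_i^T\beta)}{\sum_{j \in R(t_i)} \exp(x_j^T\beta)}.$$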


Dear Thomas, I appreciate semiparametric theory, but my question is more focused on the content in your first paragraph. If possible, could you provide references concerning the last sentence there on the Poisson process connection and possibly elaborate on it? For a Poisson (GAM) model, you could model e.g. $\log \mu_i = f(t_i) + x_i^T\beta$, where $t$ is some covariate, $f$ is an unknown function, and $x$ are other covariates. How does this specification with $\mu$ connect to the hazard rate in Cox regression? – user551504 Sep 07 '21 at 01:43

Just to clarify, can we rephrase the question as follows: "Why is the conditional hazard used as the response in the Cox model?" In which case, I would say that the answer is that this is an assumption about the data: that the impact of the predictors is to act multiplicatively on the hazard function. In other words, the hazard ratio between any two values of the predictors is constant over time.

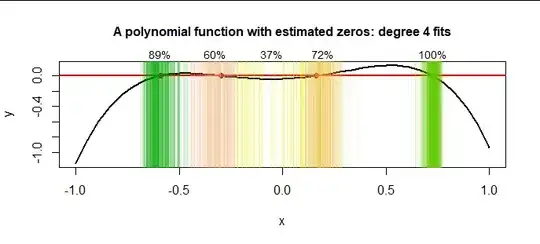

As an example, say we were analyzing survival time T, and included group status (X) as a predictor. Then we make the assumption that there is a baseline hazard function that describes how the hazard changes with time, T. The impact of the predictor X is to scale up or down the entire hazard

function. It could look something like this:

Here are some details from Computer Age Statistical Inference, p. 144.

In summary, in most GLMs, we start with the assumption that the impact of predictors is to act additively on the conditional mean (or on a value that is connected to the mean via a link function). However, in the Cox model, we start with the assumption that the impact of the predictors is to act multiplicatively on the baseline hazard.
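Written side by side, the two starting assumptions look like this (a sketch in assumed notation: $g$ is the GLM link function, $h_0$ the unspecified baseline hazard, and $\beta$ the regression coefficients):

$$\text{GLM:}\quad g\big(E[Y \mid x]\big) = x^T\beta, \qquad \text{Cox:}\quad h(t \mid x) = h_0(t)\exp(x^T\beta).$$

Taking logs of the Cox form gives $\log h(t \mid x) = \log h_0(t) + x^T\beta$, so the predictors are additive on the log-hazard scale. The ratio of hazards for two covariate values,

$$\frac{h(t \mid x_1)}{h(t \mid x_2)} = \exp\big((x_1 - x_2)^T\beta\big),$$

does not involve $t$, which is the proportional hazards property.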

As a side note, the Cox model is popular because it allows us to estimate hazard ratios even though we never actually specify the form of the baseline hazard function.
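A small numerical check of this point, as a minimal sketch: the function `cox_partial_loglik`, the single binary covariate, and the simulated data below are illustrative assumptions, and tied event times are assumed away. The Cox partial log-likelihood uses only the order of the observed times, so applying an increasing transformation to the time axis (which changes the baseline hazard) leaves it unchanged at every value of the coefficient.

```python
import math
import random


def cox_partial_loglik(times, events, x, beta):
    """Cox partial log-likelihood for one covariate, assuming no tied event times."""
    total = 0.0
    for i, (t_i, d_i) in enumerate(zip(times, events)):
        if d_i == 0:
            continue  # censored subjects contribute only through risk sets
        # Risk set at t_i: everyone whose observed time is at least t_i.
        risk = sum(math.exp(beta * x[j]) for j, t_j in enumerate(times) if t_j >= t_i)
        total += beta * x[i] - math.log(risk)
    return total


rng = random.Random(1)
n = 40
x = [i % 2 for i in range(n)]  # group indicator
true_beta = 0.7

# Exponential event times with hazard exp(true_beta * x), plus independent censoring.
event_times = [rng.expovariate(math.exp(true_beta * xi)) for xi in x]
censor_times = [rng.expovariate(0.3) for _ in range(n)]
times = [min(e, c) for e, c in zip(event_times, censor_times)]
events = [1 if e <= c else 0 for e, c in zip(event_times, censor_times)]

# Cubing the times changes the baseline hazard but preserves the ordering.
warped = [t ** 3 for t in times]

betas = [-1.0, 0.0, 0.5, 1.0]
original = [cox_partial_loglik(times, events, x, b) for b in betas]
transformed = [cox_partial_loglik(warped, events, x, b) for b in betas]
print(original)
print(transformed)
```

Because the two lists agree, any estimate of the coefficient obtained by maximizing this function is the same on either time scale, without the baseline hazard ever being written down.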


Hi Nayef, I appreciate that these are just different assumptions and think this is a great overview of Cox regression. However, I'm specifically wondering about a broader theory that connects these two classes of models. – user551504 Sep 07 '21 at 01:52


Thanks, and I apologize for the misunderstanding. I'm afraid your question is then outside my range of expertise! – Nayef Sep 07 '21 at 01:58