Let's say we want to estimate a growth rate from noisy data. Because of the noise, the simple calculation gives very poor estimates (computing growth rates amplifies the noise), so some smoothing is needed.

Up to this point it is straightforward, but the question is how to carry out the smoothing. Should we first smooth the data and then calculate the growth rate, or the other way around, first calculate the growth rate and then smooth those values?
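A quick way to see why the naive calculation is so noisy: if $\log y_t = m_t + \varepsilon_t$ with independent noise $\varepsilon_t$ of variance $\sigma^2$, then the naive growth rate $\log y_t - \log y_{t-1} = (m_t - m_{t-1}) + (\varepsilon_t - \varepsilon_{t-1})$ carries noise of variance $2\sigma^2$, while the signal $m_t - m_{t-1}$ is usually small. The sketch below checks this numerically under hypothetical settings that are not the data used later: constant 1% growth per step and Gaussian noise with sd 0.05 on the log scale.

```r
# Hypothetical setup (not the question's data): constant 1% growth per step,
# i.i.d. Gaussian noise with sd 0.05 on the log scale
set.seed(42)
n <- 500
true_log <- log(100) + 0.01 * seq_len(n)
eps <- rnorm(n, sd = 0.05)
obs_log <- true_log + eps

# Naive log growth rate: first difference of the observed log level
gr_naive <- diff(obs_log)

noise_level  <- sd(eps)                   # noise sd in the log level
noise_growth <- sd(gr_naive - 0.01)       # noise sd in the growth rate (true growth is 0.01)
ratio <- noise_growth / noise_level       # close to sqrt(2) for i.i.d. noise

print(c(noise_level = noise_level, noise_growth = noise_growth,
        ratio = ratio, true_growth = 0.01))
```

With these settings the expected noise sd of the growth rate is about $0.05\sqrt{2} \approx 0.07$, roughly seven times the true growth rate of 0.01, which is why some smoothing is unavoidable.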

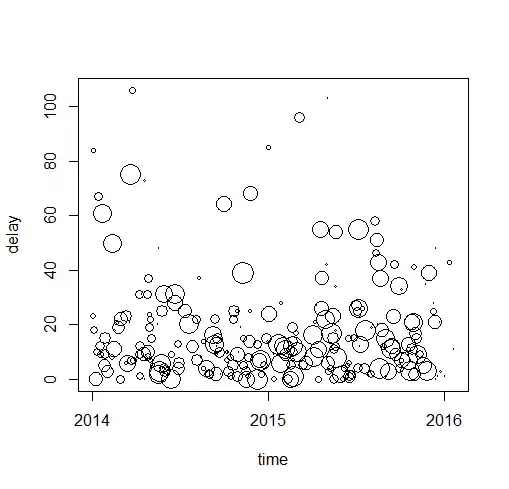

Here is a small illustration. First, we generate a simulated dataset with a sufficiently complex structure and some noise:

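```r
library(ggplot2)
library(data.table)

set.seed(1)

SimData <- data.table(x = 1:501)

# Noise-free signal: slow oscillation + quadratic trend + fast oscillation
SimData$y <- sin(SimData$x/30)
SimData$y <- 200*SimData$y - 0.01*SimData$x^2 + 10*SimData$x + 20
SimData$y <- SimData$y + 50*sin(SimData$x/5)

# True one-step log growth rate, computed before the noise is added
SimData$TrueGR <- log(SimData$y/c(NA, SimData$y[-nrow(SimData)]))

# Observed counts: Gaussian noise (sd = 50), folded to be positive, shifted by 1 and rounded
SimData$y <- round(abs(rnorm(nrow(SimData), SimData$y, 50)) + 1)

plot(SimData$x, SimData$y, type = "l")
```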
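Written out, the simulation code above generates the noise-free mean $\mu(x)$, the true growth rate and the observations as follows (this is only a transcription of the code, with $\varepsilon_x$ denoting the Gaussian noise):

$$
\mu(x) = 200\sin\left(\frac{x}{30}\right) - 0.01x^2 + 10x + 20 + 50\sin\left(\frac{x}{5}\right), \qquad x = 1, \dots, 501
$$

$$
\text{TrueGR}(x) = \log\frac{\mu(x)}{\mu(x-1)}, \qquad x = 2, \dots, 501
$$

$$
y(x) = \operatorname{round}\bigl(\lvert \mu(x) + \varepsilon_x \rvert + 1\bigr), \qquad \varepsilon_x \sim N(0, 50^2)
$$

Because the noise has a constant standard deviation of 50 on the count scale, its relative size, roughly $50/\mu(x)$, is largest where $\mu(x)$ is small, i.e. at the start of the series.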

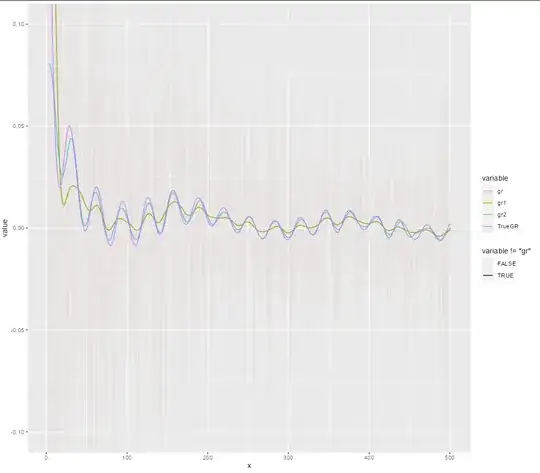

Then we try to estimate the growth rate both ways:

```r
# Approach 1: compute the raw log growth rate, then smooth it
SimData$gr <- log(SimData$y/c(NA, SimData$y[-nrow(SimData)]))
SimData <- SimData[-1, ]   # drop the first row, whose growth rate is NA
SimData$gr1 <- predict(mgcv::gam(gr ~ s(x, k = 50), data = SimData),
                       type = "response")

# Approach 2: smooth the counts (negative binomial GAM), then compute the growth rate
SimData$SmoothY <- predict(mgcv::gam(y ~ s(x, k = 50), data = SimData,
                                     family = mgcv::nb()),
                           type = "response")
SimData$gr2 <- log(SimData$SmoothY/c(NA, SimData$SmoothY[-nrow(SimData)]))

# Plot the raw growth rate (faded), both smoothed estimates and the truth
ggplot(melt(SimData[, .(x, gr, gr1, gr2, TrueGR)], id.vars = "x"),
       aes(x = x, y = value, group = variable, color = variable,
           alpha = variable != "gr")) +
  geom_line() + coord_cartesian(ylim = c(-0.1, 0.1))
```
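The one-step log growth rate is computed in the code above as `log(v / c(NA, v[-length(v)]))`. This is the same as the first difference of `log(v)`, so `gr2` is a discrete derivative of the smooth on the log scale, while `gr1` smooths the noisy differences directly. A small sketch of the equivalence follows; the helper names are illustrative and do not appear in the original code, and `data.table::shift()` is shown as a third way to write the same thing.

```r
library(data.table)

# The growth-rate expression used in the question, as a (hypothetical) helper
log_growth_lag <- function(v) log(v / c(NA, v[-length(v)]))

# Two equivalent formulations: first difference of log(v), and data.table::shift()
log_growth_diff  <- function(v) c(NA, diff(log(v)))
log_growth_shift <- function(v) log(v / shift(v, n = 1L, type = "lag"))

v <- c(100, 110, 99, 120)
print(rbind(lag   = log_growth_lag(v),
            diff  = log_growth_diff(v),
            shift = log_growth_shift(v)))
```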

It seems that "smooth first, then calculate" tracks the true value better, but I don't know whether this is just an accident of this particular example, let alone whether there is any theory supporting either approach.
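One way to check whether the difference is specific to `set.seed(1)` is to repeat the experiment over several seeds and compare the root-mean-square error of `gr1` and `gr2` against `TrueGR`. This is only an empirical sketch, not a theoretical answer: it reuses the question's signal, noise level and GAM settings, and the choice of RMSE over the rows where both estimates exist is an assumption. The function name `compare_orders` is hypothetical.

```r
library(mgcv)

# Re-run the question's simulation for one seed and return the RMSE of each
# estimator against the true growth rate (same signal, noise and GAM settings)
compare_orders <- function(seed) {
  set.seed(seed)
  x <- 1:501
  mu <- 200 * sin(x / 30) - 0.01 * x^2 + 10 * x + 20 + 50 * sin(x / 5)
  true_gr <- log(mu / c(NA, mu[-length(mu)]))
  y <- round(abs(rnorm(length(x), mu, 50)) + 1)
  d <- data.frame(x = x, y = y, true_gr = true_gr,
                  gr = log(y / c(NA, y[-length(y)])))[-1, ]

  # Approach 1: growth rate first, then smooth
  gr1 <- as.numeric(predict(gam(gr ~ s(x, k = 50), data = d), type = "response"))

  # Approach 2: smooth the counts first, then compute the growth rate
  smooth_y <- as.numeric(predict(gam(y ~ s(x, k = 50), data = d, family = nb()),
                                 type = "response"))
  gr2 <- log(smooth_y / c(NA, smooth_y[-length(smooth_y)]))

  ok <- !is.na(gr2)  # first element of gr2 is NA; compare on common rows only
  rmse <- function(est) sqrt(mean((est[ok] - d$true_gr[ok])^2))
  c(seed = seed, rmse_gr1 = rmse(gr1), rmse_gr2 = rmse(gr2))
}

res <- as.data.frame(t(sapply(1:10, compare_orders)))
res$gr2_better <- res$rmse_gr2 < res$rmse_gr1
print(res)
print(mean(res$gr2_better))  # share of seeds where smoothing first had lower RMSE
```

The final number only summarizes this particular signal, noise model and smoother; a different choice of `k`, noise structure or error measure could change it.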