Say I have 15 data points (x values) with corresponding y values (say, randomly generated). For learning purposes, I am trying to design a neural network that matches the input data perfectly, i.e. overfits as much as possible. I can't get this to work beyond a certain point.

I've run millions of epochs and tried networks with 1 to about 4 layers, each with anywhere from 1 to thousands of neurons, using ReLU or sigmoid activations for non-linearity. Despite all this, it still won't overfit. Am I missing something? Is it even possible when I have only one input?

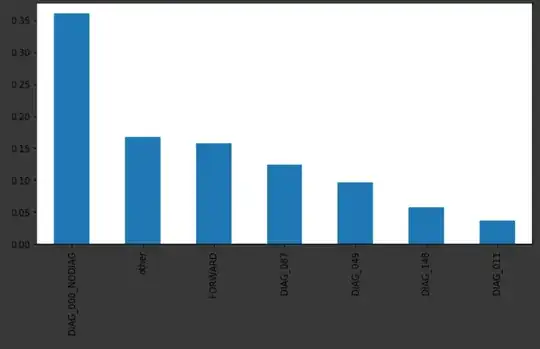

For example, this is the output of a network with layer sizes 1, 2048, 2048, 2048, 1 and sigmoid activations after 200k epochs (practically no change since 60k):

This does not look like overfitting at all. I'm confused and would appreciate any pointers. Maybe I need some "funny" activation function?
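On whether this is possible with a single input at all: below is a minimal pure-Python sketch (hand-built weights, not trained, and not the network from the plot) showing that a one-input network with a single ReLU hidden layer can pass exactly through every point. It assumes the x values are distinct; the helper names `build_interpolating_relu_net` and `forward` are hypothetical. Hidden neuron k computes `relu(x - x_k)`, and its output weight is the change in slope at that point, so the network reproduces the piecewise-linear curve through all 15 points using 14 neurons. This only shows that such weights exist; whether gradient descent finds them is a separate question.

```python
import random


def relu(z):
    return max(0.0, z)


def build_interpolating_relu_net(xs, ys):
    """Hand-construct a 1-input, 1-hidden-layer ReLU net through all (x, y).
    Assumes the x values are distinct."""
    pts = sorted(zip(xs, ys))
    px = [p[0] for p in pts]
    py = [p[1] for p in pts]
    # Slope of each linear segment between consecutive sorted points.
    slopes = [(py[i + 1] - py[i]) / (px[i + 1] - px[i]) for i in range(len(px) - 1)]
    # Hidden neuron k: input weight 1, bias -px[k], so it "switches on" at px[k].
    biases = [-px[k] for k in range(len(slopes))]
    # Output weight k = change in slope at px[k]; these telescope to the segment slopes.
    out_w = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, len(slopes))]
    out_b = py[0]  # value at the leftmost point
    return biases, out_w, out_b


def forward(x, biases, out_w, out_b):
    hidden = [relu(x + b) for b in biases]
    return out_b + sum(w * h for w, h in zip(out_w, hidden))


random.seed(0)
xs = [v / 10 for v in random.sample(range(100), 15)]  # 15 distinct x values
ys = [random.uniform(-1, 1) for _ in xs]              # random targets

net = build_interpolating_relu_net(xs, ys)
max_err = max(abs(forward(x, *net) - y) for x, y in zip(xs, ys))
print(f"hidden neurons: {len(net[0])}, max abs error: {max_err:.2e}")
```

The error is at floating-point rounding level, so capacity is not the obstacle here.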

Update

Thanks for all the suggestions in the comments. After digging in a bit more, here are my takeaways:

- Use full-batch gradient descent, not mini-batch SGD. I was already doing that, though: if `SGD` in torch is fed the entire dataset at every step, it performs full-batch gradient descent.

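- My loss function was MSE, i.e. the optimizer minimized the mean of the squared errors. For this purpose, summing the squared errors instead (e.g. `torch.nn.MSELoss(reduction='sum')`) works better. The sum is N times the mean, so with plain SGD it multiplies the gradients, and hence the effective learning rate, by N (15 here). With the mean, the same learning rate takes 15× smaller steps, which here sort of works against overfitting.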

- Some datasets cannot be represented by a function at all: there might be two identical inputs assigned different outputs (e.g. 3 -> 9 and 3 -> 7 due to randomness). Obviously no NN can capture this; with a squared-error loss, the best it can do is predict the mean of the conflicting targets (8 in this example).
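The last two takeaways can be checked with a small pure-Python sketch. It is not the original torch code: it assumes a hypothetical linear model `y_hat = w * x + b` and hypothetical helpers `mse_grad` and `fit_constant`, with every gradient computed over the full batch. The first part shows that summing instead of averaging scales the gradient by exactly N = 15; the second shows that when one input has targets 9 and 7, minimizing the mean squared error drives the prediction to 8 rather than to either target.

```python
import random


def mse_grad(w, b, xs, ys, reduction="mean"):
    """Full-batch gradient of the squared error for the hypothetical model
    y_hat = w * x + b, either averaged ("mean") or summed ("sum")."""
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = (w * x + b) - y
        gw += 2 * err * x  # d(err^2)/dw
        gb += 2 * err      # d(err^2)/db
    if reduction == "mean":
        gw, gb = gw / len(xs), gb / len(xs)
    return gw, gb


def fit_constant(targets, lr=0.1, steps=200):
    """Gradient descent on the mean squared error of one constant prediction c,
    i.e. what a deterministic model can output for a single repeated input."""
    c = 0.0
    for _ in range(steps):
        grad = sum(2 * (c - t) for t in targets) / len(targets)
        c -= lr * grad
    return c


random.seed(1)
xs = [random.uniform(0, 1) for _ in range(15)]
ys = [random.uniform(-1, 1) for _ in range(15)]

g_mean = mse_grad(0.5, 0.0, xs, ys, reduction="mean")
g_sum = mse_grad(0.5, 0.0, xs, ys, reduction="sum")
# The summed gradient is 15x the averaged one (up to rounding).
print(g_sum[0] / g_mean[0], g_sum[1] / g_mean[1])

# Input 3 appears twice with targets 9 and 7: the best compromise is their mean.
c = fit_constant([9.0, 7.0])
print(round(c, 6))
```

Because the sum only rescales the gradient, the same effect could in principle be had by keeping the mean and raising the SGD learning rate by N.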