The main answer by Tim tells you about the uniform distribution as conceived in the traditional framework of probability theory, and it explains this by appeal to the asymptotics of density functions. In this answer I will give a more traditional explanation that goes back to the underlying axioms of probability within the standard framework, and I will also explain how one might obtain the distribution of interest within alternative probability frameworks. My own view is that some generalised frameworks for probability are reasonable extensions of the standard one and can be used in this case, so I would go so far as to say that the uniform distribution on the reals is a valid distribution.

Why can't we have a uniform distribution on the reals (within the standard framework)?

Firstly, let us note the mathematical rules of the standard framework of probability theory (i.e., representing probability as a probability measure satisfying the Kolmogorov axioms). In this framework, probabilities of events are represented by real numbers, and the probability measure must obey three axioms: (1) non-negativity; (2) norming (i.e., unit probability on the sample space); and (3) countable additivity.

Within this framework, it is not possible to obtain a uniform random variable on the real numbers. The problem comes from the fact that we can partition the set of real numbers into a countably infinite set of bounded parts that have equal width. For example, we can write the real numbers as the following union of disjoint sets:

$$\mathbb{R} = \bigcup_{a \in \mathbb{Z}} [a, a+1).$$

Consequently, if the norming axiom and the countable additivity axiom both hold, then we must have:

$$\begin{align}
1
&= \mathbb{P}(X \in \mathbb{R}) \\[6pt]
&= \mathbb{P} \Bigg( X \in \bigcup_{a \in \mathbb{Z}} [a, a+1) \Bigg) \\[6pt]
&= \sum_{a \in \mathbb{Z}} \mathbb{P} ( a \leqslant X < a+1).
\end{align}$$

Now, under uniformity, we would want the probability $p \equiv \mathbb{P} ( a \leqslant X < a+1)$ to be a fixed value that does not depend on $a$. This means that we must have $\sum_{a \in \mathbb{Z}} p = 1$, and there is no real number $p \in \mathbb{R}$ that satisfies this equation. Another way to look at this is, if we set $p=0$ and apply countable additivity then we get $\mathbb{P}(X \in \mathbb{R}) = 0$ and if we set $p>0$ and apply countable additivity then we get $\mathbb{P}(X \in \mathbb{R}) = \infty$. Either way, we break the norming axiom.
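The dichotomy above can be illustrated numerically with a minimal sketch. The function name `partial_sum` and the truncation of the sum to $a \in \{-n, \dots, n\}$ are assumptions made purely for illustration; the argument itself concerns the full infinite sum.

```python
def partial_sum(p: float, n: int) -> float:
    """Sum the constant value p over the 2n + 1 intervals [a, a+1), a = -n, ..., n.

    Hypothetical helper: it truncates the countable sum over the integers
    to a symmetric finite window so that it can be evaluated.
    """
    return sum(p for _ in range(-n, n + 1))


if __name__ == "__main__":
    # With p = 0 every partial sum is zero; with any fixed p > 0 the
    # partial sums grow without bound as the window widens.
    for p in (0.0, 1e-6):
        sums = [partial_sum(p, n) for n in (10, 10**3, 10**6)]
        print(f"p = {p}: partial sums = {sums}")
```

With $p = 0$ the partial sums never leave zero, and with any fixed $p > 0$ they eventually exceed one, so no real value of $p$ makes the total equal to one.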

Operational difficulties with the uniform distribution over the reals

Before examining alternative probability frameworks, it is also worth noting some operational difficulties that would apply to the uniform distribution over the reals even if we can define it validly. One of the requirements of the distribution is that:

$$\mathbb{P}(X \in \mathcal{A} \mid X \in \mathcal{B}) = \frac{|\mathcal{A} \cap \mathcal{B}|}{|\mathcal{B}|}.$$

(We use $| \ \cdot \ |$ to denote the Lebesgue measure of a set.) Consequently, for any $0<a<b$ we have:

$$\mathbb{P}(|X| \leqslant a) \leqslant \mathbb{P}(|X| \leqslant a \mid |X| \leqslant b) = \frac{a}{b}.$$

Since this inequality holds for every $b > a$, we can take $b = a/\epsilon$ with $\epsilon$ arbitrarily small (so that $b$ is arbitrarily large), which gives:

$$\mathbb{P}(|X| \leqslant a) \leqslant \epsilon \quad \quad \quad \text{for all } \epsilon>0.$$

If the probability is a real value then this implies that $\mathbb{P}(|X| \leqslant a)=0$ for all $a>0$, but even if we use an alternative framework allowing infinitesimals (see below), we can still say that this probability is smaller than any positive real number. Essentially this means that under the uniform distribution over the reals, for any specified positive real number, we will "almost surely" get a value whose magnitude is larger than this. Intuitively, this means that the uniform distribution over the reals will always give values that are "infinitely large" in a certain sense.

This requirement of the distribution means that there are constructive problems when dealing with this distribution. Even if we work within a probability framework where this distribution is valid, it will be "non-constructive" in the sense that we will be unable to create a computational facility that can generate numbers from the distribution.
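As a rough illustration of the bound $\mathbb{P}(|X| \leqslant a) \leqslant a/b$, the sketch below looks at ordinary uniform distributions on $[-b, b]$ for increasing $b$. The helper names `prob_within` and `simulate_within`, the seed, and the number of draws are assumptions chosen for illustration; each finite-width distribution is only a stand-in, since the uniform distribution on the whole real line cannot be sampled.

```python
import random


def prob_within(a: float, b: float) -> float:
    """Exact P(|X| <= a) for X ~ Uniform(-b, b), assuming 0 < a < b."""
    return a / b


def simulate_within(a: float, b: float, n_draws: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(|X| <= a) for X ~ Uniform(-b, b)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    hits = sum(abs(rng.uniform(-b, b)) <= a for _ in range(n_draws))
    return hits / n_draws


if __name__ == "__main__":
    a = 1.0
    for b in (10.0, 1e3, 1e6):
        print(f"b = {b:g}: exact = {prob_within(a, b):g}, "
              f"simulated = {simulate_within(a, b):g}")
```

As $b$ grows, the probability of landing within any fixed distance $a$ of zero shrinks towards zero, which is the behaviour the inequality forces on the limiting case.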

What alternative probability frameworks can we use to get around this?

In order to allow a uniform distribution on the real numbers, we obviously need to relax one or more of the rules of the standard probability framework. There are a number of ways we could do this which would allow a uniform distribution on the reals, but they all have some other potential drawbacks. Here are some of the possibilities for how we might generalise the standard framework.

Allow infinitesimal probabilities: One possibility is to relax the requirement that a probability must be a real value, and instead extend this to allow infinitesimals. If we allow this, we then set $dp \equiv \mathbb{P} ( a \leqslant X < a+1)$ to be an infinitesimal value that satisfies the requirements $\sum_{a \in \mathbb{Z}} dp = 1$ and $dp \geqslant 0$. With this extension we can keep all three of the probability axioms in the standard theory, with the non-negativity axiom suitably extended to recognise non-negative infinitesimal numbers.

Probability frameworks allowing infinitesimal probabilities exist and have been examined in the philosophy and statistical literature (see e.g., Barrett 2010, Hofweber 2014 and Benci, Horsten and Wenmackers 2018). There are also broader non-standard frameworks of measure theory that allow infinitesimal measures, and infinitesimal probability can be regarded as a part of that broader theory.

In my view, this is quite a reasonable extension to standard probability theory, and its only real drawback is that it is more complicated, and it requires users to learn about infinitesimals. Since I regard this as a perfectly legitimate extension of probability theory, I would go so far as to say that the uniform distribution on the real numbers does exist since the user can adopt this broader framework to use that distribution.

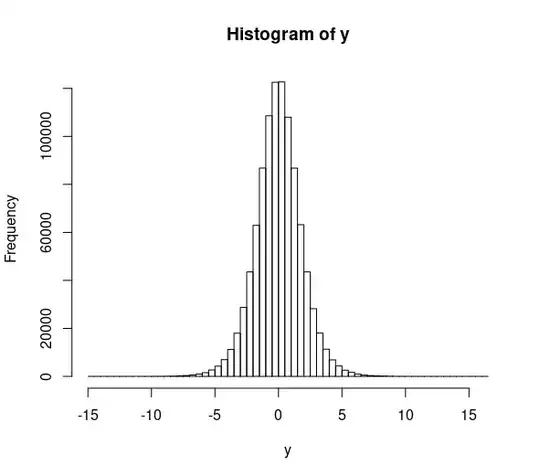

Allow "distributions" that are actually limits of sequences/classes of distributions: Another possibility for dealing with the uniform distribution on the real numbers is to define it via a limit of a sequence/class of distributions. Traditionally, this is done by looking at a sequence of normal distributions with zero mean and increasing variance, and taking the uniform distribution to be the limit of this sequence of distributions as the variance approaches infinity (see related answer here). (There are many other ways you could define the distribution as a limit.)

This method extends our conception of what constitutes a "distribution" and a corresponding "random variable" but it can be framed in a way that is internally consistent and constitutes a valid extension to probability theory. By broadening the conception of a "random variable" and its "distribution" this also allows us to preserve all the standard axioms. In the above treatment, we would create a sequence of standard probability distributions $F_1,F_2,F_3,\ldots$ where the values $p_n(a) = \mathbb{P} ( a \leqslant X < a+1 \mid X \sim F_n)$ depend on $a$, but where the ratios of these terms all converge to unity in the limit $n \rightarrow \infty$.

The advantage of this approach is that it allows us to preserve the standard probability axioms (just like in infinitesimal probability frameworks). The main disadvantage is that it leads to some tricky issues involving limits, particularly with regard to the interchange of limits in various equations involving non-standard distributions.
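The convergence of the ratios of the interval probabilities can be checked with a small sketch using zero-mean normal distributions with increasing standard deviation, as in the limiting construction described above. The function names `normal_cdf`, `interval_prob` and `ratio`, and the particular values of the standard deviation, are assumptions chosen for illustration.

```python
import math


def normal_cdf(x: float, sigma: float) -> float:
    """CDF of a N(0, sigma^2) distribution, written via the error function."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def interval_prob(a: int, sigma: float) -> float:
    """P(a <= X < a + 1) for X ~ N(0, sigma^2)."""
    return normal_cdf(a + 1, sigma) - normal_cdf(a, sigma)


def ratio(a: int, sigma: float) -> float:
    """Ratio of the probability of [a, a+1) to the probability of [0, 1)."""
    return interval_prob(a, sigma) / interval_prob(0, sigma)


if __name__ == "__main__":
    # For small sigma the unit intervals have very different probabilities;
    # as sigma grows the ratios approach one.
    for sigma in (1.0, 10.0, 100.0, 1000.0):
        ratios = [ratio(a, sigma) for a in (1, 5, 10)]
        print(f"sigma = {sigma:g}: ratios = {ratios}")
```

Each distribution in the sequence is a perfectly standard one with interval probabilities that depend on $a$; only the ratios flatten out as the variance increases.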

Replace the countable additivity with finite additivity: Another possibility is that we could scrap the countable additivity axiom and use the (weaker) finite additivity axiom instead. In this case we can set $p = \mathbb{P} ( a \leqslant X < a+1) = 0$ for all $a \in \mathbb{Z}$ and still have $\mathbb{P}(X \in \mathbb{R}) = 1$ to satisfy the norming axiom. (In this framework, the mathematical equations in the problem above do not apply since countable additivity no longer holds.)

Various probability theorists (notably Bruno de Finetti) have worked within the framework of this broader set of probability axioms and some still argue that it is superior to the standard framework. The main disadvantage of this broader framework of probability is that a lot of limiting results in probability are no longer valid, which wipes away a lot of useful asymptotic theory that is available in the standard framework.