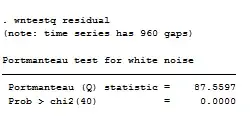

I have repeated-measures data from n_subjects subjects, each measured n_obs times before and n_obs times after some intervention, and the subjects belong to one of two experimental groups. I am interested in the effect of group on the change from pre to post, so the model looks something like:

y ~ time + group:time

and I am interested in the group:time interaction.

time is a numeric column coded 0 for the pre timepoint and 1 for the post timepoint; group is a factor.
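To make this parameterisation concrete, here is a small sketch on hypothetical toy data (4 subjects, one pre and one post row each; the group labels "A" and "B" are placeholders) showing the design matrix R builds for y ~ time + group:time when time is numeric and group is a factor. Because time is in the model, R codes group by treatment contrasts inside the interaction, so there is a single interaction column whose coefficient is the difference in pre-to-post change between group B and the reference group A.

```r
# Hypothetical toy data: 4 subjects (2 per group), one pre (time = 0) and
# one post (time = 1) row each. Group labels "A"/"B" are placeholders.
d <- expand.grid(time = c(0, 1), subject = 1:4)
d$group <- factor(ifelse(d$subject <= 2, "A", "B"))

# Design matrix implied by y ~ time + group:time (the response is not needed)
X <- model.matrix(~ time + group:time, data = d)
print(colnames(X))

# time is in the model, so group is coded by treatment contrasts inside the
# interaction: the single interaction column equals time * (group == "B"),
# i.e. the extra pre-to-post change in group B relative to group A.
print(cbind(d, time_x_B = X[, "time:groupB"]))
```

The same coding applies to the sigma formula, so the group:time coefficient there is a group difference in the change of log(sigma).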

One of the models, whose performance I otherwise like, has 95% CI coverage that decreases as the number of observations at each timepoint increases, but does not change with the number of subjects.

(The horizontal line is at y = 0.95, the nominal coverage. There are 150 simulations for each combination of n_subjects and n_obs, and error bars show Monte Carlo standard errors.)
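For reference, coverage and its Monte Carlo standard error can be computed as below, following the definitions in Tim Morris' paper. This is a minimal sketch: est, se and theta are hypothetical names for the per-simulation estimates, their model-based standard errors and the true value, and it assumes Wald-type intervals (estimate ± 1.96 SE), which may differ from how the intervals in the plot were built.

```r
# Coverage of nominal intervals and its Monte Carlo SE.
# Assumes Wald-type intervals est +/- z * se (an assumption, see above).
coverage_mcse <- function(est, se, theta, level = 0.95) {
  z <- qnorm(1 - (1 - level) / 2)          # 1.96 for level = 0.95
  lower <- est - z * se
  upper <- est + z * se
  n_sim <- length(est)
  cover <- mean(lower <= theta & theta <= upper)
  list(coverage = cover,
       mcse = sqrt(cover * (1 - cover) / n_sim))
}

# Monte Carlo SE if the true coverage were exactly 0.95 with 150 repetitions
print(sqrt(0.95 * 0.05 / 150))             # roughly 0.0178
```

With 150 repetitions per scenario, the Monte Carlo SE near the nominal level is therefore about 0.018, which gives a sense of how wide the error bars in the plot should be.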

I am struggling to interpret this and to understand what could be changed about the model to improve the coverage. What information is the model missing that makes it overly precise?

The model is not biased in either direction, and ModSE/EmpSE is > 1 but trends towards 1 as both n_subjects and n_obs increase (as I believe it should).

All metrics were calculated using the methods described in Tim Morris' paper here.
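The ModSE/EmpSE ratio mentioned above can be sketched the same way, again following the definitions in Tim Morris' paper: EmpSE is the standard deviation of the estimates across repetitions, ModSE is the square root of the mean estimated variance, and the Monte Carlo SE of EmpSE is EmpSE / sqrt(2(n_sim − 1)). The function name and its inputs est and se are hypothetical.

```r
# Empirical SE, average model-based SE and their ratio.
# `est` and `se` are hypothetical vectors of per-simulation estimates and
# model-based SEs for the sigma-model group:time coefficient.
se_performance <- function(est, se) {
  n_sim <- length(est)
  emp_se <- sd(est)              # sqrt(sum((est - mean(est))^2) / (n_sim - 1))
  mod_se <- sqrt(mean(se^2))     # square root of the mean estimated variance
  list(
    emp_se      = emp_se,
    emp_se_mcse = emp_se / sqrt(2 * (n_sim - 1)),
    mod_se      = mod_se,
    ratio       = mod_se / emp_se,           # > 1: model SEs exceed the spread of estimates
    rel_err_pct = 100 * (mod_se / emp_se - 1)
  )
}
```

A ratio above 1 means the model-based SEs are, on average, larger than the actual spread of the estimates across repetitions.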

More possibly useful details about the model:

The data are log-normally distributed. I am using the gamlss package in R, and the parameter of interest is the group:time interaction in the sigma model, which captures the effect of group on the change in within-subject variability. The model code is:

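library(gamlss)  # provides gamlss() and re(); also loads gamlss.dist, which supplies LOGNO2

model <- gamlss::gamlss(
  formula  = y ~ time + group:time + re(random = ~1 | subject),     # mu (location) model
  sigma.fo = ~ time + group:time + re(random = ~time | subject),    # sigma model; group:time is the parameter of interest
  family   = 'LOGNO2',
  data     = data
)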
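For anyone wanting to reproduce the setup, here is a minimal base-R sketch of a data-generating process with the same structure as the model above. It assumes that LOGNO2's mu is the median of y and sigma is the standard deviation of log(y) (my reading of the gamlss.dist parameterisation), independent normal subject effects, and arbitrary illustrative parameter values; the function simulate_data and all its arguments are hypothetical and are not the simulation code behind the plot.

```r
# Hypothetical data-generating process: log(y) is normal with a subject random
# intercept in the location and a subject random intercept and time slope in
# log(sigma). All parameter values are illustrative assumptions.
simulate_data <- function(n_subjects, n_obs,
                          b_time = 0.2,         # change in log-median, pre -> post (group A)
                          b_group_time = 0,     # extra change in log-median, group B
                          g0 = log(0.3),        # baseline log(sigma)
                          g_time = 0.1,         # change in log(sigma), group A
                          g_group_time = 0.3,   # extra change in log(sigma), group B
                          sd_mu = 0.5,          # SD of subject intercepts (location)
                          sd_sig0 = 0.2,        # SD of subject intercepts in log(sigma)
                          sd_sig1 = 0.2) {      # SD of subject time slopes in log(sigma)
  group <- factor(rep(c("A", "B"), length.out = n_subjects))
  u_mu <- rnorm(n_subjects, 0, sd_mu)
  u_s0 <- rnorm(n_subjects, 0, sd_sig0)
  u_s1 <- rnorm(n_subjects, 0, sd_sig1)

  # One row per subject x timepoint x repeated observation
  d <- expand.grid(obs = seq_len(n_obs), time = c(0, 1),
                   subject = seq_len(n_subjects))
  d$group <- group[d$subject]
  is_b <- as.numeric(d$group == "B")

  log_median <- b_time * d$time + b_group_time * d$time * is_b +
    u_mu[d$subject]
  log_sigma <- g0 + g_time * d$time + g_group_time * d$time * is_b +
    u_s0[d$subject] + u_s1[d$subject] * d$time
  d$y <- rlnorm(nrow(d), meanlog = log_median, sdlog = exp(log_sigma))
  d$subject <- factor(d$subject)
  d
}

set.seed(1)
data <- simulate_data(n_subjects = 20, n_obs = 5)
head(data)
```

The resulting data frame contains the y, time, group and subject columns used in the model call (plus a hypothetical obs index within each timepoint), so varying n_subjects and n_obs reproduces the kind of grid shown in the plot.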