I've been trying to use a CNN for face recognition, and I think my model is overfitting: training is doing well, but the validation loss is not decreasing. I used the Pins Face Recognition dataset, and here is the code I used:

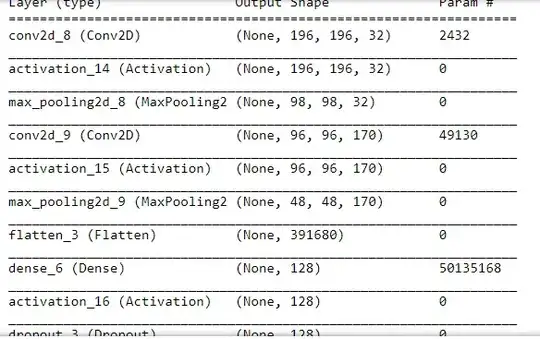

output:

Data preparation:

```python
import glob
import os

import cv2
import numpy as np

# Relative path to the Haar cascade, as in the original script
CASCADE_PATH = os.path.join("data", "haarcascade_frontalface_alt.xml")


def main():
    train_data_dir = r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\train'
    validation_data_dir = r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\val'
    preped_train_data_dir = r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\data_preped\train'
    preped_validation_data_dir = r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\data_preped\val'

    # Only preprocess if neither output folder exists yet
    if not os.path.exists(preped_train_data_dir) and not os.path.exists(preped_validation_data_dir):
        os.makedirs(preped_train_data_dir)
        os.makedirs(preped_validation_data_dir)

        # Load the cascade once instead of once per image
        face_cascade = cv2.CascadeClassifier(CASCADE_PATH)
        if face_cascade.empty():
            raise IOError('Could not load cascade file: ' + CASCADE_PATH)

        prepare_split(train_data_dir, preped_train_data_dir, face_cascade)
        prepare_split(validation_data_dir, preped_validation_data_dir, face_cascade)


def prepare_split(src_dir, dst_dir, face_cascade):
    """Crop and resize the face in every image under src_dir/<class>/."""
    for filename in glob.iglob(os.path.join(src_dir, '**'), recursive=True):
        if not os.path.isfile(filename):  # skip directories
            continue

        # Take file and class names from the path itself instead of fixed
        # split("\\") positions, which break if the folder depth changes
        file_ = os.path.basename(filename)
        file_class = os.path.basename(os.path.dirname(filename))

        img = cv2.imread(filename, cv2.IMREAD_COLOR)
        if img is None:  # not an image, or unreadable
            continue

        face, found_face = detect_faces(face_cascade, img)
        if found_face:
            face = cv2.resize(face, (200, 200))
            class_dir = os.path.join(dst_dir, file_class)
            os.makedirs(class_dir, exist_ok=True)
            cv2.imwrite(os.path.join(class_dir, file_), face)
        else:
            print('Did not find any faces in', filename)


def detect_faces(face_cascade, img, margin=200):
    """Return (grayscale crop around the first detected face, found_flag)."""
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(
        gray,
        scaleFactor=1.1,
        minNeighbors=5,
        minSize=(30, 30),
        flags=cv2.CASCADE_SCALE_IMAGE,
    )

    if len(faces) == 0:
        return np.array([]), False
    if len(faces) > 1:
        print("found multiple faces!")

    # Only use the first face found. Enlarge the box by `margin` on every
    # side (the [x-200, y-200, w+400, h+400] box the original recorded) and
    # clamp it to the image: a negative start index such as y - 200 would
    # otherwise count from the end of the array and crop the wrong region.
    x, y, w, h = faces[0]
    img_h, img_w = gray.shape
    top, left = max(y - margin, 0), max(x - margin, 0)
    bottom, right = min(y + h + margin, img_h), min(x + w + margin, img_w)
    return gray[top:bottom, left:right], True


if __name__ == "__main__":
    main()
```
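The slice `gray[y-200:y+h+400, x-200:x+w+400]` in the original `detect_faces` behaves unexpectedly near the image border, because NumPy treats a negative start index as counting from the end of the array. This NumPy-only sketch (the helpers `naive_crop` and `clamped_crop` are hypothetical, and a synthetic 250x250 image stands in for a real photo) compares that slice with a version clipped to the image bounds:

```python
import numpy as np


def naive_crop(gray, x, y, w, h):
    # The slice used in the original detect_faces
    return gray[y - 200:y + h + 400, x - 200:x + w + 400]


def clamped_crop(gray, x, y, w, h, margin=200):
    # Enlarge the box by `margin` on every side, then clip it to the image
    img_h, img_w = gray.shape[:2]
    top, left = max(y - margin, 0), max(x - margin, 0)
    bottom, right = min(y + h + margin, img_h), min(x + w + margin, img_w)
    return gray[top:bottom, left:right]


# Synthetic 250x250 image with a white 100x100 "face" detected at (50, 50)
gray = np.zeros((250, 250), dtype=np.uint8)
gray[50:150, 50:150] = 255

naive = naive_crop(gray, 50, 50, 100, 100)
clamped = clamped_crop(gray, 50, 50, 100, 100)
print(naive.shape, int((naive == 255).sum()))      # (150, 150) 2500
print(clamped.shape, int((clamped == 255).sum()))  # (250, 250) 10000
```

With the face near the top-left corner, the original slice starts at row and column 100 (that is, -150 + 250), so it keeps only a quarter of the face pixels. Some saved training crops may therefore not contain the whole face. The clamped version assumes a symmetric 200 px margin, matching the `[x-200, y-200, w+400, h+400]` box the original stores in `faces_data`.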

Training:

```python
import os
import uuid

from keras import backend as K
from keras.layers import Activation, Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.models import Sequential
from keras.preprocessing.image import ImageDataGenerator


def main():
    model, class_dictionary, model_uuid = initialTrain(
        img_width=200,
        img_height=200,
        train_data_dir=r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\data_preped\train',
        validation_data_dir=r'C:\Users\USER\Downloads\cnn-identity-classification-master\data\data_preped\val',
        model_directory_path='data/trainedModels',
        epochs=200,
        batch_size=3)


def initialTrain(img_width, img_height, train_data_dir, validation_data_dir,
                 model_directory_path, epochs, batch_size):
    # Keras image tensors are (height, width, channels) or (channels, height, width)
    if K.image_data_format() == 'channels_first':
        input_shape = (3, img_height, img_width)
    else:
        input_shape = (img_height, img_width, 3)

    # Augmentation configuration used for training
    train_datagen = ImageDataGenerator(
        rescale=1. / 255,
        shear_range=0.2,
        zoom_range=0.2,
        horizontal_flip=True)

    # Configuration used for validation: only rescaling
    test_datagen = ImageDataGenerator(rescale=1. / 255)

    # One class per identity, so use integer labels ('sparse'), which is what
    # sparse_categorical_crossentropy expects ('binary' is meant for 2 classes)
    train_generator = train_datagen.flow_from_directory(
        train_data_dir,
        target_size=(img_height, img_width),
        batch_size=batch_size,
        class_mode='sparse')
    class_dictionary = train_generator.class_indices

    # Reuse the training class order so label indices match even if a class
    # folder is missing from the validation set
    validation_generator = test_datagen.flow_from_directory(
        validation_data_dir,
        target_size=(img_height, img_width),
        batch_size=batch_size,
        class_mode='sparse',
        classes=list(class_dictionary))

    model = Sequential()
    model.add(Conv2D(32, (5, 5), input_shape=input_shape))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Conv2D(170, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Flatten())
    model.add(Dense(128))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(len(class_dictionary)))
    model.add(Activation('softmax'))

    model.compile(loss='sparse_categorical_crossentropy',
                  optimizer='SGD',
                  metrics=['accuracy'])

    model.fit(
        train_generator,
        epochs=epochs,
        validation_data=validation_generator)

    os.makedirs(model_directory_path, exist_ok=True)
    model_uuid = str(uuid.uuid1())
    print("Model id: " + model_uuid)
    model.save(os.path.join(model_directory_path, 'model_' + model_uuid + '.h5'))

    # Save the class-name -> index mapping next to the model
    with open(os.path.join(model_directory_path, 'class_indices_file.txt'), 'w') as f:
        f.write(str(class_dictionary))

    return model, class_dictionary, model_uuid


if __name__ == "__main__":
    main()
```
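Part of the train/validation gap may come from model size. This plain-Python sketch counts the trainable parameters of the `Sequential` model above using the Keras defaults the code relies on (stride-1 'valid' convolutions, non-overlapping 2x2 max pooling, one bias per unit). The helper names are hypothetical, and `num_classes=10` is only a placeholder for `len(class_dictionary)`:

```python
def conv_valid(size, kernel):
    # Spatial size after a stride-1 'valid' convolution (Conv2D default)
    return size - kernel + 1


def pool(size, p=2):
    # Spatial size after MaxPooling2D(pool_size=p) with default strides/padding
    return (size - p) // p + 1


def count_params(img_size=200, channels=3, num_classes=10):
    layers = []
    size, depth = img_size, channels

    # Conv2D(32, (5, 5)): kernel weights plus one bias per filter
    layers.append(("conv1", 5 * 5 * depth * 32 + 32))
    size, depth = pool(conv_valid(size, 5)), 32

    # Conv2D(170, (3, 3))
    layers.append(("conv2", 3 * 3 * depth * 170 + 170))
    size, depth = pool(conv_valid(size, 3)), 170

    flat = size * size * depth  # length of the Flatten() output
    layers.append(("dense128", flat * 128 + 128))
    layers.append(("output", 128 * num_classes + num_classes))
    return flat, layers


flat, layers = count_params()
for name, n in layers:
    print(f"{name:>8}: {n:,}")
print("flatten size:", flat)
print("total:", f"{sum(n for _, n in layers):,}")
```

For 200x200x3 inputs the `Flatten()` output has 48 * 48 * 170 = 391,680 values, so the `Dense(128)` layer alone has 50,135,168 parameters, well over 99% of the model for any realistic number of classes. `model.summary()` in Keras should report the same per-layer counts.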