This set of questions probes your understanding of the relationships among (a) the parameter estimates and (b) their variance-covariance matrix when the explanatory variables in an ordinary least squares (OLS) regression are transformed. Let's begin, then, by discussing this generally. And because this is a self-study question, I won't provide the specifics: that's for you to enjoy working out.

Let the original model be

$$E[y] = X\beta$$

where the columns of the model matrix $X$ are the values of the explanatory variables (perhaps including a constant, or "intercept," term) and $\beta$ is the corresponding vector of (unknown) coefficients to be estimated.

Suppose instead $X= Z\mathbb{A}$ expresses the variables in terms of other variables $Z$ where the matrix $\mathbb A$ represents an invertible linear change of variables with inverse $\mathbb{A}^{-1}.$ Because

$$X\beta = X\,\mathbb{A}^{-1}\mathbb{A}\,\beta = (X\mathbb{A}^{-1})\, (\mathbb{A}\beta) = Z(\mathbb{A}\beta),$$

by writing $\gamma=\mathbb{A}\beta$ for the new parameters the model is expressed as

$$E[y] = Z\gamma.$$

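Consequently, when $\hat\beta$ is the OLS estimate of $\beta$ with (estimated) variance-covariance matrix $\widehat{\mathbb{V}} = \operatorname{Var}(\hat\beta),$ the corresponding estimate of $\gamma$ must be $\hat\gamma = \mathbb{A}\hat\beta.$ The reason is that $Z\gamma = X\beta$ whenever $\gamma = \mathbb{A}\beta,$ so the two parameterizations produce the same fitted values and therefore the same sum of squares of residuals: minimizing over $\beta$ is the same as minimizing over $\gamma.$ Moreover, the variance-covariance matrix for $\hat\gamma$ is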

$$\widehat{\mathbb W} = \operatorname{Var}(\hat\gamma) = \operatorname{Var}(\mathbb{A}\hat\beta) = \mathbb{A}\operatorname{Var}(\hat\beta) \mathbb{A}^\prime = \mathbb{A}\widehat{\mathbb{V}}\mathbb{A}^\prime.$$
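If you would like to convince yourself of these two relationships numerically before applying them, here is a minimal sketch with NumPy. Everything in it is made up for illustration: the simulated data, the helper `ols`, and the particular matrix `A` are my assumptions, not values from the question.

```python
import numpy as np

def ols(M, y):
    """OLS coefficients and their estimated variance-covariance matrix."""
    n, p = M.shape
    coef, *_ = np.linalg.lstsq(M, y, rcond=None)
    resid = y - M @ coef
    sigma2 = resid @ resid / (n - p)           # residual variance estimate
    cov = sigma2 * np.linalg.inv(M.T @ M)      # sigma^2 (M'M)^{-1}
    return coef, cov

# Simulated data (hypothetical; not the values in the question).
rng = np.random.default_rng(0)
n = 50
x = rng.normal(size=n)
z = 0.5 * x + rng.normal(size=n)
y = 1.0 + 2.0 * x - 1.0 * z + rng.normal(size=n)

X = np.column_stack([np.ones(n), x, z])        # original model matrix

# An arbitrary invertible change of variables (an assumption for this demo).
A = np.array([[1.0, 0.0,  0.0],
              [0.0, 1.0,  1.0],
              [0.0, 1.0, -1.0]])
Z = X @ np.linalg.inv(A)                       # chosen so that X = Z A

beta_hat, V_hat = ols(X, y)
gamma_hat, W_hat = ols(Z, y)

print(np.allclose(gamma_hat, A @ beta_hat))    # gamma-hat = A beta-hat
print(np.allclose(W_hat, A @ V_hat @ A.T))     # W-hat = A V-hat A'
```

Both checks should agree up to floating-point error, because the residuals (and hence the residual variance estimate) are identical in the two fits.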

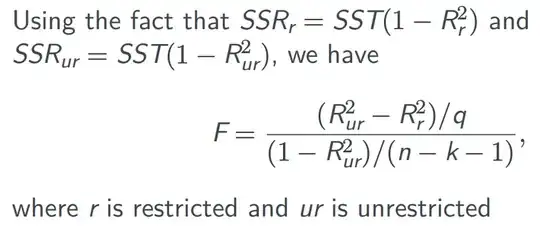

These observations quickly (and easily) yield all the missing values except (G), the $R^2$ for model (3). That can be determined from the $R^2$ and $SSR$ values in models (1) and (3). The idea is that the data in column (1) suffice to determine the variance of $y.$ That and the $SSR$ in column (3) yield its $R^2$ value.
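The $R^2$ bookkeeping can be sketched as follows. I am assuming here that $SSR$ means the sum of squares of residuals and that $R^2 = 1 - SSR/SST$, where $SST$ is the total sum of squares of $y$ about its mean; the function names and the simulated data are hypothetical, and the "reduced" model is just a stand-in for a model like (3).

```python
import numpy as np

def fit_summary(M, y):
    """Return (SSR, R^2) for an OLS fit of y on the columns of M."""
    coef, *_ = np.linalg.lstsq(M, y, rcond=None)
    resid = y - M @ coef
    ssr = float(resid @ resid)
    sst = float(((y - y.mean()) ** 2).sum())
    return ssr, 1.0 - ssr / sst

def r2_from_reference(r2_ref, ssr_ref, ssr_new):
    """Recover R^2 of a new model from its SSR and a reference model's (R^2, SSR)."""
    sst = ssr_ref / (1.0 - r2_ref)   # the reference model pins down SST
    return 1.0 - ssr_new / sst

# Simulated data (hypothetical; not the values in the question).
rng = np.random.default_rng(1)
n = 40
x = rng.normal(size=n)
z = rng.normal(size=n)
y = 0.5 + x + 2.0 * z + rng.normal(size=n)

full = np.column_stack([np.ones(n), x, z])       # plays the role of model (1)
reduced = np.column_stack([np.ones(n), x + z])   # plays the role of model (3)

ssr_full, r2_full = fit_summary(full, y)
ssr_red, r2_red = fit_summary(reduced, y)

r2_recovered = r2_from_reference(r2_full, ssr_full, ssr_red)
print(np.isclose(r2_recovered, r2_red))
```

The point of the sketch is only that $SST$ is a property of $y$ alone, so any one model's $R^2$ and $SSR$ determine it for all the others.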

The hardest part is finding the off-diagonal terms of the variance-covariance matrices. This amounts to determining the covariance of $v$ and $w$ (or, equivalently, of $x$ and $z$). Since models (1), (2), and (4) are equivalent and the standard errors (square roots of diagonal elements of the variance-covariance matrices) are given only for (1), there's little hope of finding the correlation from those data alone: thus, the key value must be the standard error of $v$ in model (3). To relate it to model (1), use the fact that

$$\operatorname{Var}(v) = \operatorname{Var}(x+z) = \operatorname{Var}(x) + \operatorname{Var}(z) + 2\operatorname{Cov}(x,z).$$
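The variance identity above can be turned around to solve for the covariance once the three variances are known. Here is a small sketch using sample moments of simulated data (the data and variable names are mine, purely for illustration); the same algebra applies to any pair of quantities with finite variances.

```python
import numpy as np

# Simulated, deliberately correlated data (hypothetical).
rng = np.random.default_rng(2)
n = 200
x = rng.normal(size=n)
z = 0.7 * x + rng.normal(size=n)
v = x + z

var_x = np.var(x, ddof=1)
var_z = np.var(z, ddof=1)
var_v = np.var(v, ddof=1)

# Rearranged identity: Cov(x, z) = (Var(x + z) - Var(x) - Var(z)) / 2
cov_xz = (var_v - var_x - var_z) / 2.0

# Compare with the directly computed sample covariance (np.cov uses ddof=1).
print(np.isclose(cov_xz, np.cov(x, z)[0, 1]))
```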

Finally, because all models include an intercept, you may first "take out" the intercept (as usual) by centering all the variables. This reduces your models from three (or two) variables to just two (or one). Moreover, the sums of squares and products (SSP) matrix $X^\prime X$ of the centered variables will then be a multiple of the covariance matrix of the variables. This matrix plays a prominent role in formulas for the parameter estimates and estimates of their variance-covariance matrix: it is what you want to focus on.
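To see what centering buys you, here is a brief sketch (again with simulated data and names of my own choosing). It checks two things: the slopes from the centered regression without an intercept match the slopes from the original regression with one, and the centered $X^\prime X$ equals $n-1$ times the sample covariance matrix.

```python
import numpy as np

# Simulated data (hypothetical; not the values in the question).
rng = np.random.default_rng(3)
n = 60
x = rng.normal(size=n)
z = 0.4 * x + rng.normal(size=n)
y = 2.0 + 1.5 * x - 0.5 * z + rng.normal(size=n)

X = np.column_stack([x, z])            # explanatory variables, no constant

# Fit with an explicit intercept column.
X1 = np.column_stack([np.ones(n), X])
coef_full, *_ = np.linalg.lstsq(X1, y, rcond=None)

# Center everything, then fit without an intercept.
Xc = X - X.mean(axis=0)
yc = y - y.mean()
coef_centered, *_ = np.linalg.lstsq(Xc, yc, rcond=None)

print(np.allclose(coef_centered, coef_full[1:]))   # same slopes

# Centered SSP matrix is (n - 1) times the sample covariance matrix.
ssp = Xc.T @ Xc
print(np.allclose(ssp, (n - 1) * np.cov(X, rowvar=False)))
```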

Please notice that because the question concerns only estimates and standard errors of estimate, nothing depends on distributional assumptions. Don't even think about Normal, Student $t$, or $F$ distributions: although you might be able to compute some of the missing values that way, it would be a rather roundabout method (essentially, you would be converting estimates and standard errors to probabilities and then converting back again) and likely quite a bit more complicated than necessary.