I'm building a Siamese network that should learn a face comparison function. My model consists of a CNN that takes 2 inputs and yields 2 embedding vectors. From the outputs I calculate:

tf.math.abs(embed[1] - embed[0])

and feed the result to a Dense(1, activation='sigmoid') layer.

The loss function is Binary Cross Entropy.
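To make the setup concrete, here is a minimal sketch of the architecture described above. The encoder layers, input size, embedding size, optimizer and the names `build_encoder`, `build_siamese` and `AbsDiff` are assumptions made up for illustration; only the shared CNN, the absolute difference, the `Dense(1, activation='sigmoid')` head and the binary cross-entropy loss come from the description.

```python
import tensorflow as tf
from tensorflow.keras import layers, Model


class AbsDiff(layers.Layer):
    """Element-wise |embed[1] - embed[0]| (hypothetical helper layer)."""

    def call(self, inputs):
        first, second = inputs
        return tf.math.abs(second - first)


def build_encoder(input_shape=(64, 64, 3), embed_dim=32):
    # Placeholder CNN; the real layer stack is not given in the post.
    inputs = layers.Input(shape=input_shape)
    x = layers.Conv2D(16, 3, activation="relu")(inputs)
    x = layers.MaxPooling2D()(x)
    x = layers.Conv2D(32, 3, activation="relu")(x)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(embed_dim)(x)
    return Model(inputs, outputs, name="encoder")


def build_siamese(input_shape=(64, 64, 3)):
    encoder = build_encoder(input_shape)  # one CNN, shared by both inputs
    img_a = layers.Input(shape=input_shape, name="img_a")
    img_b = layers.Input(shape=input_shape, name="img_b")
    embed = [encoder(img_a), encoder(img_b)]
    diff = AbsDiff()(embed)
    same_person = layers.Dense(1, activation="sigmoid")(diff)
    model = Model([img_a, img_b], same_person, name="siamese")
    model.compile(
        optimizer="adam",  # assumption: the optimizer is not stated in the post
        loss=tf.keras.losses.BinaryCrossentropy(),
        metrics=["accuracy"],
    )
    return model
```

Because both branches share one encoder and the difference is taken in absolute value, the output of this sketch does not depend on the order of the two images.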

I'm using the LFW dataset for testing (since it's a small dataset), and later on, once I find a good model, I want to train on the CelebA dataset.

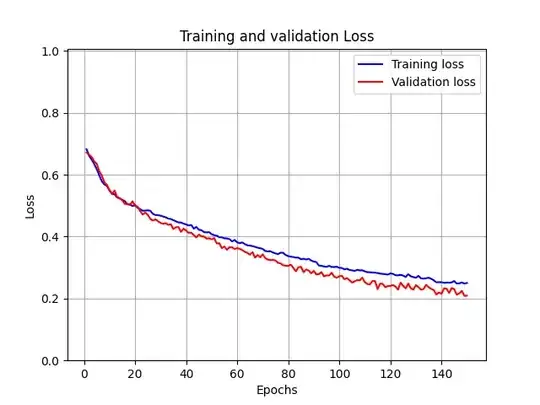

At first I was trying to find a good model (which combination of layers works best), and I found that a smaller model actually prevents overfitting and gives better scores: lower loss and higher accuracy on both the training and validation sets (~94% accuracy).

After training the model, I tested it on some images that I took with my phone camera. I got around 57% accuracy (on around 20 samples). Only after collecting 70 samples did I get slightly better accuracy (max 77%).
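For reference, a minimal sketch of how accuracy on labelled image pairs could be measured from the sigmoid outputs. The helper name `pair_accuracy`, the 0.5 threshold and the example scores are assumptions for illustration, not values from my test set.

```python
import numpy as np


def pair_accuracy(scores, labels, threshold=0.5):
    """Fraction of pairs whose thresholded score matches the label.

    scores: sigmoid outputs in [0, 1], one per image pair.
    labels: 1 for "same person", 0 for "different person".
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    predictions = (scores >= threshold).astype(int)
    return float((predictions == labels).mean())


# Made-up scores for four pairs, only to show the call.
example_scores = [0.91, 0.35, 0.62, 0.08]
example_labels = [1, 1, 0, 0]
print(pair_accuracy(example_scores, example_labels))
```

With only about 20 pairs, a single flipped prediction moves this number by 5 percentage points, so small test sets give a noisy estimate.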

That wasn't satisfying enough, and I read somewhere that image augmentations could help.

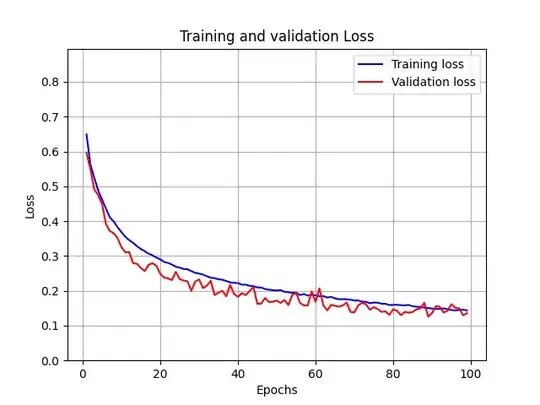

After adding augmentations (random HSV noise, random brightness, random contrast, random rotations, random zoom, random shifts), I got even better results on the training and validation sets (at this point I didn't measure the accuracy, only the loss, which decreased from 2.11 to 1.5, which is great). But when I tested the model on my own images, the accuracy dropped even more.

I got 68% accuracy (down from 77%).

I can't tell what I should do now. How can I improve the model so that the accuracy is better than 77%, if augmentations such as HSV and contrast changes don't help the model's robustness?

NOTE: I added the augmentations as Layer classes so that training would be faster. I couldn't find a way to remove them after .fit(), so if anyone knows how to remove them, I would appreciate the help.
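One possible way to handle this, sketched under assumptions: Keras' built-in random augmentation layers only act when called with `training=True` and pass images through unchanged at inference, and a custom layer can follow the same convention through the `training` argument of `call`. Keeping the CNN as its own model and wrapping the augmentations around it only for training also means the bare CNN can be used after `.fit()`. `RandomBrightnessSketch`, `build_core`, the layer sizes and the augmentation factors below are hypothetical stand-ins, not my actual layers.

```python
import tensorflow as tf
from tensorflow.keras import layers


class RandomBrightnessSketch(layers.Layer):
    """Hypothetical custom augmentation that is a no-op outside training."""

    def __init__(self, max_delta=0.2, **kwargs):
        super().__init__(**kwargs)
        self.max_delta = max_delta

    def call(self, images, training=None):
        if not training:
            return images  # inference: leave the images untouched
        delta = tf.random.uniform([], -self.max_delta, self.max_delta)
        return tf.clip_by_value(images + delta, 0.0, 1.0)  # assumes [0, 1] pixels


# Augmentations live in their own block, separate from the CNN.
augment = tf.keras.Sequential(
    [
        layers.RandomRotation(0.05),
        layers.RandomZoom(0.1),
        layers.RandomTranslation(0.1, 0.1),
        layers.RandomContrast(0.2),
        RandomBrightnessSketch(0.2),
    ],
    name="augment",
)


def build_core(input_shape=(64, 64, 3)):
    # Placeholder CNN standing in for the real embedding network.
    inputs = layers.Input(shape=input_shape)
    x = layers.Conv2D(8, 3, activation="relu")(inputs)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(16)(x)
    return tf.keras.Model(inputs, outputs, name="core")


core = build_core()

# Training wrapper: augment first, then the same `core` object (shared weights).
train_inputs = layers.Input(shape=(64, 64, 3))
train_model = tf.keras.Model(train_inputs, core(augment(train_inputs)), name="train_model")

# After fitting `train_model`, `core` holds the trained weights and
# contains no augmentation layers at all.
images = tf.fill([2, 64, 64, 3], 0.5)
unchanged = augment(images, training=False)
```

In this layout `train_model` would be the one passed to `.fit()`, while `core` is the model used for prediction afterwards. The same wrapping would apply to each input branch of a two-input Siamese model.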

Below is an image of the whole model, including the augmentation layers TensorFlow offers and 2 custom ones (RandomHSV and RandomBrightness).