What counts as a good precision, recall, or F1 score depends heavily on your application. For example, if you are trying to detect cancer cells in patients, you will likely care more about the number of false negatives than the number of false positives. A false negative could lead to the death of a patient, while the worst that can happen in the case of a false positive is an upset patient.

Since you care more about minimizing false negatives than minimizing false positives in that case, you would pay more attention to recall than to precision: recall is the fraction of actual positives that the model catches, so every false negative lowers it. In other applications, false positives may be more costly than false negatives, and precision matters more. Therefore, you should check for typical values in papers in your application area.
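To make the trade-off concrete, here is a minimal sketch of how the three metrics follow from the confusion counts. The function name `precision_recall_f1` and the counts are made up for illustration; they are not taken from your results.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from raw confusion counts."""
    # Precision: of everything flagged positive, how much was truly positive?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything truly positive, how much did we flag?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 80 true positives, 40 false positives, 20 false negatives.
p, r, f1 = precision_recall_f1(tp=80, fp=40, fn=20)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Note that false negatives only enter through recall, and false positives only through precision, which is why the cost of each error type tells you which metric to watch.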

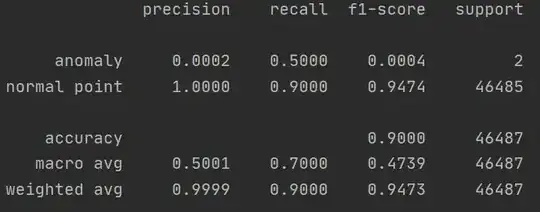

Since you are evaluating the performance of an anomaly detector, there exist two classes: anomalous and non-anomalous. This is a binary classification problem, so I am not sure how macro and weighted averages are relevant here.

In case I am wrong and this is a multi-class classification problem, deciding whether to use the macro average or weighted average will again depend on what is normally done in your application area. This answer provides an informative overview.
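For reference, this is roughly how the two averages differ. It is a sketch with made-up per-class F1 scores and supports (number of true samples per class), not your actual numbers, and the helper names are my own.

```python
def macro_average(scores):
    """Unweighted mean: every class counts equally."""
    return sum(scores) / len(scores)


def weighted_average(scores, supports):
    """Mean weighted by support: larger classes count more."""
    total = sum(supports)
    return sum(s * n for s, n in zip(scores, supports)) / total


# Hypothetical imbalanced case: a large majority class and a small minority class.
f1_per_class = [0.98, 0.50]
support = [950, 50]

macro = macro_average(f1_per_class)
weighted = weighted_average(f1_per_class, support)
print(f"macro={macro:.3f} weighted={weighted:.3f}")
```

With imbalanced classes, the weighted average is dominated by the majority class, while the macro average exposes poor performance on the minority class.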