$X$ is the random variable. The quantity whose expectation you are taking can be viewed in two ways. As written, it is the unit vector pointing from $X$ to $x.$ One route to a simple solution is to negate it, so that it becomes the unit vector pointing from $x$ to $X.$ This negation changes nothing: the factor $-1$ comes out of the expectation and disappears when the norm is taken. Thus, $(X-x)/|X-x|$ projects $X$ onto the unit sphere centered at $x.$
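Both viewpoints can be checked numerically. The sketch below uses only the Python standard library. The observer position `x`, the standard normal sample standing in for $X$, and the helper names (`sub`, `unit`, `mean`) are illustrative assumptions, not part of the argument. It confirms that each $x + (X-x)/|X-x|$ sits on the unit sphere around $x$ and that negating the unit vectors leaves the norm of their mean unchanged.

```python
import math
import random

def sub(a, b):
    return [ai - bi for ai, bi in zip(a, b)]

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def unit(v):
    n = norm(v)
    return [c / n for c in v]

def mean(vectors):
    k = len(vectors)
    return [sum(v[i] for v in vectors) / k for i in range(3)]

random.seed(0)
x = [5.0, -2.0, 1.0]                      # hypothetical observer position
samples = [[random.gauss(0, 1) for _ in range(3)] for _ in range(1000)]

forward = [unit(sub(x, X)) for X in samples]   # (x - X)/|x - X|: from X toward x
backward = [unit(sub(X, x)) for X in samples]  # (X - x)/|X - x|: from x toward X

# Each x + (X - x)/|X - x| lies on the unit sphere centred at x.
dists = [norm(sub([a + b for a, b in zip(x, u)], x)) for u in backward]
print("max |distance - 1|:", max(abs(d - 1) for d in dists))

# Negation flips the mean vector but leaves its norm unchanged.
print(norm(mean(forward)), norm(mean(backward)))
```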

(In physical terms: I am inviting you to conceive of this setting not in terms of some fixed frame from which to examine $X,$ but in terms of a moving frame attached to $x.$)

Think, then, of $X$ as a probability "cloud" of points, like a galactic nebula, and $x$ as a starship receding from the cloud (and perhaps orbiting it at the same time). The starship always looks backwards at the point whence it came. (Physically, this determines a special set of moving reference frames useful for the analysis.) From the starship's perspective, the cloud shrinks toward the image of the ship's starting point in the viewfinder (the unit sphere). Consequently, the projection of the cloud onto a unit sphere around the ship's telescope converges towards the unit vector pointing back at the ship's point of origin, so the length of the vector average of the projected points approaches $1.$
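The starship picture can be watched numerically with a Monte Carlo estimate of $|E[(x-X)/|x-X|]|$ as the ship moves outward. This is a sketch under assumptions: the cloud is taken (hypothetically) to be a standard normal sample in $\mathbb{R}^3$, the ship moves along the first axis, and `mean_unit_norm` is an illustrative helper name.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def mean_unit_norm(x, samples):
    """Monte Carlo estimate of |E[(x - X)/|x - X|]| from draws of X."""
    total = [0.0, 0.0, 0.0]
    for X in samples:
        d = [xi - Xi for xi, Xi in zip(x, X)]
        n = norm(d)
        for i in range(3):
            total[i] += d[i] / n
    return norm([t / len(samples) for t in total])

random.seed(1)
# Assumed cloud: standard normal in R^3 (any distribution would do).
cloud = [[random.gauss(0, 1) for _ in range(3)] for _ in range(20000)]

radii = [0.5, 2.0, 10.0, 100.0, 1000.0]
norms = [mean_unit_norm([R, 0.0, 0.0], cloud) for R in radii]
for R, m in zip(radii, norms):
    print(f"|x| = {R:7.1f}   |E| ~ {m:.6f}")
```

Inside the cloud the projected directions largely cancel; far away they all point nearly the same way and the estimated norm approaches $1.$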

One technical problem is that the cloud could extend throughout all of space. How do we handle that? The key is that, once the ship has traveled far enough outward, most of the cloud will lie near the center of the ship's viewfinder. The rest of the cloud, which could lie at arbitrarily great distances, projects to vectors that could be scattered all over the unit sphere, but they all have unit length (by construction). Their mean is therefore a vector $z$ of length at most $1,$ and because the probability of this remote part of the cloud can be made arbitrarily small, its contribution to the expectation is negligible.
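To see that a cloud reaching arbitrarily far out causes no trouble, the sketch below assumes (hypothetically) a heavy-tailed cloud with independent standard Cauchy coordinates, so $E|X|$ is infinite. It checks that the mean $z$ of the projections of the remote part of the cloud has length at most $1,$ and that the overall norm still approaches $1$ as $|x|$ grows. The 90% cutoff and the helper names are illustrative choices.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def unit(v):
    n = norm(v)
    return [c / n for c in v]

def mean_of(vectors):
    k = len(vectors)
    return [sum(v[i] for v in vectors) / k for i in range(3)]

def cauchy():
    # Standard Cauchy draw by inverse-CDF sampling.
    return math.tan(math.pi * (random.random() - 0.5))

def projections(x, points):
    # (x - X)/|x - X| for every sampled X.
    return [unit([a - b for a, b in zip(x, X)]) for X in points]

random.seed(2)
cloud = [[cauchy() for _ in range(3)] for _ in range(20000)]

# The remote part of the cloud: points beyond the radius holding ~90% of it.
r = sorted(norm(X) for X in cloud)[int(0.9 * len(cloud))]
remote = [X for X in cloud if norm(X) > r]
z = mean_of(projections([1e4, 0.0, 0.0], remote))
print(f"remote share = {len(remote) / len(cloud):.3f},  |z| = {norm(z):.4f}")

radii = [1.0, 1e2, 1e4, 1e6]
results = [norm(mean_of(projections([R, 0.0, 0.0], cloud))) for R in radii]
for R, m in zip(radii, results):
    print(f"|x| = {R:9.0f}   |E| ~ {m:.6f}")
```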

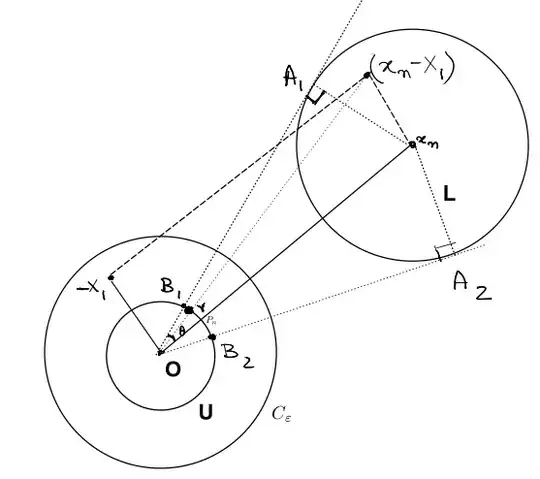

The method of proof should now be clear. Let $\delta \gt 0$ be an arbitrarily small angular distance (from the starship's perspective), and let $\epsilon\gt 0$ be the proportion of the $X$ cloud that, as seen from $x,$ might lie more than an angular distance $\delta$ from some fixed reference point $O$ (which we might as well take to be the origin). Then almost all the values of $(X-x)/|X-x|$ are very nearly parallel to the vector from $x$ to $O$ (namely, $-x$), and their contribution to the expectation will be close to the unit vector in that direction. Since $\epsilon$ is tiny, the projection of the rest of $X$ doesn't change that expectation much. Thus, the norm of the expectation approaches the norm of the unit vector from $x$ to $O,$ which is always $1,$ QED.
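The proportion $\epsilon$ in this plan can be estimated directly: count the share of the cloud that, viewed from $x,$ lies more than angle $\delta$ away from the direction of $O.$ This is a sketch assuming a standard normal cloud, $O$ at the origin, and a hypothetical tolerance $\delta = 0.05$ radians; `fraction_beyond` is an illustrative name.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def angle(u, v):
    """Angle in radians between nonzero vectors u and v."""
    c = sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, c)))

def fraction_beyond(x, cloud, delta):
    """Share of the cloud seen more than `delta` radians from O (the origin), viewed from x."""
    to_origin = [-c for c in x]
    far = sum(1 for X in cloud
              if angle([a - b for a, b in zip(X, x)], to_origin) > delta)
    return far / len(cloud)

random.seed(3)
cloud = [[random.gauss(0, 1) for _ in range(3)] for _ in range(5000)]
delta = 0.05  # assumed angular tolerance, in radians

radii = [2.0, 10.0, 50.0, 1000.0]
fractions = [fraction_beyond([R, 0.0, 0.0], cloud, delta) for R in radii]
for R, f in zip(radii, fractions):
    print(f"|x| = {R:6.0f}   epsilon ~ {f:.4f}")
```

For a fixed $\delta,$ the estimated $\epsilon$ falls toward $0$ as the ship recedes, which is what lets the "rest of $X$" be neglected.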

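This proof can be carried out formally in many ways. The following proceeds according to the foregoing plan. Thus, let $\epsilon \gt 0$ and $\delta\gt 0$ be arbitrarily small numbers (in particular, $\delta \lt \pi/2$); let $r$ be the smallest radius for which $\Pr(|X| \le r) \ge 1-\epsilon,$ and choose $x$ such that $|x| \ge r\csc(\delta).$ This guarantees that when $|X|\le r,$

$$\left|y\right| \le 2\sin(\delta/2)\lt \delta\tag{*}$$

where

$$y= \frac{x-X}{|x-X|} - \frac{x}{|x|}.$$

($y$ is the vector displacement between $X$ and the origin in the ship's viewfinder. To see $(*)$, note that $|X| \le r \le |x|\sin(\delta) \lt |x|,$ so the angle $\theta$ between $x-X$ and $x$ is acute and satisfies $\sin\theta \le |X|/|x| \le \sin\delta,$ whence $\theta\le\delta.$ Since $y$ is a chord of the unit sphere subtending the angle $\theta,$ $|y| = 2\sin(\theta/2) \le 2\sin(\delta/2) \lt \delta.$)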
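The part of $(*)$ the proof relies on, $|y| \lt \delta,$ can be spot-checked by sampling. This sketch assumes hypothetical values $r = 3$ and $\delta = 0.2,$ places $x$ at the smallest allowed distance $r\csc(\delta),$ and draws $X$ uniformly over directions on the sphere $|X| = r,$ where the largest displacements occur. `y_vector` is an illustrative helper.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def unit(v):
    n = norm(v)
    return [c / n for c in v]

def y_vector(x, X):
    """y = (x - X)/|x - X| - x/|x|, as defined in the text."""
    return [a - b for a, b in zip(unit([p - q for p, q in zip(x, X)]), unit(x))]

random.seed(4)
r, delta = 3.0, 0.2               # assumed values; need 0 < delta < pi/2
R = r / math.sin(delta)           # |x| = r csc(delta), the smallest allowed
x = [R, 0.0, 0.0]

worst = 0.0
for _ in range(20000):
    # X uniform over directions on the sphere |X| = r (the extreme case of |X| <= r).
    X = [r * c for c in unit([random.gauss(0, 1) for _ in range(3)])]
    worst = max(worst, norm(y_vector(x, X)))

print(f"max |y| ~ {worst:.5f}   delta = {delta}")
```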

If necessary, adjust $\epsilon$ downwards to $\epsilon^\prime$ so that $1-\epsilon^\prime= \Pr(|X|\le r).$ This is needed to deal with distributions of $|X|$ that have atoms, for which $\Pr(|X|\le r)$ can jump past $1-\epsilon.$
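For a distribution with atoms, the adjustment from $\epsilon$ to $\epsilon^\prime$ is easy to compute exactly. The sketch below uses a hypothetical distribution of $|X|$ with atoms at $0, 1, 2, 5$ and exact rational arithmetic; `smallest_radius` is an illustrative name. Atoms sharing a radius are merged first so that $\Pr(|X|\le r)$ includes all of them.

```python
from fractions import Fraction

def smallest_radius(atoms, eps):
    """atoms: (radius, probability) pairs for |X|. Returns (r, eps_prime)."""
    mass = {}
    for radius, p in atoms:
        mass[radius] = mass.get(radius, 0) + p
    cumulative = Fraction(0)
    for radius in sorted(mass):
        cumulative += mass[radius]
        if cumulative >= 1 - eps:
            return radius, 1 - cumulative   # eps_prime = 1 - Pr(|X| <= r)
    raise ValueError("probabilities must sum to 1")

# Hypothetical distribution of |X| with atoms (no density).
atoms = [(0, Fraction(1, 2)), (1, Fraction(3, 10)),
         (2, Fraction(3, 20)), (5, Fraction(1, 20))]
r, eps_prime = smallest_radius(atoms, Fraction(1, 10))
print(r, eps_prime)   # 2 1/20
```

Here $\Pr(|X|\le r)$ jumps from $4/5$ to $19/20,$ so $\epsilon = 1/10$ is lowered to $\epsilon^\prime = 1/20.$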

Writing the expectation in terms of expectations conditioned on the events $|X|\le r$ and $|X|\gt r,$ we have

$$E\left[\frac{x-X}{|x-X|}\right] = (1-\epsilon^\prime)\left(\frac{x}{|x|} + \bar y\right) + (\epsilon^\prime)z$$

where $\bar y = E\left[y \mid |X|\le r\right]$ is the conditional expectation of $y,$ so that $|\bar y| \le \delta$ by $(*),$ and $z$ is the conditional expectation of $(x-X)/|x-X|$ for $|X|\gt r,$ so that $|z|\le 1.$ Taking norms of both sides (note that $|\,x/|x|\,|=1$) and applying the triangle inequality along with these bounds on $|\bar y|$ and $|z|$ yields

$$(1-\epsilon^\prime)(1-\delta) - (\epsilon^\prime)(1) \le \Bigg| E\left[\frac{x-X}{|x-X|}\right]\Bigg| \le (1-\epsilon^\prime)(1+\delta) + (\epsilon^\prime)(1).$$
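The conditional decomposition and the resulting bounds can be verified exactly for a small discrete example. This is a sketch under assumptions: the four-atom distribution of $X,$ the choices $r = 2,$ $\delta = 0.1,$ and $x = (0, 25, 0)$ (which satisfies $|x| \ge r\csc\delta \approx 20.03$) are all hypothetical, as are the helper names.

```python
import math

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def unit(v):
    n = norm(v)
    return [c / n for c in v]

def axpy(a, u, v):
    """Return a*u + v componentwise."""
    return [a * p + q for p, q in zip(u, v)]

# Hypothetical discrete X given as (point, probability) atoms.
dist = [([0.0, 0.0, 0.0], 0.5), ([1.0, 0.0, 0.0], 0.3),
        ([0.0, 2.0, 0.0], 0.15), ([30.0, 0.0, 0.0], 0.05)]
r, delta = 2.0, 0.1
x = [0.0, 25.0, 0.0]                 # |x| = 25 >= r*csc(delta) ~ 20.03
u = unit(x)

def v_of(X):
    # (x - X)/|x - X|
    return unit([a - b for a, b in zip(x, X)])

def y_of(X):
    # y = (x - X)/|x - X| - x/|x|
    return [a - b for a, b in zip(v_of(X), u)]

def cond_mean(atoms, f):
    """Conditional expectation of f(X) given that X is one of `atoms`."""
    total = sum(p for _, p in atoms)
    acc = [0.0, 0.0, 0.0]
    for X, p in atoms:
        acc = axpy(p / total, f(X), acc)
    return acc

inside = [(X, p) for X, p in dist if norm(X) <= r]
outside = [(X, p) for X, p in dist if norm(X) > r]
eps_prime = sum(p for _, p in outside)          # 1 - Pr(|X| <= r)
y_bar = cond_mean(inside, y_of)
z = cond_mean(outside, v_of)

direct = [0.0, 0.0, 0.0]
for X, p in dist:
    direct = axpy(p, v_of(X), direct)
split = axpy(eps_prime, z, [(1 - eps_prime) * (a + b) for a, b in zip(u, y_bar)])

lower = (1 - eps_prime) * (1 - delta) - eps_prime
upper = (1 - eps_prime) * (1 + delta) + eps_prime
print("|y_bar| =", norm(y_bar), " |z| =", norm(z))
print(f"{lower:.4f} <= {norm(direct):.4f} <= {upper:.4f}")
```

The direct expectation and the split form agree to rounding error, and the norm of the expectation falls between the two bounds.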

These bounds hold for every $x$ with $|x| \ge r\csc(\delta).$ Since both $\epsilon^\prime \le \epsilon$ and $\delta$ can be made arbitrarily small, both bounds converge to $1,$ proving $$1 = \lim_{|x|\to\infty} \Bigg|E\left[\frac{x-X}{|x-X|}\right]\Bigg|,$$ QED.
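The limit argument can be made concrete: each choice of $\epsilon$ and $\delta$ yields an explicit radius beyond which the two-sided bound holds. The sketch assumes, hypothetically, that $|X|$ is Exponential(1). That distribution is continuous, so $\epsilon^\prime = \epsilon$ and $r = -\ln\epsilon.$ `bounds_for` is an illustrative name.

```python
import math

def bounds_for(eps, delta):
    """Sketch assuming |X| ~ Exponential(1), so Pr(|X| <= r) = 1 - exp(-r)."""
    r = -math.log(eps)           # smallest r with Pr(|X| <= r) >= 1 - eps
    R = r / math.sin(delta)      # the argument applies to every |x| >= R
    lower = (1 - eps) * (1 - delta) - eps
    upper = (1 - eps) * (1 + delta) + eps
    return R, lower, upper

table = [(eps, delta) + bounds_for(eps, delta)
         for eps, delta in [(0.1, 0.1), (0.01, 0.01), (0.001, 0.001)]]
for eps, delta, R, lo, hi in table:
    print(f"eps={eps:<6} delta={delta:<6} |x| >= {R:9.1f}   {lo:.4f} <= |E| <= {hi:.4f}")
```

Shrinking $\epsilon$ and $\delta$ pushes the required radius outward while squeezing both bounds toward $1,$ which is exactly the $\epsilon$-$\delta$ form of the limit.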