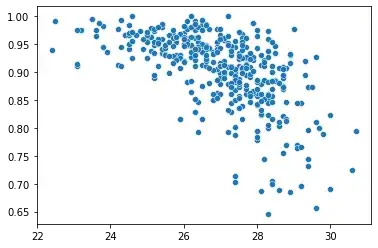

I'm trying to fit a neural net to a fairly simple one-variable regression problem. The output is a probability and the input is a continuous feature. The association is clearly negative, as the scatterplot produced by the code below shows:

    import numpy as np
    import seaborn as sns

    X = np.array(impute_df['runner1_speed'])
    y = np.array(impute_df['dest_1__'])
    sns.scatterplot(x=X, y=y);

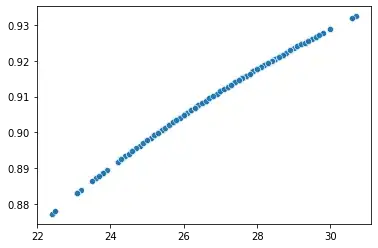

But with the simple net below, the fitted values are positively associated with the predictor.

    # Imports assume the TensorFlow-bundled Keras
    from tensorflow import keras
    from tensorflow.keras import layers

    # One input feature -> 16 ReLU units -> sigmoid output in (0, 1)
    inputs = keras.Input(shape=(1,))
    hidden = layers.Dense(16, activation="relu")(inputs)
    outputs = layers.Dense(1, activation="sigmoid")(hidden)
    impute_mod = keras.Model(inputs=inputs, outputs=outputs, name="impute_mod")

    impute_mod.compile(
        loss=keras.losses.MeanSquaredError(),
        optimizer=keras.optimizers.Adam(learning_rate=0.001))

    history = impute_mod.fit(X, y, batch_size=4, epochs=20, verbose=0)

    # Plot fitted values against the predictor
    sns.scatterplot(x=X, y=impute_mod.predict(X)[:,0])
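To put a number on the direction of each relationship rather than reading it off the plots, one option is to compare the sign of the Pearson correlation between the feature and the target with the sign of the correlation between the feature and the fitted values. The sketch below runs on synthetic stand-in data only: the `speed`, `prob`, and `fitted` arrays and the `association_sign` helper are hypothetical and not taken from my real data. With the real arrays, `X`, `y`, and `impute_mod.predict(X)[:, 0]` would take their places.

```python
import numpy as np

def association_sign(x, values):
    """Return the Pearson correlation between x and values; its sign gives the direction."""
    x = np.asarray(x, dtype=float).ravel()
    values = np.asarray(values, dtype=float).ravel()
    return float(np.corrcoef(x, values)[0, 1])

# Synthetic stand-in data (assumption): a probability that decreases with speed.
rng = np.random.default_rng(0)
speed = rng.uniform(5.0, 15.0, size=200)
prob = np.clip(0.9 - 0.05 * speed + rng.normal(0.0, 0.03, size=200), 0.0, 1.0)

# Hypothetical fitted values that trend the wrong way, mimicking the problem above.
fitted = 0.2 + 0.01 * speed

r_target = association_sign(speed, prob)
r_fitted = association_sign(speed, fitted)
print(f"corr(X, y)      = {r_target:.3f}")
print(f"corr(X, fitted) = {r_fitted:.3f}")
```

Opposite signs on the two correlations would confirm what the two scatterplots suggest visually.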

I must be doing something stupid but I can't figure out what. Any ideas? Thanks so much!