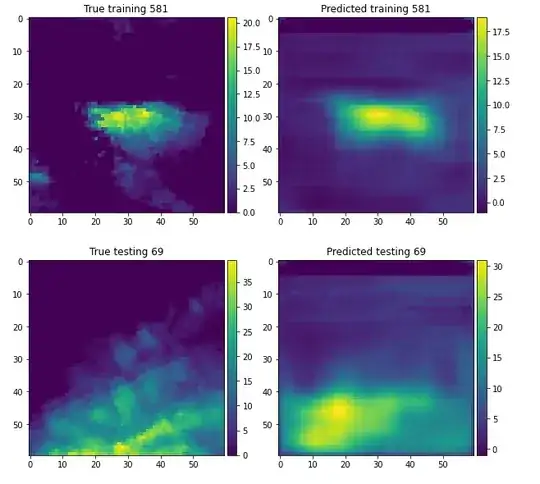

I am currently using a U-Net with skip connections to predict images from data recorded 30 minutes earlier. Most pixels in the true image are 0, and the values span approximately [0, 50]. The network predicts low, non-zero values everywhere, apparently because the loss function does not penalize this heavily. How can I modify the network to get around this problem? Would a custom loss function help, or is there some other way?
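To make the custom-loss idea concrete, here is a minimal sketch of one possible form: plain MSE plus an extra absolute-error term applied only where the target is exactly 0. Squared error shrinks quickly for small residuals, whereas an absolute-error term keeps a constant gradient, so low non-zero predictions on the background cost more. The function name `background_penalized_mse` and the `bg_weight` parameter are hypothetical, the weight value is an untuned guess, and the code uses NumPy only to show the arithmetic; in practice the same expression would be written with the training framework's tensor operations.

```python
import numpy as np


def background_penalized_mse(y_true, y_pred, bg_weight=5.0):
    """MSE plus a weighted L1 penalty on pixels whose target is exactly 0.

    bg_weight is a hypothetical knob (not tuned on any real data) that sets
    how strongly non-zero predictions on the background are punished.
    """
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)

    # Standard mean squared error over every pixel.
    mse = np.mean((y_pred - y_true) ** 2)

    # Mean absolute prediction, counted only where the true value is 0
    # (other pixels contribute 0 to the sum but still count in the mean).
    bg_mask = (y_true == 0)
    bg_l1 = np.mean(np.abs(y_pred) * bg_mask)

    return mse + bg_weight * bg_l1


# Toy example: three background pixels predicted as 0.5, one exact hit at 50.
y_true = np.array([[0.0, 0.0], [0.0, 50.0]])
y_pred = np.array([[0.5, 0.5], [0.5, 50.0]])
loss = background_penalized_mse(y_true, y_pred)
```

With `bg_weight=0` this reduces to ordinary MSE, so the weight can be raised gradually to see whether the background haze disappears without hurting the non-zero regions.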

I am also working on modifying the data so that I can use a classification scheme instead of regression, although I would really prefer to stay with regression. I am also experimenting with normalizing the data to [0, 1].
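For reference, the [0, 1] normalization could look like the sketch below. It assumes a fixed maximum of 50, taken from the approximate range stated above; the constant `DATA_MAX` and both helper names are hypothetical. Values above the assumed maximum are clipped, and predictions are mapped back to the original units before evaluation.

```python
import numpy as np

DATA_MAX = 50.0  # assumed upper bound, from the approximate [0, 50] range


def normalize(x, data_max=DATA_MAX):
    """Scale raw values to [0, 1], clipping anything outside [0, data_max]."""
    return np.clip(np.asarray(x, dtype=np.float64) / data_max, 0.0, 1.0)


def denormalize(x, data_max=DATA_MAX):
    """Map normalized values (e.g. network predictions) back to raw units."""
    return np.asarray(x, dtype=np.float64) * data_max


raw = np.array([0.0, 12.5, 50.0, 60.0])
scaled = normalize(raw)          # 60.0 exceeds DATA_MAX and is clipped to 1.0
restored = denormalize(scaled)
```

Note that an MSE computed on normalized data is smaller by a factor of `DATA_MAX ** 2` than the same error in raw units, so loss values before and after normalization are not directly comparable.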

Further info: for regression, I am using MSE as the loss. The network appears to learn the training data fairly well (the training loss keeps falling), but performance on the validation data reaches a limit. No form of regularization has stopped the network from overfitting. I've tried L2 regularization, L1 regularization, and dropout.