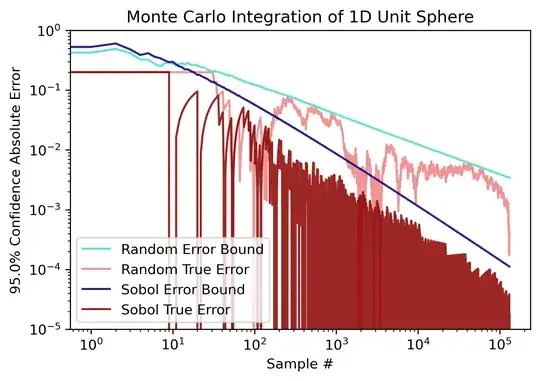

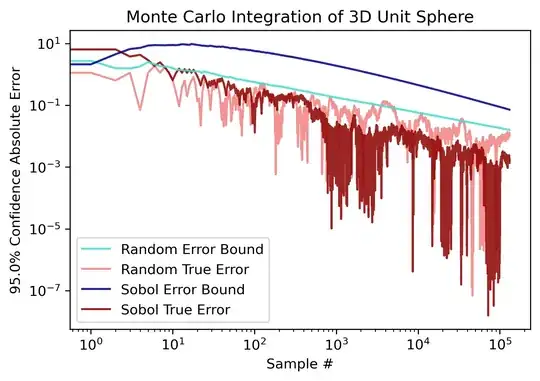

My understanding is that Monte Carlo integration using random sampling converges at a rate of $O(\frac{1}{\sqrt{n}})$, while quasi-Monte Carlo (QMC) integration using Sobol sampling converges at a rate of $O(\frac{(\log{n})^d}{n})$, where $n$ is the number of samples and $d$ is the dimension. However, I'm having trouble converting this big-O notation into concrete error bounds.

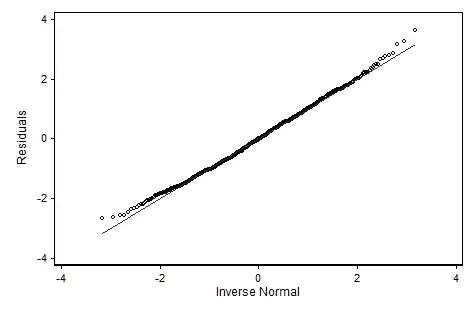

This source gives an equation for calculating error bounds under random sampling. Given $n$ random samples with variance $\sigma^2$ drawn from a volume $V$ and a chosen confidence level $\gamma \in (0,1)$, the integration error is bounded by $\epsilon_\gamma = V\sigma\Phi^{-1}(\gamma)\frac{1}{\sqrt{n}}$, which follows from the central limit theorem.
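To make the formula concrete, here is a minimal sketch that evaluates it exactly as quoted, using only the Python standard library. The function name `mc_error_bound` and the input values are hypothetical and chosen purely for illustration; $\Phi^{-1}$ is taken to be the standard normal quantile function.

```python
import math
from statistics import NormalDist

def mc_error_bound(volume, sigma, n, gamma):
    """Evaluate V * sigma * Phi^{-1}(gamma) / sqrt(n), the bound quoted above."""
    z = NormalDist().inv_cdf(gamma)  # Phi^{-1}(gamma): standard normal quantile
    return volume * sigma * z / math.sqrt(n)

# Hypothetical inputs: unit volume, sigma = 1, 10,000 samples, gamma = 0.975
eps = mc_error_bound(volume=1.0, sigma=1.0, n=10_000, gamma=0.975)
print(eps)
```

Because of the $\frac{1}{\sqrt{n}}$ factor, quadrupling $n$ halves this bound.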

Is finding the error bound for Sobol sampling just as easy? Should I be using the following? $\epsilon_\gamma = V\sigma\Phi^{-1}(\gamma)\frac{(\log{n})^d}{n}$

In my empirical tests of 1-dimensional sequences it seems to be a reasonable (if anything, too high) upper bound, but I want to make sure I'm theoretically grounded here since higher dimensions are harder to test.
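For reference, a minimal sketch of the kind of 1-dimensional check described above. It is not my exact test code, and it rests on several assumptions: it uses the base-2 van der Corput sequence (which coincides with the first dimension of an unscrambled Sobol sequence), a hypothetical integrand $f(x) = x^2$ on $[0, 1]$ with $V = 1$, and $\gamma = 0.975$.

```python
import math
from statistics import NormalDist

def van_der_corput(i):
    """Base-2 radical inverse of the integer i, giving a point in [0, 1)."""
    x, denom = 0.0, 1.0
    while i > 0:
        denom *= 2.0
        x += (i & 1) / denom  # mirror the lowest bit of i across the binary point
        i >>= 1
    return x

def f(x):
    return x * x  # hypothetical test integrand; exact integral on [0, 1] is 1/3

n = 1024
estimate = sum(f(van_der_corput(i)) for i in range(n)) / n
actual_error = abs(estimate - 1.0 / 3.0)

# Conjectured bound from the question, with V = 1, d = 1, gamma = 0.975
sigma = math.sqrt(1.0 / 5.0 - 1.0 / 9.0)  # std. dev. of x^2 for x uniform on [0, 1]
z = NormalDist().inv_cdf(0.975)
conjectured = 1.0 * sigma * z * math.log(n) ** 1 / n

print(actual_error, conjectured)
```

In this one case the actual error comes out below the conjectured value, which matches what I observed, but a single integrand in one dimension says nothing about whether the formula is a valid bound in general.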