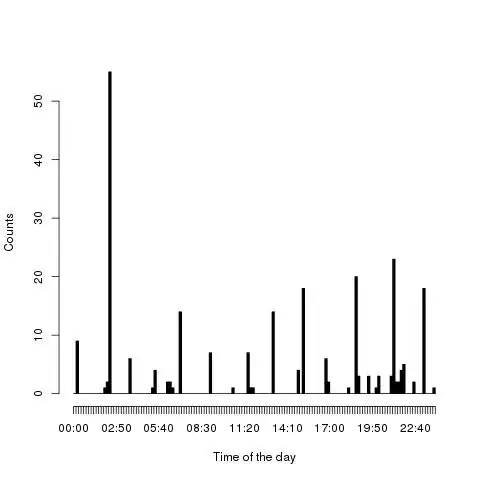

I am training an LSTM for a regression problem, but the loss randomly shoots up, as shown in the picture above.
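To find exactly when the loss shoots up, one option is to scan the per-epoch loss history, for example `history.history["loss"]` returned by Keras `model.fit`. The following is a minimal pure-Python sketch. The function name `find_loss_spikes` and the `factor` threshold (3× the best loss seen so far) are hypothetical choices, and the sketch assumes non-negative losses such as MSE:

```python
import math
from typing import List


def find_loss_spikes(losses: List[float], factor: float = 3.0) -> List[int]:
    """Return indices where the loss is NaN or exceeds `factor` times the best loss so far.

    `factor` is an arbitrary, hypothetical threshold; assumes non-negative losses (e.g. MSE).
    """
    spikes = []
    best = math.inf
    for i, loss in enumerate(losses):
        if math.isnan(loss) or loss > factor * best:
            spikes.append(i)
        if not math.isnan(loss):
            best = min(best, loss)  # track the lowest loss seen so far
    return spikes


print(find_loss_spikes([1.0, 0.5, 0.3, 0.25, 2.0, 0.24, 0.2]))  # [4]
```

Logging the loss per batch instead of per epoch (for instance from a Keras callback's `on_train_batch_end`) would give finer resolution on where a spike starts.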

I tried several things to prevent this: adjusting the learning rate, changing the number of neurons in my layers, adding L2 regularization, and using `clipnorm` or `clipvalue` in the optimizer. None of them seems to help. I checked my input data for null/infinite values and found none, and the data is also normalized. Here is my code for reference:

Each input sample is a sequence of shape (100, 2), and each output is a sequence of shape (100, 1). I don't know how to deal with this kind of explosion. The model works fine for easy regression cases, but the loss blows up when I make the problem more complex. It is similar to predicting a sine wave: in the easy case only the amplitude varies, while in the complex case the frequency, amplitude, and phase all vary. I'd appreciate any help!
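To reproduce the easy and complex cases in isolation, toy data with the same shapes can be generated. This NumPy sketch is hypothetical. The question does not say what the two input channels are, so they are assumed here to be a normalized time index and a noisy copy of the signal, with the clean signal as the target. The parameter ranges and the `make_sine_dataset` name are also arbitrary:

```python
import numpy as np


def make_sine_dataset(n_samples, seq_len=100, complex_case=True, noise=0.05, seed=0):
    """Hypothetical toy data: X has shape (n, seq_len, 2), y has shape (n, seq_len, 1).

    Assumed input channels: [normalized time, noisy signal]; target: clean signal.
    Easy case: only the amplitude varies. Complex case: amplitude, frequency, phase vary.
    """
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 2.0 * np.pi, seq_len)            # shared time grid, shape (seq_len,)
    amp = rng.uniform(0.5, 2.0, size=(n_samples, 1))       # amplitude always varies
    if complex_case:
        freq = rng.uniform(0.5, 3.0, size=(n_samples, 1))
        phase = rng.uniform(0.0, 2.0 * np.pi, size=(n_samples, 1))
    else:
        freq = np.ones((n_samples, 1))
        phase = np.zeros((n_samples, 1))
    clean = amp * np.sin(freq * t + phase)                 # broadcasts to (n_samples, seq_len)
    noisy = clean + noise * rng.standard_normal(clean.shape)
    time = np.broadcast_to(t / t[-1], clean.shape)         # time scaled to [0, 1]
    X = np.stack([time, noisy], axis=-1)                   # (n_samples, seq_len, 2)
    y = clean[..., np.newaxis]                             # (n_samples, seq_len, 1)
    return X.astype(np.float32), y.astype(np.float32)


X, y = make_sine_dataset(8)
print(X.shape, y.shape)  # (8, 100, 2) (8, 100, 1)
```

Training the same model on `complex_case=False` and `complex_case=True` data would show whether the spikes appear only for the harder variant.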

PS: I have tried changing each hyperparameter, but it doesn't seem to help. When I train on data from a simple sine wave, it works fine.
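The two optimizer options mentioned in the question bound the gradient differently. According to the tf.keras optimizer documentation, `clipnorm` clips each weight's gradient individually so that its norm is no higher than the given value, while `clipvalue` clips each weight's gradient to be no higher than the given value. The following NumPy sketch illustrates those semantics only. It is not the library's code, and the function names are hypothetical:

```python
import numpy as np


def clip_each_by_norm(grads, clipnorm):
    """Rescale each gradient array whose L2 norm exceeds `clipnorm` (direction preserved)."""
    clipped = []
    for g in grads:
        norm = np.linalg.norm(g)
        clipped.append(g * (clipnorm / norm) if norm > clipnorm else g)
    return clipped


def clip_each_by_value(grads, clipvalue):
    """Clip every gradient element to [-clipvalue, clipvalue] (direction may change)."""
    return [np.clip(g, -clipvalue, clipvalue) for g in grads]


grads = [np.array([3.0, 4.0]), np.array([0.1, -10.0])]
print(clip_each_by_norm(grads, 1.0))   # first array becomes [0.6, 0.8]
print(clip_each_by_value(grads, 1.0))  # second array becomes [0.1, -1.0]
```

Because `clipvalue` clips elements independently, it can change the gradient's direction, whereas `clipnorm` only shortens it.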