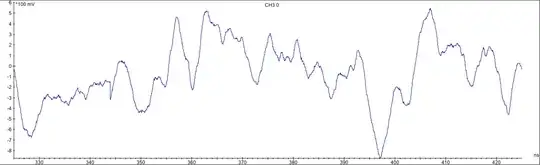

As part of an experiment, I need to collect time-series samples that are tightly associated with some input data. I send this data to an external device and then collect the associated trace using my measurement equipment. An example trace:

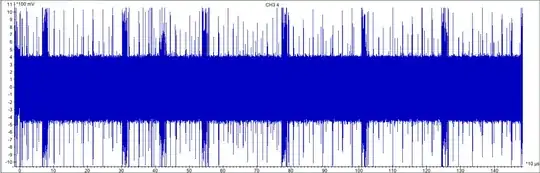

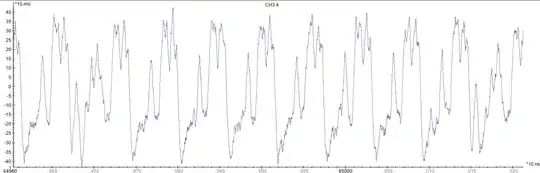

However, I need to collect a lot of these traces for the experiment to be successful. I've found that the best way to speed up data collection is to send a random seed to the device, which then generates many inputs on-device and runs the target function on them in succession. I then simply collect one much longer trace that contains the target subpattern many times. For example, this trace contains 25k subtraces for the target operation (zoomed in below):

Now I enter the postprocessing phase for my data. My goal is to separate out the repeated subpatterns and associate each one with the correct input data generated from the random seed. For further steps in the data analysis, it is critical that each subpattern is matched exactly with its correct input data. However, I'm finding it difficult to come up with a technique that robustly splits the larger trace into its component subpatterns. I have the following assumptions I can work with:

- I know the exact number of subpatterns that appear in the overall trace.

- Subpatterns-of-interest are approximately evenly spaced.

My current approach calculates the Pearson correlation between each candidate subpattern and a manually selected reference subpattern. Where the correlation is high, I can be reasonably sure that a subpattern-of-interest occurs at that position. However, there is also some noise in my overall trace, which leads to low correlation values for the subpatterns in the affected regions. Therefore, I need a method that robustly estimates how many subpatterns occur in the noisy regions, so that I can continue to correctly derive the input data for any clean subpatterns that follow.
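A minimal sketch of this correlation step, assuming NumPy (1.20 or later for `sliding_window_view`) and a 1-D trace. The function name `sliding_pearson` and the argument names are hypothetical, and the sketch favors clarity over memory use:

```python
import numpy as np

def sliding_pearson(trace, ref):
    """Pearson correlation of `ref` against every window of `trace`.

    Returns an array of length len(trace) - len(ref) + 1, where entry i
    is the correlation between ref and trace[i : i + len(ref)].
    """
    trace = np.asarray(trace, dtype=float)
    ref = np.asarray(ref, dtype=float)
    # View of all overlapping windows, shape (n - m + 1, m).
    windows = np.lib.stride_tricks.sliding_window_view(trace, ref.size)
    # Centering materializes a full copy; process long traces in chunks.
    w_centered = windows - windows.mean(axis=1, keepdims=True)
    r_centered = ref - ref.mean()
    num = w_centered @ r_centered
    den = np.sqrt((w_centered ** 2).sum(axis=1)) * np.sqrt((r_centered ** 2).sum())
    # Constant windows have zero variance; report correlation 0 there.
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)
```

Peaks in the returned array above some threshold would mark candidate subpattern starts; the threshold itself is something I pick by hand.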

As someone who's not particularly trained in statistics, I'm specifically asking these questions:

- What are some specific techniques I could look into to improve this pattern-matching-based postprocessing solution and correctly derive the associated data?

- What are some ways in which I can formally split these traces apart? All of my approaches so far have felt overly heuristic, and I'm interested in what the typical mathematical formalism for this looks like.

- Are there better approaches, not based on pattern matching, that I could use for something like this?