In a single dimension Gaussian, the variance $\sigma$ denotes the expected value of the squared deviation from the mean $\mu$.

I am trying to understand why in the multivariate case of modeling variable $\mathbf{x}$ we end up having a matrix $\Sigma^{-1}$. Why not instead of a vector which in each dimension shows the variance of the input variable $\mathbf{x}$.

From Wikipedia the 2d Gaussian function is represented as:

$f(x,y) = A \exp\left(- \left(\frac{(x-x_o)^2}{2\sigma_X^2} + \frac{(y-y_o)^2}{2\sigma_Y^2} \right)\right)$

Why not use a form like that for the multivariate Gaussian with $\mathbf{\sigma} = [\sigma_{X} \ \sigma_{Y}]^{T}$? Given than my vector $\mathbf{x} = [x \ y]^{T}$.

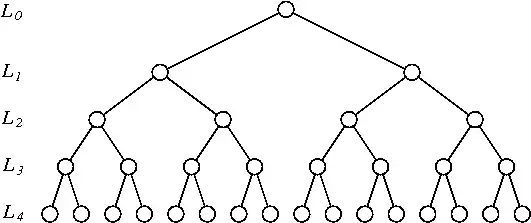

How this is interpreted in the following example: