I have been using LSTM multi-output Neural Nets to perform two tasks, regression coupled with a classification. The data is in a time-series format where my dependent variable is trade quantity between nations as well as an indicator as to whether they trade at all. The problem I'm running into, is that my network predicts only a single value for the regression (~0.36), which is not the mean for the training data, and only 1's (100% probability for each sample) for the classification.

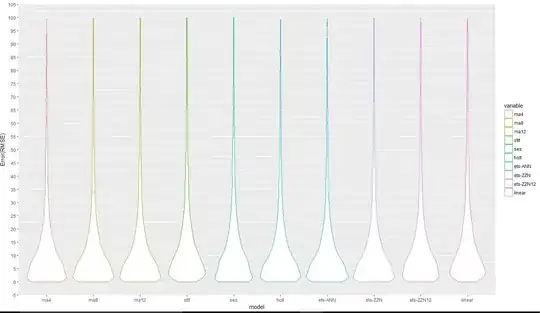

The normalised data distributions for training, validation and testing look like this

For the classification problem there is about a 5:1 ratio of 1's to 0's but nothing excessively imbalanced. My network setup looks like this, but I would usually pass it through a genetic algorithm for hyper parameter tuning.

callback = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=3, min_delta=1e-9)

inputs = keras.layers.Input(shape=(x_train.shape[1], x_train.shape[2]))

layer = LSTM(128, input_shape=(x_train.shape[1], x_train.shape[2]), return_sequences=True)(inputs)

layer = keras.layers.Dropout(0.2)(layer)

layer = LSTM(64, return_sequences=False)(layer)

layer = keras.layers.Dropout(0.2)(layer)

layer = Dense(32, activation='relu')(layer)

partner_layer = Dense(1, activation='softmax', name='partner_pred')(layer)

trade_layer = Dense(1, name='trade_pred')(layer) # No activation due to regression, possible sigmoid as values are between 0 and 1.

model = keras.models.Model(inputs, [partner_layer, trade_layer])

model.compile(loss={'trade_pred': 'mse', 'partner_pred': 'binary_crossentropy'}, optimizer=Adam(learning_rate=0.009),

loss_weights={'trade_pred': 1, 'partner_pred': 10},

metrics={'trade_pred': 'mse', 'partner_pred': 'accuracy'})

history = model.fit(x_train, {'trade_pred': y_train[:, 0], 'partner_pred': y_train[:, 1]}, epochs=int(300), batch_size=int(512), validation_data=(x_val, {'trade_pred': y_val[:, 0], 'partner_pred': y_val[:, 1]}), verbose=1,

shuffle=False, callbacks=[callback])

Am I forgetting something in the network structure? I already tried fiddling with the weighting of the loss functions, but to no avail. Still the same prediction.